ABA Testing

I’m at the eMetrics Summit in Santa Barbara, and there are lots of cool lessons to be heard, some of which I’ll write about in the following days. But today, I’m writing about lunch.

I didn’t really notice what I *ate*, but I sat with Matt Roche from Offermatica (whom I mentioned a few days ago but only met today) and Bill Bruno from Stratigent. Over lunch, I asked them both what they thought of ABA testing, which companies sometimes use when they have too few visitors (too little data) to run a standard split-path test.

With ABA, the company runs an A/B test with three groups instead of two. Visitors are split randomly into three groups. One group sees the test page (the B group); the other two both see the same control page (the two A groups). When the first A group’s conversion rate matches the second A group’s, the company running the test decides it has collected enough data and declares either the control or the new test page the winner.
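To make the stopping rule concrete, here is a minimal simulation sketch of the procedure as described above. It is my own illustration, not Offermatica’s or Stratigent’s method. The true conversion rates, the `tolerance` for deciding that the two A groups are “the same,” and the `min_per_group` warm-up are all hypothetical parameters chosen for the example.

```python
import random

def run_aba_test(p_control, p_test, tolerance=0.001, min_per_group=100,
                 max_visitors=100_000, seed=0):
    """Simulate the ABA stopping rule (hypothetical parameters throughout).

    p_control / p_test: assumed true conversion rates for the A and B pages.
    tolerance: how close A1 and A2 must be to count as "the same" (assumption).
    Returns (visitors_seen, rate_a1, rate_a2, rate_b, winner).
    """
    rng = random.Random(seed)
    groups = ["A1", "A2", "B"]
    visits = {g: 0 for g in groups}
    conversions = {g: 0 for g in groups}
    true_rate = {"A1": p_control, "A2": p_control, "B": p_test}

    for n in range(1, max_visitors + 1):
        group = rng.choice(groups)  # random three-way split
        visits[group] += 1
        if rng.random() < true_rate[group]:
            conversions[group] += 1

        if min(visits.values()) < min_per_group:
            continue  # don't compare rates on a handful of visitors

        rates = {g: conversions[g] / max(visits[g], 1) for g in groups}
        # The ABA rule: stop as soon as the two control groups agree.
        if abs(rates["A1"] - rates["A2"]) <= tolerance:
            pooled_a = (conversions["A1"] + conversions["A2"]) / (visits["A1"] + visits["A2"])
            winner = "B" if rates["B"] > pooled_a else "A"
            return n, rates["A1"], rates["A2"], rates["B"], winner

    # The two A groups never agreed within the visitor budget.
    rates = {g: conversions[g] / max(visits[g], 1) for g in groups}
    return max_visitors, rates["A1"], rates["A2"], rates["B"], None


if __name__ == "__main__":
    n, a1, a2, b, winner = run_aba_test(0.030, 0.033, seed=42)
    print(f"stopped after {n} visitors: A1={a1:.4f} A2={a2:.4f} B={b:.4f} -> {winner}")
```

Changing `seed` while keeping the same true rates is a quick way to see how much the declared winner and the stopping point depend on luck rather than on the pages themselves.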

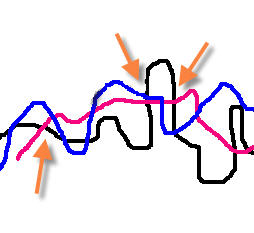

Matt and Bill both dismissed ABA testing as a not-too-great idea. Matt drew a picture on the back of Bill’s business card, showing how data usually presents itself:

It’s never very clean, he said. The data doesn’t usually follow a nice smooth curve; it bounces all over the place. In the picture above, the two A groups are in black and red, and the B group is in blue. At which arrow should we say the two A groups are the same: the first, where blue is winning; the second, where all three are tied; or the third, where blue is losing?
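Matt’s point can be reproduced with a small simulation sketch. It tracks the running conversion rates of A1, A2 and B as visitors arrive and records every moment the two A curves agree, which is every “arrow” at which an ABA tester might stop. At each such point it notes whether B looks like a winner, a loser or a tie. The rates, the `tolerance`, the 50-visitor warm-up and the one-visitor-per-group-per-step arrival pattern are hypothetical simplifications, not anything Matt or Bill prescribed.

```python
import random
from collections import Counter

def crossing_points(p_control, p_test, visitors_per_group=2000,
                    tolerance=0.0005, seed=1):
    """Return (step, verdict) for every step where A1 and A2 agree.

    Each step adds one visitor to each of A1, A2 and B (a simplifying
    assumption). p_control, p_test and tolerance are hypothetical values.
    """
    rng = random.Random(seed)
    conv = {"A1": 0, "A2": 0, "B": 0}
    p = {"A1": p_control, "A2": p_control, "B": p_test}
    verdicts = []
    for n in range(1, visitors_per_group + 1):
        for g in conv:
            if rng.random() < p[g]:
                conv[g] += 1
        a1, a2, b = conv["A1"] / n, conv["A2"] / n, conv["B"] / n
        # An "arrow": the two control curves look the same here.
        if n >= 50 and abs(a1 - a2) <= tolerance:
            control = (a1 + a2) / 2
            if b > control + tolerance:
                verdict = "B wins"
            elif b < control - tolerance:
                verdict = "B loses"
            else:
                verdict = "tie"
            verdicts.append((n, verdict))
    return verdicts


if __name__ == "__main__":
    # B is identical to the control here, so any "B wins" or
    # "B loses" verdict is pure noise.
    points = crossing_points(0.03, 0.03)
    print(Counter(v for _, v in points))
```

Because B is given exactly the same true rate as the control in the usage example, every verdict other than “tie” is an artifact of when you happened to stop, which is the problem Matt sketched on the business card.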