Using ALL Of Google Webmaster Tools Data To Tackle (not Provided)

I couldn’t believe it when I saw the January 7, 2014th Webmaster Tools update,

“data in the search queries feature will no longer be rounded / bucketed.”

At first I thought, why would Google go through all that trouble to obfuscate keyword data in Google Analytics, when they planned on handing all that data back through the search query reports in Webmaster Tools? And of course, they didn’t plan on anything of the sort. The relatively minor update only removes bucketing, and does not address the big issue, that they display only 20% to 25% of search query data. I held out hope that, as it appears in the before and after pictures, the sampling rate had been increased from around 20% to around 35%. But while I’ve noticed small changes in some accounts, it does not appear they’ve made this improvement.

So, how much of a boon IS the retraction of bucketing in GWT’s search queries? There definitely isn’t anyone complaining. It’s great to no longer see “<10 clicks” for our long tail queries. Of course, the biggest cost of (not provided) to the digital marketing community is the new-found powerlessness to relate search intent with landing page and overall site performance. While much energy and creativity is channeled towards addressing this issue with third party tools, I believe there is yet untapped insight inside Google Webmaster Tools.

Patch Analytics with Webmaster Tools

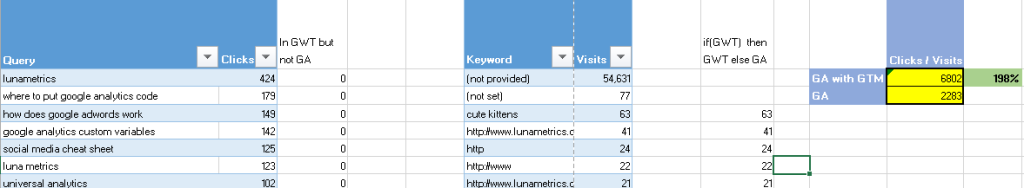

Before we get into the scope of this article, it is worth a shout out to Ben Goodsell who came up with a nice way to beat the bucketing over a year ago. Now that we no longer have to worry about bucketing, we can use an easier variation of his method to combat (not provided). After downloading the organic keyword data from Google Analytics and the search query data from Google Webmaster Tools, I used the latter (now accurate) data to correct the former. I won’t go into the details of my Excel setup, but I included a screenshot below. I can post the setup if there is interest. In this case, we went from 2283 visits with defined keywords in GA to 6802, using the GWT data. Of course when you only start with 4% of your organic visits as not (not provided), a 198% increase is not as impressive. Still, it is better than nothing.

Re-connecting Queries with Landing Pages

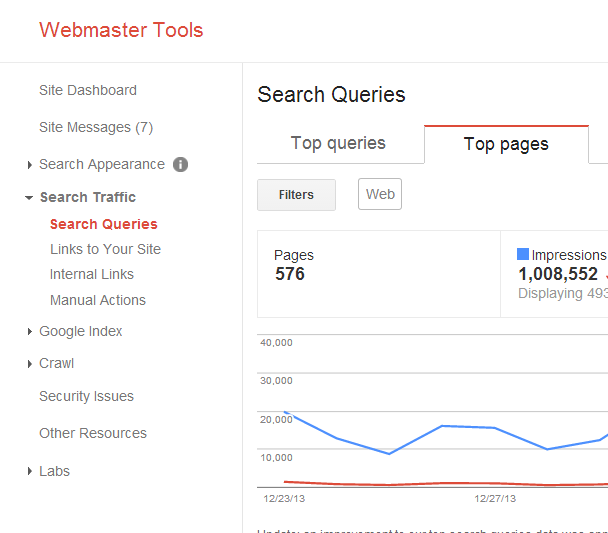

Short of using Google Tag Manager to import optimized keywords to your Google Analytics (which everyone should also do, by the way) Webmaster Tools still provides the last in-house way of connecting search queries with your site content. Below is the Search Query->Top Pages report from GWT

The Top Pages Report in GWT

Notice the number of clicks, circled in green. When I first saw this, I did another impression of the Toronto Raptor, thinking I had discovered a loophole in GWT’s sampling methods. But of course, the ‘displaying 136,552 of 153,511 clicks’ means that nearly 90% of clicks are accounted for in terms of landing page. When you drill down into Keyword by Page, observe that only the standard 20% to 30% of search queries are accounted for. Still pretty neat, though, huh? You can now get an (exact) number of clicks for a given page for any search queries that made it past Google’s sampling method. What could we do with that data? Well it would be great to export it, play around with it, and see what types of additional insights we can draw. Which brings us to the next point of our review of GWT.

Poor API Support!

The only part of Google Webmaster tools as frustrating as the (former) bucketing and (ongoing) sampling, is the lack of official API support. There is a an official Java API that cannot return search query data; only crawled keywords,crawl issues, etc. And the unofficial APIs that I have seen (PHP and Python) do not support easy OAuth integration, and have only limited support for search queries. Even the Google Analytics integration is lacking. The search query data cannot be combined with any meaningful GA metric, and, to make things worse, the imported data is still being bucketed! So, to access the Search Queries->Top Pages report without any heavy coding, we need to use the GWT user interface.

Search Queries->Top Pages Export

Unlike the standard Top Queries report, we cannot export the complete Top Pages report via the UI. The best we can do is export the summarial table with a breakdown only by pages (and not search queries). We could also technically scroll down the page, expanding each of the links by hand, but that would be painful. I wrote a couple JavaScript functions to automate the process. The code is rough, but it does download ‘page’, ‘search query’, and ‘clicks’ columns for each search query entry, in TSV format for Excel. The code is available from GitHub, and is also included below. I have only used it in Chrome.

Steps to export your Search Query->Top Page report from Google Webmaster Tools:

https://www.google.com/webmasters/tools/top-search-queries? hl=en&siteUrl=http://www.yoursite.com/&de=20140121&db=20131101&more=true&qv=amount &type=urls&prop=WEB®ion&grid.s=719

//expand page entries

(function(){

pages = document.getElementsByClassName('goog-inline-block url-detail');

for(i=0;i<pages.length;i++){

pages[i].setAttribute('href','#');

pages[i].setAttribute('target','');

pages[i].click();

}

})();

- Log into GWT and navigate to Search Traffic->Search Queries->Top Pages.

- Set the grid.s=25 parameter in the URL to however many pages you want to download. You should also order the pages by clicks if you are downloading less than the maximum number of rows.

- Set your desired date range. Up to three months prior is available in GWT. As a side note, it might be a good idea to backup your data every three months.

- Press F12 to open the JavaScript Developer Tools. Select ‘Console’

- First, copy and paste the below JavaScript code into the Developer Tools console. Hit enter. You will be presented with an alert for each page entry in the table that Google is unable to expand. Simply hit enter to cycle through the alerts. When it appears all alerts are done, and all the page entries that Google can access have been expanded, proceed to the next step.

- Second, copy and paste the below JavaScript into the Developer Tools console. Hit enter. As long as your pop-ups are not disabled, you will be prompted to download a TSV with your GWT search queries->page data.

//generate download link

(function(){

//make index for page rows

//getting page rows separate from query rows

//ordering them, storying 2-item array for

//each page row, page path and index in

//table

temp = document.getElementById('grid').children[1].children;

indices = new Array();

tableEntries = Array.prototype.slice.call( temp)

pageTds = document.getElementsByClassName('url-expand-open');

for(i=0;i<pageTds.length;i++){

temp = tableEntries.indexOf(pageTds[i]);

indices.push([temp,pageTds[i].children[0].children[0].text]);

}

pageTds = document.getElementsByClassName('url-expand-closed');

for(i=0;i< pageTds.length;i++){

temp = tableEntries.indexOf(pageTds[i]);

indices.push([temp,pageTds[i].children[0].children[0].text]);

}

indices.sort(function(a,b){return a[0]-b[0]});

// this is complicated. need to mess with with index of

// table rows since the aggregate page listing

// is row just like expanded query rows

for(i=indices.length-1;i> 0;i--){

test = indices[i][0]-indices[i-1][0];

if(test===1){

indices[i-1][1]=indices[i][1];

indices[i][0]++;

}

}

thisCSV = "pagetkeywordtimpressionstclicksn";

queries = document.getElementsByClassName("url-detail-row");

//use count to know when to update the page

//column for the TSV. sorry if convoluted,

//did this quickly not elegantly

count = 0;

for(i=0;i<queries.length;i++){

if(indices[count][0]===i){

thisPage = indices[count][1];

do {

count++;

test = indices[count][0]-indices[count-1][0];

} while(test === 1);

indices[count][0]-=(count);

//because the pages and keywords are all in

//tags, and were counted as the same level in the index

//before

}

thisCSV += thisPage+"t";

l = queries[i].children[0].children.length

if(l > 0) thisCSV+= queries[i].children[0].children[0].text+"t";

else thisCSV+= queries[i].children[0].innerHTML+"t";

thisCSV += queries[i].children[1].children[0].innerHTML+"t";

thisCSV += queries[i].children[3].children[0].innerHTML+"n";

}

//create href and click it as means to save tsv

encodedUri = "data:text/csv;charset=utf-8,"+encodeURI(thisCSV);

link = document.createElement("a");

link.setAttribute("href", encodedUri);

//update name w timestamp if you want

link.setAttribute("download", "GWT_data.tsv");

link.click();

})();

Delving into the Data

Now that we’ve downloaded the data, let’s talk about what we can do with it. Why did we even download it in the first place? Well, as we mentioned in step 3, GWT data is only available for the past three months. If you regularly backup your data, you will have access to more than three months, and may be able to conduct better keyword analysis. In addition to maintaining historical data, we may be able to glean insight by sorting it and comparing to other data sets. I’ll outline how I used Excel for such a project. My approach was to increase the proportion of total data accounted for by the data displayed in Google Webmaster tools, based on the following assumption.

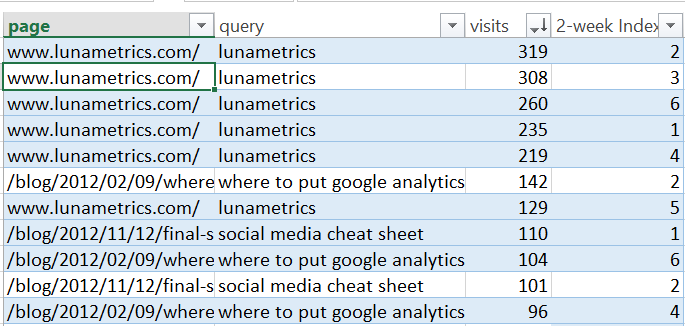

Assumption: the process by which Google filters (chooses which queries are displayed in GWT) is not dependent on the keywords themselves. In other words, while Google might, for example, tend to display less long-tail keywords to us, they are not always blocking the same keywords on a weekly or monthly basis. If the above assumption holds true, we can partition data into weekly or monthly segments, and then estimate clicks for queries that appear in some time segments, but not in others. This technique would be likely be safer when working with monthly data, as there is a better chance the above assumption is met. For sake of demonstration, I download the last three months’ Search Query->Top Pages data and partition it into six two-week segments. After importing into excel, I create a master list, previewed below.

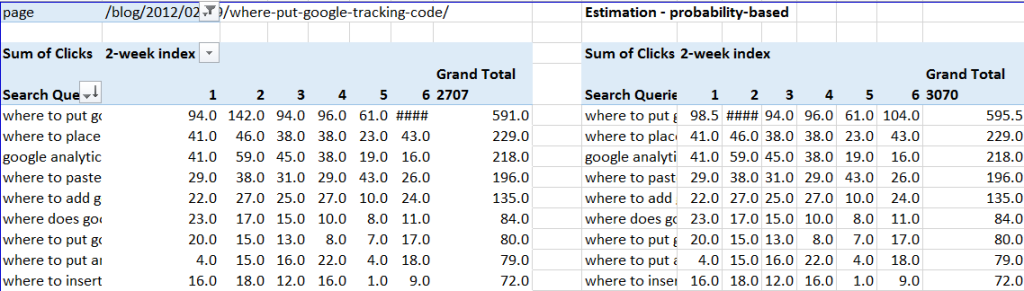

The fourth column is an index that represents the the two-week time period. Next I create a pivot chart with the data, and I am able to display a chart with query parameters as rows and the two-week time periods as columns. The values listed as visits are actually clicks. This method is most applicable to the search queries with a medium-level of clicks. These queries are common enough that they can be expected to be searched every two-weeks or month, but not so common that they need to be regularly included in the GWT reports (or else be conspicuously absent).

Left: Pivot Charts of Page-Level Search Queries. Right: With Missing Clicks Estimated

Results

Using this method, I’ve accounted for 13% more clicks (visits) without introducing new keywords. Further, I’ve only used:

- three months of search query data, and

- a small website with

- quickly changing web pages (the vast majority of landing pages are blog articles).

This method will be even more useful for:

- Those with more than three months historic data

- larger websites

- websites with more-static web pages.

Extensions

- Scale the monthly estimated corrections using aggregate search volume data. This will help to determine whether the absence of a search query is due to filtering by Google or just a lack of interest by searchers.

- Use Dimension Widening to import the refined search query data into Google Analytics, associating it with the landing page dimension.

Assumptions Revisited

I had reasoned that between the two-week periods, there are keywords that are sometimes displayed to us in Google Webmaster Tools, and that are sometimes blocked. For any search queries where some two-week periods have zero clicks, I average how many clicks/two-weeks they received over the three-month period, and assign that value to the given query. While there are certainly legitimate cases where a search query had no clicks for a given week, I reason that the error of wrongly assigning a search query click to a given page is less than the gain netted in terms of better understanding our search queries (and on a page-by-page basis at that!)

And what if Google is consistently hiding the same keywords over the three-month period? I would argue that this would be very hard for Google to achieve while still displaying a relatively consistent percentage of total queries. (what happens if site traffic drops so much on a small web site that Google would be forced to display more than 20% or 30% of keywords?) They probably need to display keywords that have before been classified as hidden, even if they do not admit it.