Using Latent Dirichlet Allocation To Brainstorm New Content

I recently had a problem with my client – I ran out of things to write about. The client, a chimney sweep, has been with our company for 3 years and in that time we have written every article under the sun informing people about chimneys, the issues they cause, potential hazards, and optimal solutions. All of that writing has worked and worked well. We have seen over 100% traffic increases YoY. The challenge now is to keep that momentum.

Brainstorming sessions weren’t working. They looked more like a list of accomplishments than of new ideas. Each new idea seemed like we were slightly changing an already successful article written in the past. I wanted something new and I wanted to make sure it was tied to a strategy. Tell me if this sounds familiar!

So I internalized the problem. I let it smolder and waited for the answer. Then, while I was reflecting on the effects of website architecture and content consolidation, topic modeling popped into my head. If I could scrape the content we’ve already written and throw it into a Latent Dirichlet Allocation (LDA) model, I could let the algorithm do the brainstorming for me.

For those of you unfamiliar with Latent Dirichlet Allocation, it is:

“a generative model that allows sets of observations to be explained by unobserved groups that explain why some parts of the data are similar. For example, if observations are words collected into documents, it posits that each document is a mixture of a small number of topics and that each word’s creation is attributable to one of the document’s topics.” –Wikipedia

All that basically says is that a website has a lot of articles, and each of those articles relates to a topic of some sort. By using LDA, we can programmatically determine what the main topics of a website are. (If you want to see a great visualization of LDA at work on 100,000 Wikipedia articles, check this out.)

So, by applying LDA to our previously written articles, we can hopefully find areas to write about that will help my client be seen as more authoritative in certain topics.
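If you want a feel for what happens under the hood, here is a minimal sketch of LDA fitted with collapsed Gibbs sampling, written in plain Python. It is a toy for intuition, not the tool's implementation: the function name, the `alpha` and `beta` smoothing values, and the sample documents are all assumptions made up for illustration.

```python
import random
from collections import Counter


def lda_top_words(docs, num_topics, iterations=200, alpha=0.1, beta=0.01,
                  top_n=5, seed=0):
    """Toy collapsed Gibbs sampler for LDA. `docs` is a list of token lists."""
    rng = random.Random(seed)
    vocab_size = len({word for doc in docs for word in doc})

    # Count tables: topics per document, words per topic, words per topic total.
    doc_topic = [[0] * num_topics for _ in docs]
    topic_word = [Counter() for _ in range(num_topics)]
    topic_total = [0] * num_topics

    # Start by assigning every word occurrence to a random topic.
    assignments = []
    for d, doc in enumerate(docs):
        zs = [rng.randrange(num_topics) for _ in doc]
        assignments.append(zs)
        for word, z in zip(doc, zs):
            doc_topic[d][z] += 1
            topic_word[z][word] += 1
            topic_total[z] += 1

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, word in enumerate(doc):
                # Remove the current assignment from the counts.
                z = assignments[d][i]
                doc_topic[d][z] -= 1
                topic_word[z][word] -= 1
                topic_total[z] -= 1

                # Weight each topic by how much this document uses it and
                # how much the topic uses this word.
                weights = [
                    (doc_topic[d][k] + alpha)
                    * (topic_word[k][word] + beta)
                    / (topic_total[k] + vocab_size * beta)
                    for k in range(num_topics)
                ]
                z = rng.choices(range(num_topics), weights=weights)[0]

                # Record the newly sampled assignment.
                assignments[d][i] = z
                doc_topic[d][z] += 1
                topic_word[z][word] += 1
                topic_total[z] += 1

    # Report the most frequent words assigned to each topic.
    return [
        [word for word, count in topic_word[k].most_common(top_n) if count > 0]
        for k in range(num_topics)
    ]


# Made-up sample documents, already split into lowercase words.
sample_docs = [
    "chimney fire creosote fire safety".split(),
    "creosote buildup chimney fire hazard".split(),
    "masonry repair brick mortar crown".split(),
    "crown repair mortar brick chimney".split(),
]
topics = lda_top_words(sample_docs, num_topics=2, top_n=3)
```

Each entry in `topics` is a short list of words, which is the same kind of output the GUI tool gives you, just on a much smaller scale.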

So I got to researching. The two tools I found which allowed me to quickly test this idea were a content scraper by Kimono and a Topic Modeling Tool I found on code.google.com.

Scrape Content With Kimono

Kimono has an easy-to-use web application that uses a Chrome extension to train the scraper to pull certain types of data from a page. You can then give Kimono a list of URLs that have similar content and have it return a CSV of all the information you need.

Training Kimono is easy; data selection works similarly to the magnifying glass feature of many web dev tools. For my purposes I was only interested in the header tag text and body content. (Kimono does much more than this; I recommend you check them out.) Kimono’s video about extracting data will give you a better idea of how easy this is. When it’s done, Kimono gives you a CSV file you can use in the topic modeling tool.
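If you would rather script this step, the same kind of extraction (header tag text plus body content) can be sketched with Python's built-in `html.parser`. This is a stand-in for illustration, not how Kimono works internally. The class and function names are hypothetical, and it assumes body copy lives in `<p>` tags.

```python
from html.parser import HTMLParser


class HeadingBodyParser(HTMLParser):
    """Collects text from heading tags and paragraph tags."""

    HEADINGS = {"h1", "h2", "h3"}

    def __init__(self):
        super().__init__()
        self.headings = []
        self.paragraphs = []
        self._current = None   # tag currently being captured, if any
        self._buffer = []      # text pieces seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS or tag == "p":
            self._current = tag
            self._buffer = []

    def handle_data(self, data):
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            # Collapse runs of whitespace into single spaces.
            text = " ".join("".join(self._buffer).split())
            if text:
                target = self.headings if tag in self.HEADINGS else self.paragraphs
                target.append(text)
            self._current = None


def extract_content(html):
    """Return the heading text and joined paragraph text for one page."""
    parser = HeadingBodyParser()
    parser.feed(html)
    parser.close()
    return {"headings": parser.headings, "body": " ".join(parser.paragraphs)}


# Made-up page markup for illustration.
page = "<h1>Chimney Fires</h1><div>menu</div><p>Creosote <b>builds</b> up.</p>"
content = extract_content(page)
```

Run it over every article and write the results to a CSV, and you have the same kind of input file for the topic modeling tool.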

Compile a List of URLs with Screaming Frog

Next I needed a list of URLs for Kimono to scrape. Screaming Frog was the easy solution for this. I had Screaming Frog pull a list of articles from the client’s blog, then I plugged those into Kimono. You could also use the page path report from Google Analytics.
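If your crawl export contains more than just blog articles, a few lines of Python can trim it down. This is a rough sketch that assumes the export is a CSV with the URL in a column named `Address` and that articles live under a `/blog/` path. Both are assumptions, so adjust them to match your own export and site.

```python
import csv
import io
from urllib.parse import urlparse


def blog_urls(csv_text, path_prefix="/blog/", url_column="Address"):
    """Return unique URLs whose path starts with path_prefix, in crawl order."""
    reader = csv.DictReader(io.StringIO(csv_text))
    seen = set()
    urls = []
    for row in reader:
        url = (row.get(url_column) or "").strip()
        if urlparse(url).path.startswith(path_prefix) and url not in seen:
            seen.add(url)
            urls.append(url)
    return urls


# Made-up export rows for illustration.
export = """Address,Status Code
https://example.com/,200
https://example.com/blog/chimney-caps,200
https://example.com/contact,200
https://example.com/blog/creosote,200
https://example.com/blog/chimney-caps,200
"""
article_urls = blog_urls(export)
```

For a real file, read it with `open(path, newline="", encoding="utf-8").read()` and pass the text in.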

Here is what that process looks like:

Map Topics With This GUI Topic Modeling Tool

Many of the topic modeling tools out there require some coding knowledge. However, I was able to find this Topic Modeling Tool housed on code.google.com. The development of this program was funded by the Institute of Museum and Library Services to Yale University, the University of Michigan, and the University of California, Irvine.

The institute’s mission is to create strong libraries and museums that connect people to information and ideas. My mission is to understand how strong my client’s content library is and how I can connect them with more people. Perfect match.

Download the program, then:

1. Upload the CSV file from Kimono into the ‘Select Input File or Dir’ field.

2. Select your output directory.

3. Pick the number of topics you would like to have it produce. 10-20 should be fine.

4. If you’re feeling like a badass you can change the advanced settings. More on that below.

5. Click Learn Topics.

Main Topic Modeling Interface

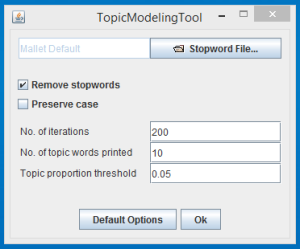

Advanced Settings Interface

Advanced Options

Besides the basic options provided in the first window, there are more advanced parameters that can be set by clicking the Advanced button.

Remove stopwords – If checked, remove a list of “stop words” from the text.

Stopword file – Read “stop words” from a file, one per line. Default is Mallet’s list of standard English stopwords.

Preserve case – If checked, do not force all strings to lowercase.

No. of iterations – The number of iterations of Gibbs sampling to run.

Default is:

– For T < 500, default iterations = 1000

– Else default iterations = 2*T

Suggestion: Feel free to use the default setting for number of iterations. If you run for more iterations, the topic coherence *may* improve.

No. of topic words printed – The number of most probable words to print for each topic after model estimation. Default is print top-10 words. Typical range is top-10 to top-20 words.

Topic proportion threshold – Do not print topics with proportions less than this threshold value. Good suggested value is 5%. You may want to increase this threshold for shorter documents.

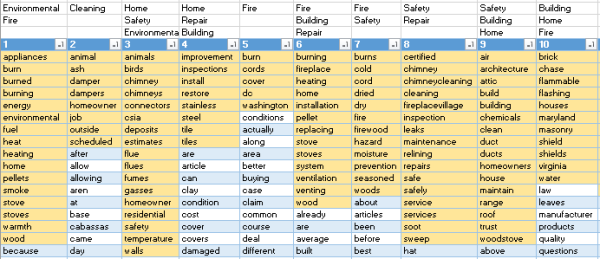
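To make the advanced options a little more concrete, here is a small sketch of what a few of them do. It is an illustration only, not the tool's code. The function names and the tiny stopword list are made up, and the iteration rule assumes T means the number of topics and that the cutoff reads "fewer than 500".

```python
import re

# Hypothetical mini stopword list; the tool's default is Mallet's English list.
SAMPLE_STOPWORDS = {"the", "a", "and", "of", "to", "in", "is"}


def preprocess(text, stopwords=SAMPLE_STOPWORDS, remove_stopwords=True,
               preserve_case=False):
    """Split text into words, mirroring 'Remove stopwords' and 'Preserve case'."""
    tokens = re.findall(r"[A-Za-z']+", text)
    if not preserve_case:
        tokens = [token.lower() for token in tokens]
    if remove_stopwords:
        tokens = [token for token in tokens if token.lower() not in stopwords]
    return tokens


def default_iterations(num_topics):
    """Assumed reading of the default: 1000 below 500 topics, otherwise 2*T."""
    return 1000 if num_topics < 500 else 2 * num_topics


def topics_to_print(proportions, threshold=0.05):
    """Drop topics whose proportion falls below the threshold (5% suggested)."""
    return {topic: p for topic, p in proportions.items() if p >= threshold}


words = preprocess("The Chimney and the Flue")
cased = preprocess("The Chimney and the Flue", preserve_case=True)
```

With 10 to 20 topics, the default under this reading works out to 1000 iterations, which is why leaving it alone is usually fine.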

Analyze The Output

The output of this raw data is a list of keywords organized into rows, each row representing a topic. To make analysis easier I transposed these rows into columns. Now I put my marketer hat on and manually highlighted every word in these topics that directly related to services, products, or the industry. That looks something like this:

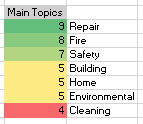
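If spreadsheets are not your thing, the transpose-and-highlight step can be sketched in Python. The topic words and the list of industry terms here are made up for illustration, and the function names are hypothetical. Picking the real industry terms is still a judgment call you make by hand.

```python
from itertools import zip_longest


def transpose(topic_rows):
    """Turn one-topic-per-row output into a grid where each topic is a column."""
    return [list(column) for column in zip_longest(*topic_rows, fillvalue="")]


def highlight(words, industry_terms):
    """Uppercase the words that relate to services, products, or the industry."""
    return [word.upper() if word in industry_terms else word for word in words]


# Made-up topic output: each inner list is one topic's top words.
topic_rows = [
    ["fire", "creosote", "year"],
    ["brick", "people", "mortar"],
]
industry_terms = {"fire", "creosote", "brick", "mortar"}

grid = transpose(topic_rows)
marked = [highlight(words, industry_terms) for words in topic_rows]
```

Each row of `grid` holds the nth word of every topic, so each topic reads down a column the way it does in the spreadsheet.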

Once I identified the keywords that most closely related to the client’s industry and offering, I eyeballed several themes that these keywords could fall under. I found themes related to Repair, Fire, Safety, Building, Home, Environmental, and Cleaning.

Once I had this list, I looked back through each topic column and added the themes I felt best matched the words above each LDA topic. That gave me a range at the top of my LDA topics which I could sum using the COUNTIF function in Excel. The result is something to the right.
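The COUNTIF tally is easy to reproduce outside Excel. In this sketch the theme names come from the list above, but the tags assigned to each topic are invented for illustration and are not the client's data. The function names are hypothetical too.

```python
from collections import Counter

THEMES = ["Repair", "Fire", "Safety", "Building", "Home", "Environmental", "Cleaning"]


def theme_counts(topic_themes, themes=THEMES):
    """Count how many topics were tagged with each theme (like COUNTIF)."""
    counts = Counter(theme for tags in topic_themes for theme in tags)
    return {theme: counts[theme] for theme in themes}


def weakest_themes(topic_themes, themes=THEMES):
    """Return the themes with the lowest count, i.e. the thinnest coverage."""
    counts = theme_counts(topic_themes, themes)
    lowest = min(counts.values())
    return [theme for theme in themes if counts[theme] == lowest]


# Invented tags: one list of themes per LDA topic column.
sample_tags = [
    ["Repair", "Safety"],
    ["Fire", "Safety", "Home"],
    ["Building", "Home"],
    ["Environmental", "Repair"],
]
counts = theme_counts(sample_tags)
gaps = weakest_themes(sample_tags)
```

Themes that land at the bottom of the tally are the ones to consider writing about next.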

Obviously this last part is far from scientific. The only thing remotely scientific about this is using Latent Dirichlet Allocation to organize words into topics. However, it does provide value. This is a real model rooted in math. I used actual blog content, not a list of keywords that came from a brainstorming session and Ubersuggest. And with a little intuition, I got an idea of the strengths and weaknesses of my client’s blog content.

Cleaning is a very important part of what my client does, yet it does not have much of a presence in this analysis. I have my next blog topic!

Something To Consider

LDA and topic modeling have been around for 11 years now, and most search-related articles about the topic appeared between 2010 and 2012. I am unsure why that is, as all of my efforts have been put toward testing the model. Moving forward, I will be digging a little deeper to make sure this is something worth pursuing. If it is, you can expect me to report on a more scientific application, along with results, in the future.