4 Ways To Export Your Google Analytics Data With R

Are you tired of spending half your day copying data out of the Google Analytics interface to update that same old report? Luckily, there are a lot of tools out there to help with this: Google Spreadsheets, Shufflepoint, and Tableau, just to name a few. One of my favorite free tools is R.

Wait, why are we talking about the alphabet?

R is a very powerful program for visualizing and analyzing data. It can also easily access the Google Analytics API. With just a few lines of code, you will have all of your Google Analytics data at your fingertips, ready for your stunning graphics, cutting edge analysis, or just to be dropped in a csv file.

So how do I do this?

Enable the Google Analytics API

First, you need to make sure the Google Analytics API settings are configured correctly. To do this:

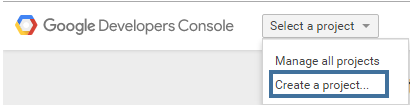

- Go to the Google Developers Console.

- Create a new project.

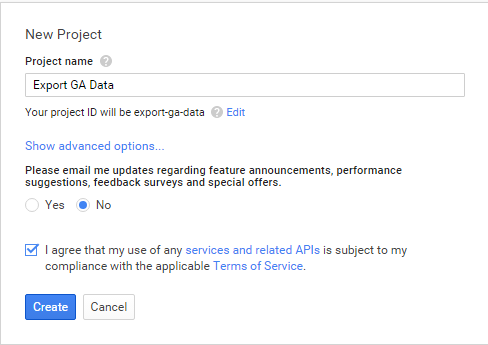

- Give your project a name, agree to the Terms of Service (after reading them of course), and create your project.

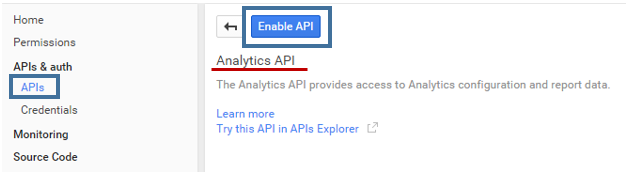

- Now select “APIs” in the left rail.

- Search for “Analytics API” and click on the result.

- If you see a blue button with the words, “Enable API,” click on it. If the button says “Disable API,” the API is already enabled.

Download R and RStudio

Next, you need to download R and RStudio. (RStudio is essentially the user interface for R, so that is what I will refer to from here on out). When installing, choose all of the default options unless you have a reason not to. Once those are finished downloading, open RStudio.

Download a Package for Accessing the API

In addition to R, you need to download an extension for accessing the Google Analytics API. In R lingo, these are called packages – they are basically the R equivalent of an Excel add-in. There are several options, but the package I am going to use is called RGA. To download the package, run the commands:

#install devtools package for downloading packages from github

install.packages("devtools")

library(devtools)

#install curl for easier use

install.packages("curl")

library(curl)

#installing rga package from github

install_github("skardhamar/rga")

Now, we need to tell RStudio that we want to use this package by using the command:

library(rga)

Note that you only have to download RGA once, but every time you open RStudio, you will need to run the library(rga) command to tell RStudio that we want to use that package.

Authenticate

Now you need to authenticate, which is basically telling Google Analytics that you have a right to access this data. Run the command:

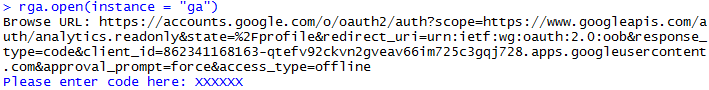

rga.open(instance = "ga")

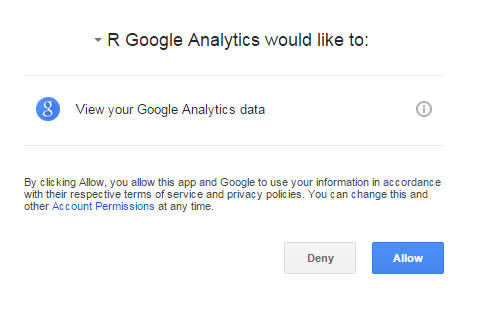

A web browser should pop up asking you to log into Google Analytics (if you aren’t already) and confirm that the application can access your data. Click “Allow”.

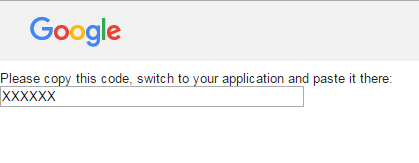

Now you will see a long list of numbers and letters:

Copy this code and paste it into the console in RStudio:

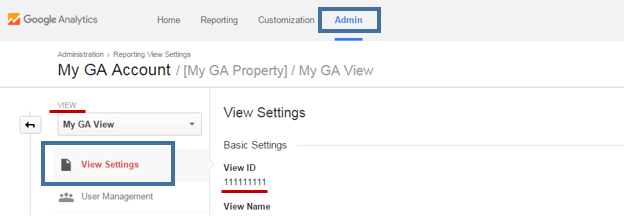

Choose Your View

The Google Analytics API allows you to export data from one View at a time. You need to decide which View you wish to retrieve data from and communicate that to RStudio by passing in the View ID. You can find this information in the Admin section of Google Analytics under View Settings.

Store this information in a variable called id:

id <- "111111111"

Get that Data!

Finally, it’s time to retrieve the data. Try running:

ga$getData(id)

You will see the number of users, sessions, and pageviews to your site during the past week. You can customize this data export by adding additional information to the ga$getData command using the following parameters:

batch: This is automatically set to TRUE. The batch parameter allows you to get around Google’s limit of pulling 10,000 observations per pull.

walk: Setting this to TRUE will allow you to avoid sampling by pulling you Google Analytics data by increments of 1 day. Only use this functionality if it is need though, as using the walk attribute will query the API many times, increasing the time it takes to pull the data as well as using up your API quota. Additionally, if you data is still sampled on the one day level, this functionality will not completely eliminate sampling.

start.date: Start date for your data export. This should be formatted as as.Date("YYYY-MM-DD"), for example, as.Date("2015-07-01").

end.date: End date for your data export. This should be formatted as as.Date("YYYY-MM-DD"), for example, as.Date("2015-07-01").

metrics: A list of the metrics that you wish to pull. You can have up to 10 metrics.

dimensions: A list of the dimensions that you wish to pull. You can have up to 7 dimensions.

sort: (optional) Sort the data by a specific dimension or metric

filters: (optional) Add filters to your data.

segment: (optional) Pull data from a specific segment. This can be either a segment already created in Google Analytics, or you can create it here.

start: (optional) Pull data starting from this row of data

max: (optional) maximum number of results to return

For a more complicated example, pull the total pageviews and entrances for each page, by date, from Sept 1 – Sept 30. Also, filter this data by only organic traffic in the United States and Canada. Finally, sort this data by number of pageviews, descending, and store the result in a variable called gaData.

gaData <- ga$getData(id, start.date = as.Date("2015-09-01"),

end.date=as.Date("2015-09-30"), metrics = "ga:pageviews,ga:entrances",

dimensions = "ga:date", filter = "ga:country=~United States|Canada;ga:medium==organic",

sort = "-ga:pageviews", start = 10)

Our Four Options

Now that we have the data, what should we do with it? Here are four quick options for getting the data out of R and into something we can use.

Export to CSV

This is your easiest option. You only need one line of code:

#write to csv file called My Data Export write.csv(gaData, "My Data Export.csv")

This will write the csv file to your working directory. If you do not know what this is, run the command:

getwd()

You can also explicitly select a file location like this:

#write to csv file called My Data Export to the folder myFolder write.csv(gaData, "C:/myFolder/My Data Export.csv")

Export to Excel

In general, I prefer writing data to a csv rather than to an .xlsx file. However, given the prominence of Excel in the business world, knowing how to read and write directly to .xlsx files is a huge time saver. Fortunately, there a number of great R packages to help us do this. I generally use xlsx.

Before you download the xlsx package, make sure that you have the latest version of Java Development Kit (jdk). Then run this code to install and load xlsx:

#install and load the xlsx package

install.packages("xlsx")

library(xlsx)

Now you can write your data to a workbook called My Data Export.xlsx and a sheet called Data.

#create a new workbook wb <- createWorkbook() #create a sheet named Data sheet <- createSheet(wb, sheetName = "Data") #add your data to the Data sheet addDataFrame(gaData, sheet, row.names = FALSE) #don't forget to save your workbook #this will save to your working directory saveWorkbook(wb, "My Data Export.xlsx")

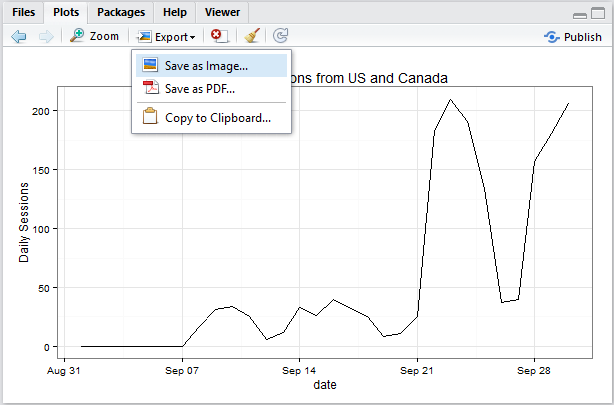

Export via a graphic

R is well known and loved in the statistical community for its ability to create powerful visualizations. There are a number of packages out there to help with this but ggplot is possibly the most well-known and loved. Here is some code to create a time series plot for organic sessions from the United States and Canada.

# install ggplot2 if you do not already have it

install.packages("ggplot2")

#load ggplot

library(ggplot2)

#pull GA data by date only

gaData <- ga$getData(id, start.date = as.Date("2015-09-01"),

end.date=as.Date("2015-09-30"), metrics = "ga:sessions",

dimensions = "ga:date", filter = "ga:country=~United States|Canada;ga:medium==organic")

#plot the data

ggplot(gaData, aes(date, sessions)) + geom_line() +

ylab("Daily Sessions") + theme_bw() +

ggtitle("Organic Sessions from US and Canada")

This will create a graph in RStudio that you can export as an image or a PDF.

Export to a database via an ODBC connection

R has a lot of support for connecting to databases. In particular, RODBC is a powerful, easy to use package for connecting to a database via an ODBC connection. This can help you integrate with Access databases on your local machine, company-wide data warehouses, and everything in between.

The following code will write your GA data into an Access Database. (You will first need to check that you have an ODBC connection set up to your database.

#install and load RODBC package - only needs to be run once

install.packages("RODBC")

#need to run this every time

library(RODBC)

#connect to an ODBC connection with a data source name of MS Access Database GA

channel <- odbcConnect("MS Access Database GA")

#save the data in a new table called GA_DATA_TABLE

sqlSave(channel, gaData, "GA_DATA_TABLE")There are also additional options for appending the data into an existing table or for changing the variable types.

And so much more!

One of R’s great strengths is that it has a huge following of brilliant developers and statisticians. As a result, there are packages for doing almost everything, even the most cutting edge machine learning techniques. If you have a specific reporting need, R probably has a solution. Here are a few more examples to get you started:

Dropbox

Google Docs

LaTex

HTML

Email