Five Practical Uses of the Adobe Experience Platform API

Adobe Experience Platform (AEP) follows an API-first design principle. Although Adobe has built a robust user interface on top of the APIs, several tasks can still only be performed through the API. Because the platform is so broad and so API-focused, knowing when to use the user interface (UI) versus the API can be intimidating. Let's look at five practical tasks in AEP that can only be completed using the API.

Before diving into the specifics, we recommend you follow Adobe's getting started walkthrough.

After completing the getting started walkthrough, you should have:

- A project created in Adobe I/O

- Credentials created (API Key, Client Secret, JWT)

- Postman Installed

- Adobe API Postman collections imported

- Ability to create an access_token using Postman

Monitoring & Researching Batch Errors

A Customer Data Platform (CDP) is only as good as the data ingested and stored inside it, and AEP is no exception. The initial setup of data ingestion is important, but the ongoing ingestion into the platform is equally important. As part of your AEP setup, you'll likely have numerous dataflows running on varying schedules, and eventually data ingestion errors will crop up. The UI provides a high-level view of ingestion errors and is a good place to start, but to get the full picture and diagnose the exact issue, you will need to use the API.

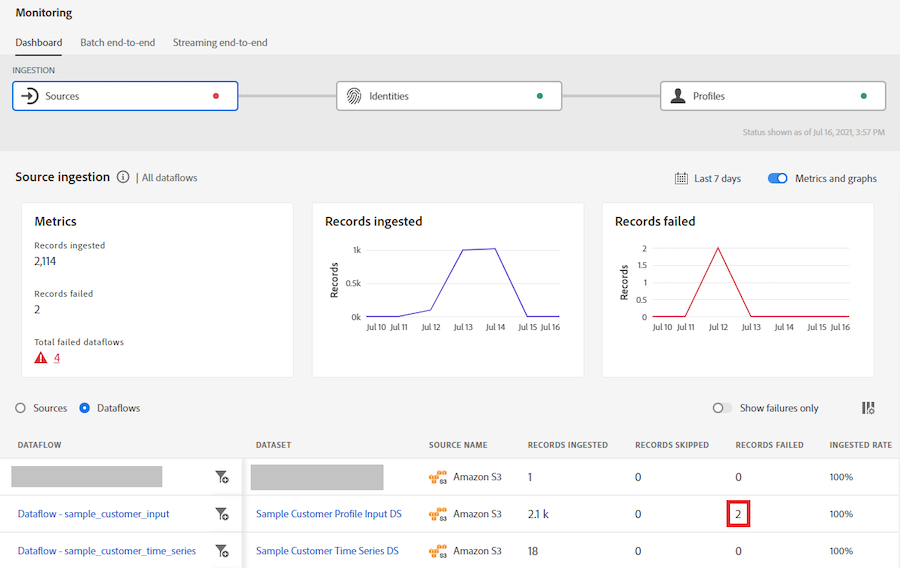

Let's start in the AEP UI and navigate to Data Management > Monitoring. You can view the monitoring data grouped by Sources or by Dataflows. In our example, we're viewing it by Dataflow, and we have a dataflow with two failed records.

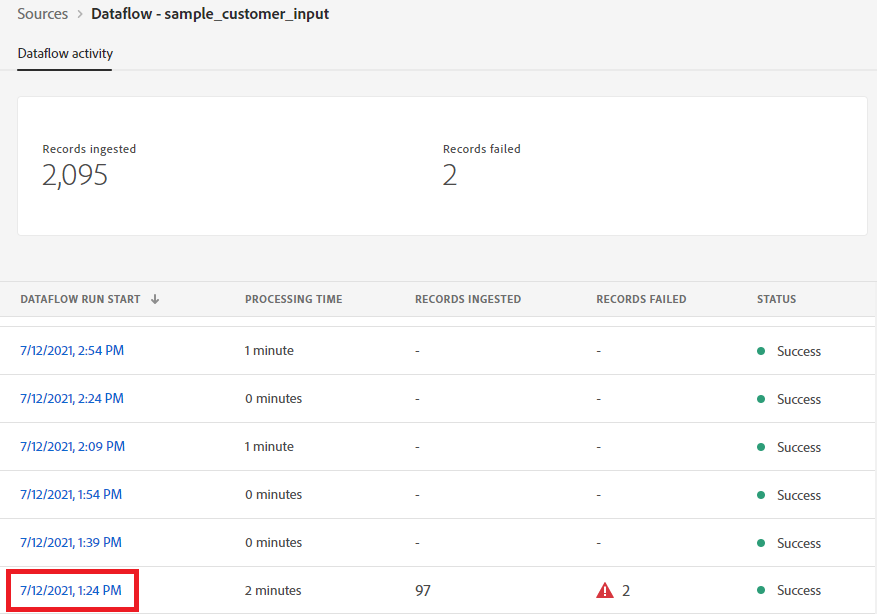

Clicking on the dataflow name takes us to the dataflow, where we can see its individual runs.

Once you find the specific run with the errors, click on its timestamp to open the Dataflow run details.

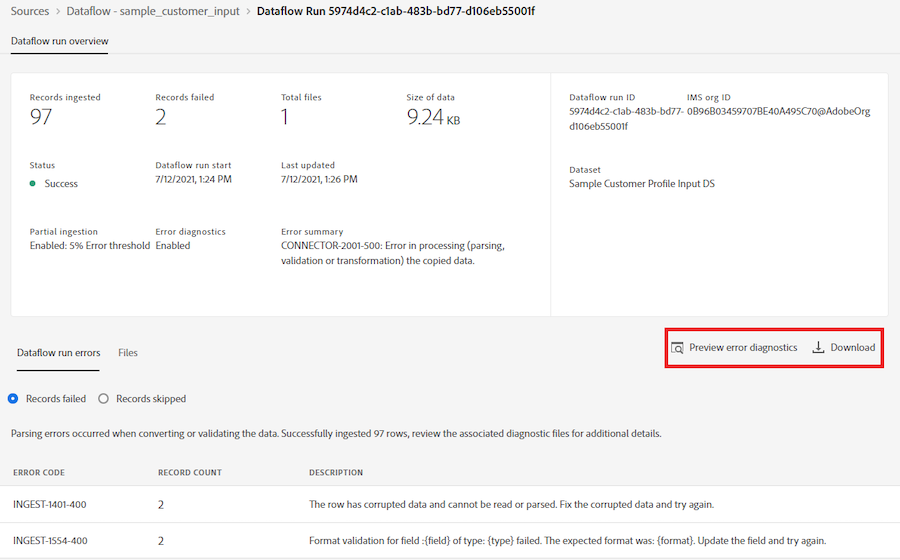

This will give you a summary of the errors encountered; however, it does not have the specifics needed to get to the root of the issues. Clicking on Preview error diagnostics gives us more information.

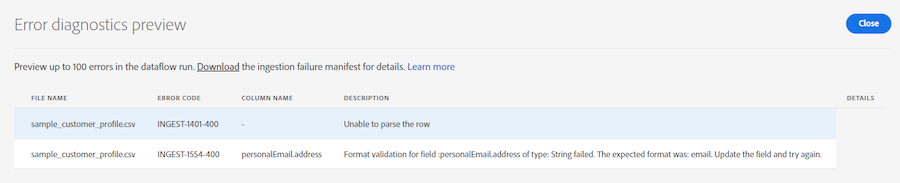

In this case, we had two different types of errors: one is a malformed email address, and the other is a parsing issue with few details.

Clicking on the Download link presents us with an API call using curl. As of writing, AEP does not let you download the failed records directly from the UI; instead, it steers you to the API, as in the example below.

    curl -X GET 'https://platform.adobe.io/data/foundation/export/batches/01FADZECRH1MP8KY1C2118D8W5/meta?path=row_errors' \
      -H 'Authorization: Bearer {ACCESS_TOKEN}' \
      -H 'x-api-key: {API_KEY}' \
      -H 'x-gw-ims-org-id: {IMS_ORG}' \
      -H 'x-sandbox-name: {SANDBOX_NAME}'

Running the curl command gives us a JSON response with href values pointing to the individual error files, but again no actual data.

    {
      "data": [
        {
          "name": "conversion_errors_0.json",
          "length": "1006787972",
          "_links": {
            "self": {
              "href": "https://platform.adobe.io:443/data/foundation/export/batches/01F8G996Q9VA6MRPQ92WTN95DV/meta?path=row_errors%2Fconversion_errors_0.json"
            }
          }
        },
        {
          "name": "parsing_errors_0.json",
          "length": "1622",
          "_links": {
            "self": {
              "href": "https://platform.adobe.io:443/data/foundation/export/batches/01F8G996Q9VA6MRPQ92WTN95DV/meta?path=row_errors%2Fparsing_errors_0.json"
            }
          }
        }
      ],
      "_page": {
        "limit": 100,
        "count": 2
      }
    }

To get the exact errors, we run curl again with one of the href values above and use the -o option to write the response to an output file.

    curl -X GET 'https://platform.adobe.io:443/data/foundation/export/batches/01F8G996Q9VA6MRPQ92WTN95DV/meta?path=row_errors%2Fparsing_errors_0.json' \
      -H 'Authorization: Bearer {ACCESS_TOKEN}' \
      -H 'x-api-key: {API_KEY}' \
      -H 'x-gw-ims-org-id: {IMS_ORG}' \
      -H 'x-sandbox-name: {SANDBOX_NAME}' \
      -o parsing_errors_0.json

Once that completes, which might take some time depending on the number of error records, we can see the exact rows that failed and begin triaging the source data issue.
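When a batch produces several error files, the two-step lookup above can be scripted. The following is a minimal Python sketch, assuming the listing response has the same shape as the sample JSON in this section. The function names `plan_error_downloads` and `download` are hypothetical helpers, not part of any Adobe SDK, and the headers you pass must carry your own token, API key, org ID, and sandbox name.

```python
import json
import shutil
import urllib.request


def plan_error_downloads(listing: dict) -> list:
    """Return (href, local_filename) pairs for every file in a row_errors listing."""
    plan = []
    for entry in listing.get("data", []):
        href = entry["_links"]["self"]["href"]
        plan.append((href, entry["name"]))
    return plan


def download(href: str, filename: str, headers: dict) -> None:
    """Equivalent of `curl -o`: stream one error file to disk (not called below)."""
    request = urllib.request.Request(href, headers=headers)
    with urllib.request.urlopen(request) as response, open(filename, "wb") as out:
        shutil.copyfileobj(response, out)


# Trimmed copy of the sample listing response shown above.
sample_listing = json.loads("""
{
  "data": [
    {"name": "conversion_errors_0.json",
     "_links": {"self": {"href": "https://platform.adobe.io:443/data/foundation/export/batches/01F8G996Q9VA6MRPQ92WTN95DV/meta?path=row_errors%2Fconversion_errors_0.json"}}},
    {"name": "parsing_errors_0.json",
     "_links": {"self": {"href": "https://platform.adobe.io:443/data/foundation/export/batches/01F8G996Q9VA6MRPQ92WTN95DV/meta?path=row_errors%2Fparsing_errors_0.json"}}}
  ]
}
""")

for href, filename in plan_error_downloads(sample_listing):
    print(f"{filename} <- {href}")
```

Calling `download(href, filename, headers)` for each pair would then write every error file locally, using the same four headers as the curl commands.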

Active Monitoring & Alerting

As shown in the prior example, AEP has a UI for monitoring ongoing data ingestion, but a common use case that comes up with AEP is: How can you proactively monitor without logging into AEP constantly? Adobe provides a few different options to do this.

Insight Metrics API: Summary of Events

The metrics API endpoint can provide summarized information on a variety of events in AEP. The events can be summarized by hour, day, month, etc., so this is best used to get a daily snapshot of information rather than real-time notifications. Let's walk through an example.

Note: There are two versions of this endpoint. V1 is a GET request that accepts its input as request parameters, and V2 is a POST request that accepts its input as a JSON body. For this example, we're using V2.

    curl -X POST 'https://platform.adobe.io/data/infrastructure/observability/insights/metrics' \
      --header 'Accept: application/json' \
      --header 'Content-Type: application/json' \
      --header 'Authorization: Bearer {ACCESS_TOKEN}' \
      --header 'x-gw-ims-org-id: {IMS_ORG}' \
      --header 'x-api-key: {API_KEY}' \
      --header 'x-sandbox-name: {SANDBOX_NAME}' \
      --data-raw '{
        "start": "2021-07-26T00:00:01.000Z",
        "end": "2021-07-27T00:00:00.000Z",
        "granularity": "day",
        "metrics": [
          {
            "name": "timeseries.ingestion.dataset.batchsuccess.count",
            "aggregator": "sum"
          },
          {
            "name": "timeseries.identity.dataset.recordfailed.count",
            "aggregator": "sum"
          },
          {
            "name": "timeseries.profiles.dataset.batchfailed.count",
            "aggregator": "sum"
          }
        ]
      }'

In this example, we've requested three different metric values: the dataset ingestion success count, the identity failed count, and the profile failed count, each summed per day.

As you can imagine, there are a lot of possibilities for building custom reporting from this data. However, it is not real-time, and it does not give you granular details on exactly which batches have issues that warrant investigation.
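For a scheduled daily snapshot, the JSON body of the V2 request can be generated rather than edited by hand. This is a minimal Python sketch that only builds the payload. The function name `daily_metrics_body` is hypothetical, and the sketch assumes a window that runs from midnight to midnight UTC (the sample request starts one second after midnight).

```python
import json
from datetime import date, datetime, time, timedelta, timezone


def daily_metrics_body(day: date, metric_names: list) -> dict:
    """Build a V2 metrics request body covering one UTC day, summed per day."""
    start = datetime.combine(day, time.min, tzinfo=timezone.utc)
    end = start + timedelta(days=1)
    fmt = "%Y-%m-%dT%H:%M:%S.000Z"
    return {
        "start": start.strftime(fmt),
        "end": end.strftime(fmt),
        "granularity": "day",
        "metrics": [{"name": name, "aggregator": "sum"} for name in metric_names],
    }


# Metric names copied from the sample request in this section.
body = daily_metrics_body(
    date(2021, 7, 26),
    [
        "timeseries.ingestion.dataset.batchsuccess.count",
        "timeseries.identity.dataset.recordfailed.count",
        "timeseries.profiles.dataset.batchfailed.count",
    ],
)
print(json.dumps(body, indent=2))
```

The printed JSON can be passed to curl with `--data-raw` or pasted into the Postman request body.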

Adobe I/O Events: Real-Time Event Stream

Using the metrics endpoint is a great way to retrieve summarized information. In addition to the metrics API endpoint, Adobe also lets you consume the underlying events in near real-time.

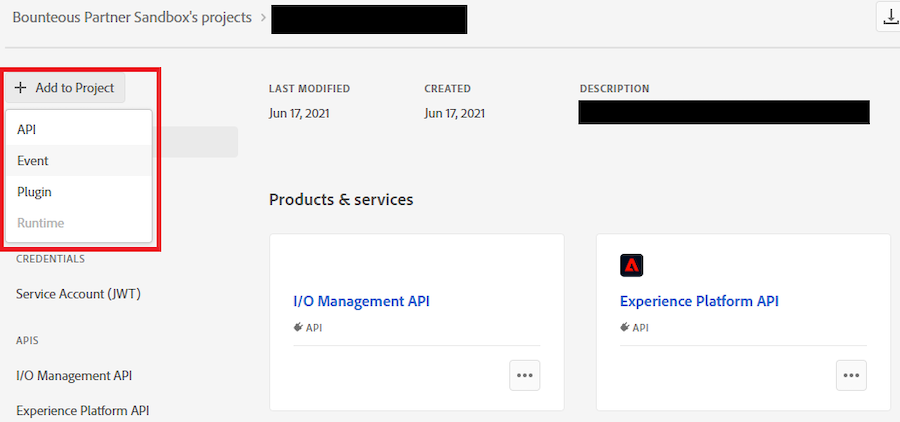

Start by going into the Adobe I/O console and navigating to the project you created in the getting started walkthrough.

Once in your project, click Add to Project.

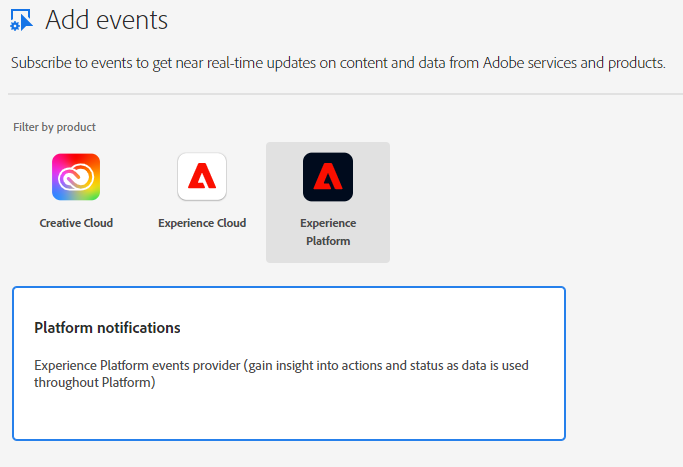

Select Experience Platform, then Platform Notifications.

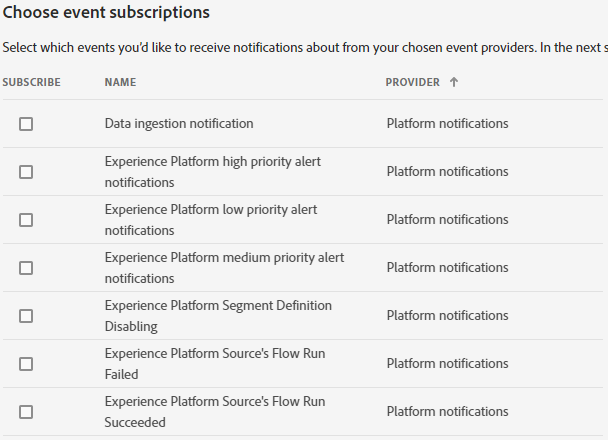

Note that Adobe is constantly adding more notification events to AEP; the list below is what is available as of writing. Select the events you want to subscribe to and proceed to the next page.

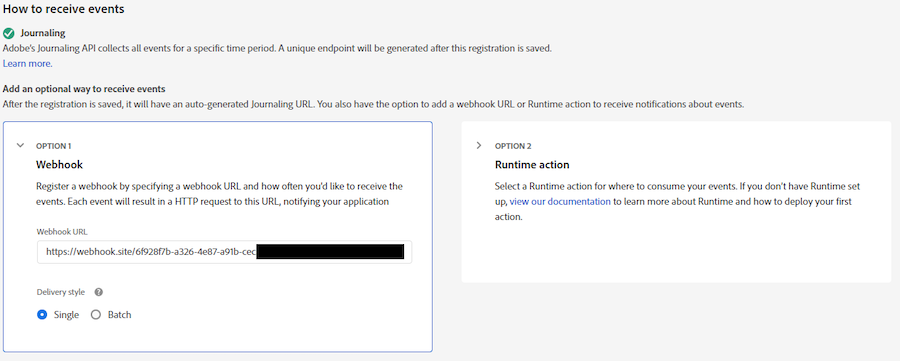

Next, we have a few different ways to receive the real-time notifications. Adobe provides a Journaling API from which you can pull the individual events as a journal. You can also provide a Webhook URL for Adobe to push events to, or create an Adobe Runtime function to consume the events.

For this example, we're going to create a basic webhook using webhook.site, just so we can see some samples of the event data. When you load webhook.site, it will create a unique webhook URL. See the Adobe Experience League documentation for details on setting up a webhook.
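Once a real endpoint replaces webhook.site, the handler usually needs only a few fields from each event to decide whether to alert. The following is a minimal Python sketch, assuming the payload shape of the sample batch ingestion event in this section (an `event` object carrying `xdm:eventCode`, `xdm:failedRecords`, `xdm:datasetId`, and `xdm:ingestionId`). The function name `triage_event` and the alert rule are hypothetical, and the "failure" payload is made up for illustration.

```python
from typing import Optional


def triage_event(payload: dict) -> Optional[str]:
    """Return an alert message for a problematic ingestion event, else None."""
    event = payload.get("event", {})
    code = event.get("xdm:eventCode", "")
    failed = event.get("xdm:failedRecords", 0)
    # Assumed rule: stay quiet only for a success code with zero failed records.
    if code == "ing_load_success" and failed == 0:
        return None
    return (
        f"dataset {event.get('xdm:datasetId')} "
        f"batch {event.get('xdm:ingestionId')}: "
        f"{code}, {failed} failed record(s)"
    )


# Trimmed version of the sample success event from this section.
ok_event = {
    "event": {
        "xdm:ingestionId": "01FCBCZ0AVKG6RK0D5XCGJWZRE",
        "xdm:datasetId": "60e8a96c93d633194a294bbd",
        "xdm:eventCode": "ing_load_success",
        "xdm:successfulRecords": 999,
        "xdm:failedRecords": 0,
    }
}
# Hypothetical variant of the same event with two failed records.
bad_event = {"event": dict(ok_event["event"], **{"xdm:failedRecords": 2})}

print(triage_event(ok_event))
print(triage_event(bad_event))
```

The returned message could then be forwarded to email, chat, or a ticketing system, whichever alerting channel your team already uses.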

Once set up, AEP will begin sending POST requests to the webhook for the subscribed events. Below is a sample of a batch ingestion success event.

    {
      "event_id": "cc448589-d0b8-407b-9365-d4ab5e1858af",
      "event": {
        "xdm:ingestionId": "01FCBCZ0AVKG6RK0D5XCGJWZRE",
        "xdm:customerIngestionId": "01FCBCZ0AVKG6RK0D5XCGJWZRE",
        "xdm:imsOrg": "XXXXXX@AdobeOrg",
        "xdm:completed": 1628175316022,
        "xdm:datasetId": "60e8a96c93d633194a294bbd",
        "xdm:eventCode": "ing_load_success",
        "xdm:sandboxName": "development",
        "xdm:successfulRecords": 999,
        "xdm:failedRecords": 0,
        "header": {
          "_adobeio": {
            "imsOrgId": "XXXXXX@AdobeOrg",
            "providerMetadata": "aep_observability_catalog_events",
            "eventCode": "platform_event"
          }
        }
      }
    }

Migrating XDM Schemas

An XDM schema can be created entirely through the UI, but what about deploying that same schema to a higher environment? For example, at the end of development, you need to migrate the schema to a test or production environment. If you are only using the UI, you would need to tediously re-create the schema by hand. Thankfully, there are endpoints purpose-built for this use case. You can export the schema as a JSON object and re-import that JSON object into another sandbox or an entirely different organization.

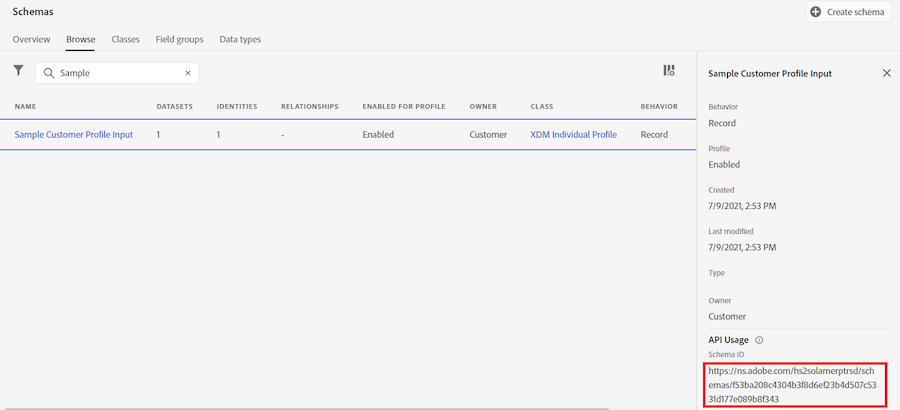

First, let's start with the export endpoint, which only requires a resource_id value. For a schema, this is the Schema ID, which you can find in the UI by navigating to Data Management > Schemas. Search for the schema and then highlight the row. The right side will show details about the schema, and at the very bottom, under API Usage, you will find the Schema ID.

Note: The Schema ID needs to be URL encoded first. You can use a simple site such as URL Encode online to encode the schema ID.
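If you would rather not paste IDs into a website, the encoding can be done locally. This is a minimal Python sketch using the standard library's `urllib.parse.quote`. The Schema ID shown is a made-up placeholder, so substitute the value from the API Usage panel.

```python
from urllib.parse import quote

# Hypothetical Schema ID for illustration only.
schema_id = "https://ns.adobe.com/mytenant/schemas/0123abcd"

# safe="" makes quote() encode "/" as well, so the whole ID fits in one path segment.
encoded_schema_id = quote(schema_id, safe="")
print(encoded_schema_id)
```

The `safe=""` argument matters: by default `quote` leaves `/` untouched, which would break the ID into several path segments.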

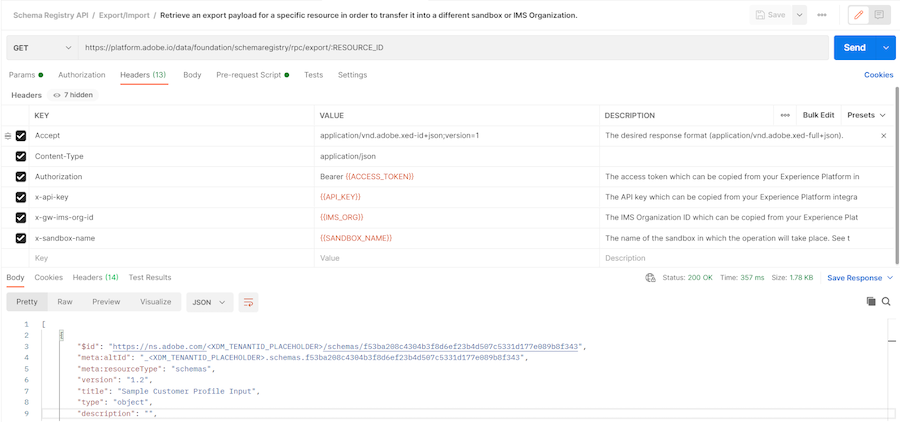

Using the Adobe Postman collections, this endpoint can be found under Schema Registry API > Export/Import > Retrieve an export payload.

Tip: As of writing, the Adobe Postman collection has an incorrect Accept header for the schema export endpoint. The value should be application/vnd.adobe.xed-id+json;version=1.

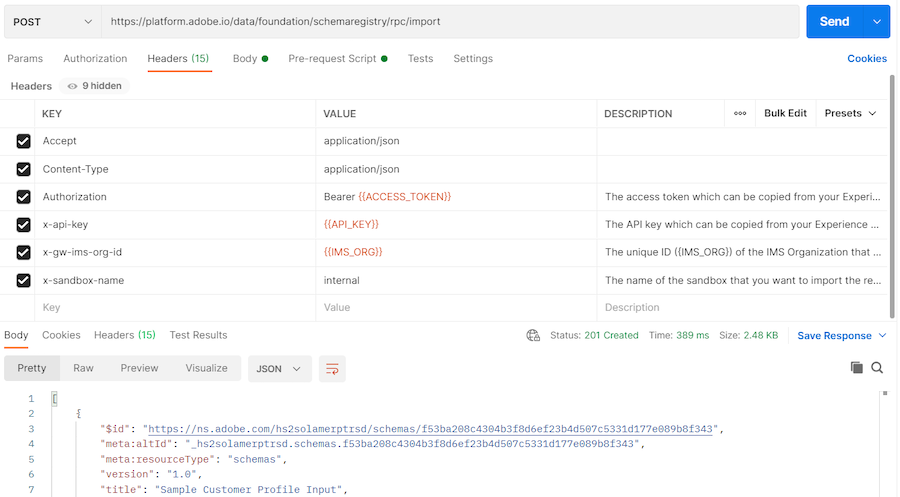

After receiving the response from the export, copy the JSON and paste it into the body of the import endpoint. Remember to change the x-sandbox-name header value to your destination sandbox. The JSON can also be used to import into a different IMS organization, though we find that migrating between sandboxes is the most common use case.
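Outside Postman, the same export-then-import flow boils down to two requests that differ mainly in the `x-sandbox-name` header. The following Python sketch only assembles the request descriptions and sends nothing. The `/rpc/export/{id}` and `/rpc/import` paths under the Schema Registry base URL are assumptions based on Adobe's Schema Registry API reference and should be verified against the Postman collection. The helper names and the sandbox names are hypothetical.

```python
import json
from urllib.parse import quote

# Assumed Schema Registry base path; confirm against Adobe's API reference.
BASE_URL = "https://platform.adobe.io/data/foundation/schemaregistry"


def auth_headers(sandbox: str) -> dict:
    """Headers shared by both calls; only the sandbox name changes."""
    return {
        "Authorization": "Bearer {ACCESS_TOKEN}",
        "x-api-key": "{API_KEY}",
        "x-gw-ims-org-id": "{IMS_ORG}",
        "x-sandbox-name": sandbox,
    }


def export_request(schema_id: str, source_sandbox: str) -> dict:
    """Describe the export call for a URL-encoded Schema ID."""
    headers = auth_headers(source_sandbox)
    headers["Accept"] = "application/vnd.adobe.xed-id+json;version=1"
    url = f"{BASE_URL}/rpc/export/{quote(schema_id, safe='')}"
    return {"method": "GET", "url": url, "headers": headers}


def import_request(export_payload, target_sandbox: str) -> dict:
    """Describe the import call that replays the export payload elsewhere."""
    headers = auth_headers(target_sandbox)
    headers["Content-Type"] = "application/json"
    return {
        "method": "POST",
        "url": f"{BASE_URL}/rpc/import",
        "headers": headers,
        "body": json.dumps(export_payload),
    }


# Hypothetical Schema ID and sandbox names.
exp = export_request("https://ns.adobe.com/mytenant/schemas/0123abcd", "development")
imp = import_request([{"placeholder": "export payload goes here"}], "production")
print(exp["url"])
print(imp["headers"]["x-sandbox-name"])
```

Keeping the sandbox name as an explicit argument makes it harder to import into the source sandbox by accident.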

Note: Although the import will do a lot of the work for you, it has limitations you should be aware of:

- It will only create a schema and will not apply incremental changes;

- It will create dependent Field Groups if required, but will not migrate other dependencies, such as new Identity Namespaces;

- It will not automatically enable the schema for profile.

Deleting Profile Data

Generally speaking, AEP is an additive platform which means there are limited options for deleting data once it has been ingested. From the UI, you can delete a batch of data from the underlying data lake, but that will not remove the Profile data. You can also remove the entire dataset using the UI, which will remove all the data from the data lake and related Profiles. However, that will remove all data associated with the dataset (think of it as dropping a table in SQL). Using the API, we have one more option, which is to remove a single profile at a time using either the profile ID or a linked identity value.

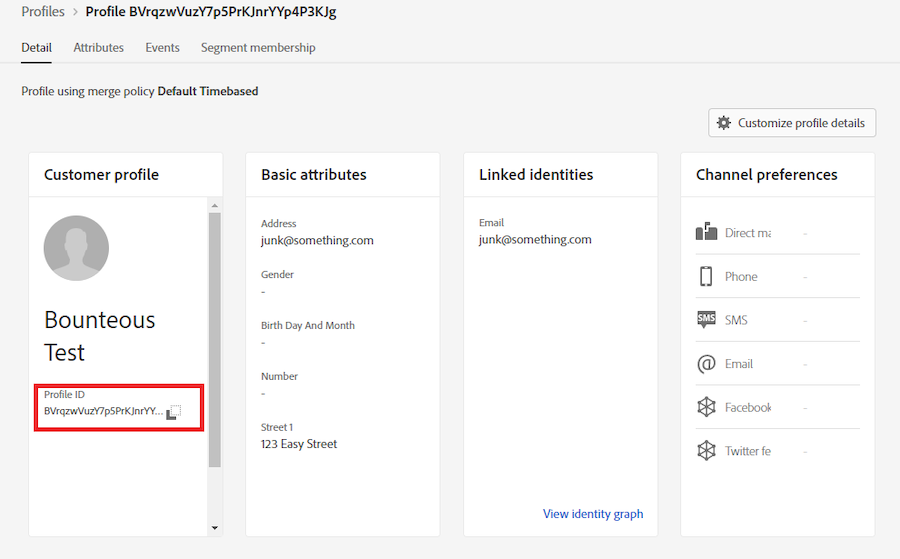

First, we'll start in the UI to look up the specific Profile that we want to delete. What we need from the profile is the "Profile ID" value.

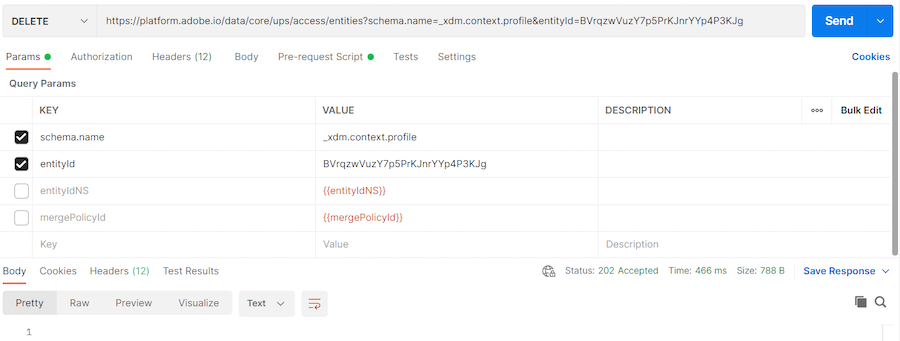

After looking up the Profile ID, we will use it as the entityId parameter to perform the delete. If you are using the Adobe Postman collections, you will find this request under Real-time Customer Profile API > Entities > Delete an entity by ID; it is also covered in Adobe's API documentation.

Pro-tip: It is not specified in the documentation, but the schema.name for this endpoint is the AEP class name. For a profile delete such as this example, the value is _xdm.context.profile.

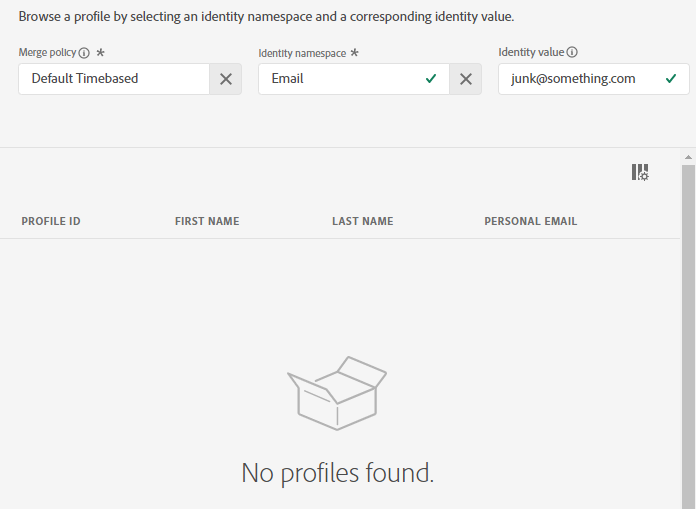

The delete happens immediately, and if we try to look up that profile again with the UI, no profiles will be found.

If deleting using the Profile ID, then only entityId needs to be populated. If deleting using a different identifier, e.g., email, then the entityIdNS (entityId NameSpace) also needs to be specified. For example, entityId = junk@something.com, entityIdNS = email will delete the profile associated with that email address.
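The two variants differ only in their query parameters, which this minimal Python sketch makes explicit. It builds the URL for the DELETE request and does not send it. The `/data/core/ups/access/entities` path is an assumption based on Adobe's Real-time Customer Profile API reference, and `profile_delete_url` and the `ABC123` Profile ID are hypothetical.

```python
from typing import Optional
from urllib.parse import urlencode

# Assumed Profile entities endpoint; confirm against the Postman collection.
ENTITIES_URL = "https://platform.adobe.io/data/core/ups/access/entities"


def profile_delete_url(entity_id: str, entity_id_ns: Optional[str] = None) -> str:
    """Build the URL for a DELETE request that removes a single profile."""
    params = {"schema.name": "_xdm.context.profile", "entityId": entity_id}
    # entityIdNS is only needed when entity_id is not the Profile ID itself.
    if entity_id_ns is not None:
        params["entityIdNS"] = entity_id_ns
    return f"{ENTITIES_URL}?{urlencode(params)}"


print(profile_delete_url("ABC123"))
print(profile_delete_url("junk@something.com", "email"))
```

Because the delete takes effect immediately, it is worth printing and reviewing the URL before sending the request.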

Exporting Dataflow Mappings

For custom input sources (e.g., batch ingestion of CRM data), you must create a custom mapping from the various source formats to the target Schema. For the initial setup, AEP has a UI walkthrough to create the mappings; however, those mappings cannot be exported from the UI and are therefore not shareable. Again, we can turn to the API to fill this gap.

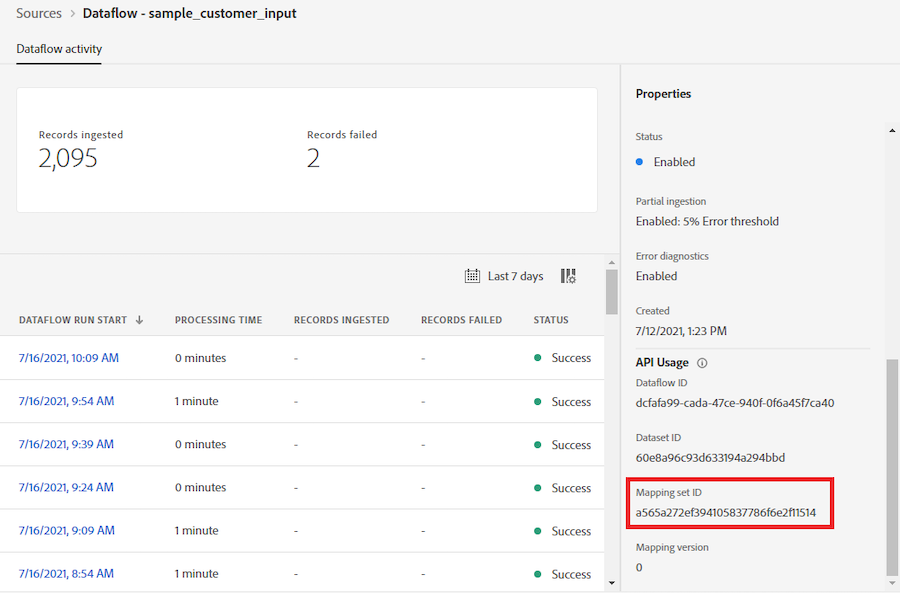

Start in the UI and navigate to the Dataflows section (Sources > Dataflows). Click on the Dataflow name, which should look similar to this.

On the right pane, scroll down to the very bottom and you'll find the API Usage section. What we're looking for here is the Mapping set ID.

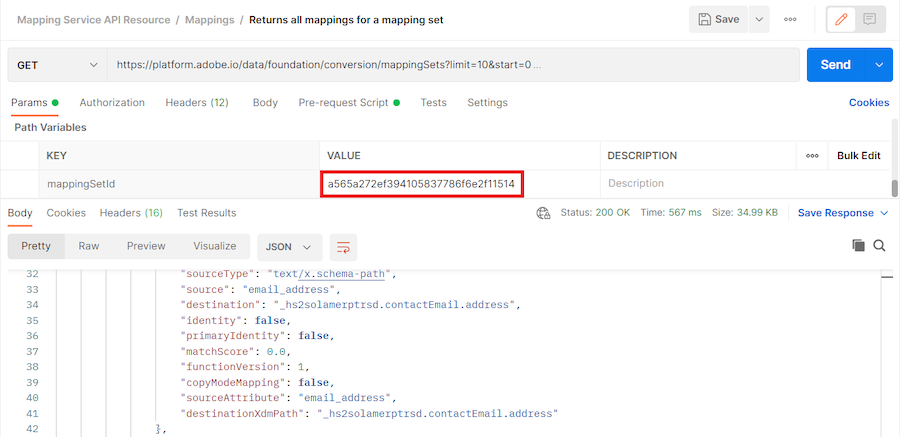

Using the /mappingSets endpoint (Mapping Service API Source > Mappings > Return all mappings for a mapping set, in the Adobe Postman Collections), fill in the Mapping set ID.

The response JSON contains source and destination elements, which together make up our data mapping. From here, you can transform the JSON into CSV using your favorite programming language or any of a number of free online tools.
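As one way to do that transformation, here is a minimal Python sketch using only the standard library. It assumes the response is a JSON array of objects that each carry `source` and `destination` keys. The function name `mappings_to_csv` and the sample field names are hypothetical, and any other keys in the response are ignored.

```python
import csv
import io


def mappings_to_csv(mappings: list) -> str:
    """Flatten mapping objects into a two-column CSV string."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["source", "destination"])
    for mapping in mappings:
        writer.writerow([mapping.get("source", ""), mapping.get("destination", "")])
    return buffer.getvalue()


# Made-up mappings standing in for the /mappingSets response.
sample_mappings = [
    {"source": "email_address", "destination": "personalEmail.address"},
    {"source": "first_name", "destination": "person.name.firstName"},
]

csv_text = mappings_to_csv(sample_mappings)
print(csv_text)
```

Writing `csv_text` to a file gives you a mapping document that can be shared or reviewed in a spreadsheet.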

Help Along Your AEP Journey

Those were five practical uses of the Adobe Experience Platform API. Almost anything that can be done via the user interface can also be accomplished with the API, so knowing when and where to use the API can be a challenge. We hope these five examples help you along your AEP learning journey!