Ideas for Dealing with Keyword (Not Provided)

If you’re an SEO practitioner, chances are you’ve heard that Google keyword data is likely going to be 100% (not provided). I’d love to provide you with a brilliant solution package to make your keyword (not provided) headache completely disappear. But, like most industry people (who aren’t completely fooling themselves), I can’t yet.

So here are 15 ideas on dealing with (not provided), plus a bunch of my opinions. Hope it helps. Big thanks to the entire LunaMetrics team for this one.

1. Employing a Rational Problem-Solving Framework

2. GWT Search Query data

3. AdWords Paid & Organic Report

4. Paid AdWords Data

5. AdWords Keyword Planner Data

6. Google Trends

7. Ask the User

8. Focus on Landing Pages

9. Non-Google Data

10. Provided Data

11. Historical Data

12. Statistical Extrapolation of Samples

13. New Solutions from Google?

14. Triangulating visits via volume, rankings, CTR

15. Paid Data Sources

1. Employing a Rational Problem-Solving Framework

There’s simply no magic bullet and no one-size-fits-all solution to 100% keyword (not provided). Instead, what we currently have is a complicated set of many different methods, each filling a small gap in the insight left by (not provided), and each with some deficiency. We also have a ton of hysteria and irrational responses in the search marketing community amplifying the confusion and noise.

So if that keyword data was an important part of your marketing decisions, it’s essential to think this thing through rationally.

Rand Fishkin did a good job framing the problems associated with the lack of Google keyword data in a Whiteboard Friday Tuesday video.

I provided my own 4-step framework in a Marketing Land article, which I’ll expand on here…

1. Frame the Problem

First, SEOs need to pin down the gaps in actionable insight they need to fill. Ask some deep questions.

What decisions do you and your clients make that rely on keyword data? What specific metrics and reports do you use to come up with the insights required to make those decisions?

Break this down into a set of problems, then prioritize the problems. Then you’ll be on the path to develop the right package of solutions.

For LunaMetrics, for example, it’s incredibly important for us to help clients have accurate and useful KPIs for SEO, because we want them to be able to properly quantify the ROI of SEO. We’ve preached a bit on the importance of using organic non-branded (onb) traffic for KPI metrics – onb visits and, ideally, onb conversion data (goal completions, goal completion value, and revenue). We really don’t want to define SEO success solely in terms of rankings or other data that can’t help clients determine ROI.

Additionally, knowing conversion rates and engagement for individual keywords helps us understand the value of specific keywords, so we can target search queries better and make site architecture and content decisions that give audience segments what they want.

Those are a few of the things that are very important to us. Figure out what matters to you.

2. Brainstorming

The second thing to do is to brainstorm solutions to your (not provided) problems. Hopefully, this article can help you generate some solid ideas.

3. Vetting Solutions

Next, you’ve got to determine how useful the ideas are. We’re still on that step ourselves – it’s very time-consuming and difficult. There are three factors that make a solution viable:

- The problems it solves. Yeah, duh, right? However, a solution that solves multiple problems, or a really important problem, becomes a higher priority. So the more actionable the insights it reveals, and the more robust the solution, the more time you should spend developing and refining it.

- Data accuracy. This means you have to perform tests, read the literature, and do whatever you can to prove that a solution is crunching out valid data you can make sound decisions with before adopting it.

- Scalability/practicality. The faster you can get insights, and the less labor involved, the better, right?

4. Continuous Improvement

We SEO practitioners need to keep checking our methods, keep reading the industry literature, and keep looking out for better ideas. The problem is too big to tackle alone. I can’t wait to see what people come up with, and how we all rise to this challenge.

2. GWT Search Query data

When I think about the dozens of articles I’ve read and people I’ve talked to about (not provided) solutions, Google Webmaster Tools’ Search Query Data appears to be the clear crowd favorite.

The big insight gap it fills is data on number of clicks from individual keywords to your site.

Unfortunately, the accuracy of the data has been hotly debated. For example, Portent and Distilled both knocked it, while Ben Goodsell felt the data was accurate. I bravely take a position in the middle.

I acknowledge the following limitations:

- You can’t pick the keywords you want to look at.

- The numbers are rounded.

- Only three months of data are shown (supposedly soon to be 12 months’ worth).

- The tool has had outages (including a recent one) in the past. And there’s nothing guaranteeing it will always be available in the future.

- A click in this report is technically different from a visit in Google Analytics.

- Only the top 2,000 queries for your site are shown. Update Nov 11, 2013: I had read in a few places that the cap was 2,000 queries, but that must be inaccurate or out of date, or I misinterpreted it. I’ve seen as many as 17,000+ queries shown via export, and have seen more than 2,000 queries shown many times. However, it is clear that not all queries are recorded. For example, the site with 17,000+ GWT queries had over 165,000 keywords in Google Analytics for the same time frame (and even that number is too small, since many additional keywords are (not provided)).

However, I think this is probably going to be the single best data point for gauging the quantity of traffic from individual keywords, and it’s definitely data you should be using.

Further, you can use the GWT API (here’s a Python method and a PHP method to download that data) and some Excel wizardry to automate this solution a bit.

I just wouldn’t put all your stock into this method alone.

3. AdWords Paid & Organic Report

Organic click data is now also shown in a new report inside the AdWords interface.

Popular opinion seems to be that this is the same data used to power GWT, but without the rounding. It’s worth noting that I’ve yet to see Google confirm exactly where this data comes from.

Regardless, it’s certainly worth setting up and looking into. One reason is the potential ability to see historical data for a longer time frame than GWT provides. A second great reason (as explained in a how-to by my sharp co-worker Stephen) is the ability to understand how to improve PPC/SEO synergy.

4. Paid AdWords Data

Of course, AdWords is also a powerful and accurate source of data on how well individual keywords convert. If you are spending anything on AdWords, the time to link it with Google Analytics and use that data to inform SEO decisions was yesterday.

5. AdWords Keyword Planner Data

AdWords’ Keyword Planner shows keyword volume, and if you examine the estimated volume for your branded terms, you have another data point to help verify validity when estimating your KPI organic non-branded visits.

One caveat is that branded search volume only equals your total branded visits if you are capturing 100% of the traffic from searches for your branded terms. Another caveat is that, again, clicks are not the same as visits. A third caveat is that you need to know the exact terms people use for branded searches.

At the very least, this data point can help verify trending in organic non-branded visits.
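As a rough illustration of that sanity check, here is a minimal Python sketch. The function name and the `capture_rate` input are hypothetical: `capture_rate` is your own assumption about what share of branded searches end in a visit to your site (1.0 means you capture all of them). Treat the result as a plausibility check on your onb estimate, not a measurement, given the caveats above.

```python
def estimate_non_branded_visits(google_organic_visits, branded_search_volume, capture_rate):
    """Rough onb estimate: Google organic visits minus estimated branded visits.

    capture_rate is an assumption you supply: the share of branded searches
    (e.g. from Keyword Planner) that you believe end in a visit to your site.
    """
    if not 0.0 <= capture_rate <= 1.0:
        raise ValueError("capture_rate must be between 0 and 1")
    estimated_branded_visits = branded_search_volume * capture_rate
    # Never report a negative number of non-branded visits.
    return max(google_organic_visits - estimated_branded_visits, 0.0)


# Hypothetical month: 10,000 Google organic visits, 3,000 branded searches,
# and an assumed 90% capture of branded traffic.
print(estimate_non_branded_visits(10_000, 3_000, 0.9))
```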

6. Google Trends

Speaking of which, Google Trends can also help you verify the trend in organic non-branded visits by showing the trend in branded search terms. Note that no actual visit data is provided.

7. Ask the User

So how do offline marketers figure out how people found their stores or their phone number? They ask them. They always have.

There are a few ways we could do that on the site, and these could theoretically be set to apply only when source=google or only on certain landing pages. One might be a subtle overlay (or pop-up – even though I hate those) that asks, “How did you find us today? Google – that’s interesting. What search terms did you use?” Or you could ask that question after a user completes a transaction or conversion.
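The “only when source=google, only on certain landing pages” rule could look something like the Python sketch below. Everything here is hypothetical: the function name, the `survey_paths` set, and the hostname heuristic (any host with a `google` label, such as `www.google.com` or `www.google.co.uk`) are illustrative assumptions, and the heuristic will also match non-search Google hosts. In practice this check might live in your site’s front-end code instead.

```python
from urllib.parse import urlparse


def should_show_search_survey(referrer, landing_path, survey_paths):
    """Decide whether to show a 'What search terms did you use?' prompt.

    survey_paths is a hypothetical set of landing-page paths you want
    feedback on. The Google check is a simple hostname heuristic.
    """
    host = (urlparse(referrer).hostname or "").lower()
    from_google = "google" in host.split(".")
    return from_google and landing_path in survey_paths


print(should_show_search_survey("https://www.google.co.uk/", "/pricing", {"/pricing"}))
```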

You could also use surveys to ask users (particularly your best segments of users, since you want to figure out how to get more like them) via e-mail. This is totally an old-school, tried-and-true market research technique.

8. Focus on Landing Pages

I spent most of last week at SMX East, and one of the big things I kept hearing about was how much more the SEO community should be focusing on landing pages.

I mean, all the keyword-level metrics most obscured by (not provided) – conversion rates, per-visit value, revenue, bounce rate, time on site, and pages viewed – are still there in the landing page dimension, you know?

Let’s use that data to make the user experience better for inbound visitors.

So, as long as you have a general idea of what people are looking for when they land on a given page, and you’re targeting a specific need with specific content on an individual page, chances are you’re going to get some real actionable insight by looking at the metrics for that page. You’ll be able to figure out if you need to make the page better, if the page was targeting the right users, if the content was relevant, if you should promote the page more, and if you should make more pages like it.

All of which are the same actionable insights SEOs often use keyword data for.
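Google Analytics already reports these metrics by landing page, but if you work with exported rows (for example, to join them with your own landing-page map), a minimal Python sketch of the aggregation might look like this. The row format, with keys `landing_page`, `bounced`, `converted`, and `revenue`, is a hypothetical one chosen for illustration.

```python
from collections import defaultdict


def landing_page_metrics(sessions):
    """Aggregate per-landing-page rates from session rows.

    Each row is a dict with hypothetical keys: 'landing_page',
    'bounced' (bool), 'converted' (bool) and 'revenue' (float).
    """
    totals = defaultdict(lambda: {"visits": 0, "bounces": 0, "conversions": 0, "revenue": 0.0})
    for s in sessions:
        t = totals[s["landing_page"]]
        t["visits"] += 1
        t["bounces"] += int(s["bounced"])
        t["conversions"] += int(s["converted"])
        t["revenue"] += s["revenue"]

    # Turn raw counts into the rates SEOs usually look at per keyword.
    return {
        page: {
            "visits": t["visits"],
            "bounce_rate": t["bounces"] / t["visits"],
            "conversion_rate": t["conversions"] / t["visits"],
            "per_visit_value": t["revenue"] / t["visits"],
        }
        for page, t in totals.items()
    }
```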

Further, you should be mapping your landing pages by whom they specifically target. You can map many elements to each page, such as specific known keywords, ideal keywords you’re targeting, and the specific user needs or queries the page addresses. That way, when you examine landing page data, you’ll have a better understanding of how people got to a page.

9. Non-Google Data

Speaking of those engagement and conversion metrics, they’re all still there for the other search engines (remember Bing and Yahoo?), at the keyword level too, along with visits. And that data is really valuable. Look at it, and use it to try to predict what’s going on with your Google traffic.

10. Provided Data

Another source of the same metrics as #9 is the little bit of data on Google keywords you still have. Use it while you can, and do your best to see how representative that is of overall Google data.

11. Historical Data

And let’s not forget about our historical Google data. That data is still going to be valuable for several months (maybe more in some cases) in determining the per-visit value of keywords, as well as a baseline from which to benchmark future performance.

12. Statistical Extrapolation of Samples

So the big problem with ideas #9, 10, and 11 is that they’re just little pieces of the picture, not the whole thing, right?

Well, we can use statistics to assess how well those little pieces represent the big picture.

It’s stuff offline marketers have been doing forever.

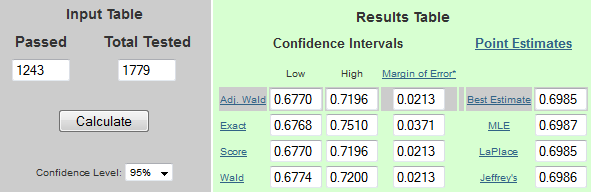

For example, generating confidence intervals for binomial distributions is actually pretty easy. Here’s an online tool for that, in fact. All you need to do is plug two numbers in. Yup, just two.

In fact, many GA metrics – like bounce rate, branded vs. non-branded visits, and conversion rate – can be modeled as binomial proportions, because each visit can be only one of two things: a bounce or not a bounce, a branded visit or not, a conversion or not a conversion, etc…

Using that tool, for example, I took a sample of 1,779 known (provided) Google visits, 1,243 of which were non-branded. I found that we can be 95% sure (barring sample bias, discussed below) that the share of non-branded visits in Google organic traffic is between 67.7% and 71.96%. Let’s say we have 10,000 total organic visits: then we’re 95% sure (barring sample bias) that we received between 6,770 and 7,196 organic non-branded visits. Not too hard.
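The post doesn’t say which method that online tool uses. One standard choice for binomial proportions is the Wilson score interval; the minimal Python sketch below (the function name is our own) implements it and, on the sample above, reproduces the 67.7% to 71.96% range and the 6,770 to 7,196 visit estimate. Keep in mind it only accounts for sampling error, not sample bias.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score confidence interval for a binomial proportion.

    z = 1.96 corresponds to roughly 95% confidence.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


# 1,243 non-branded visits out of 1,779 provided Google visits.
low, high = wilson_interval(1243, 1779)
print(f"{low:.2%} to {high:.2%}")                  # 67.70% to 71.96%
print(round(10_000 * low), round(10_000 * high))  # 6770 7196
```

Automating this would mostly mean pulling the two counts (non-branded provided visits and total provided visits) for each period and feeding them through a function like this.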

Even cooler is that we can theoretically automate this method by using the Google Analytics API and incorporating the data and the number-crunching right in Excel.

Confidence levels and intervals can be crunched out for non-binomial distributions like revenue and avg. time on site as well – it’s just more complex, and I believe it requires every data point in the sample. But theoretically, this can still be practical if one has a solid statistics foundation and API skills.
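For a metric like revenue per visit or time on site, one common approach is a large-sample (normal-approximation) interval around the mean. The Python sketch below is an illustration under that assumption, with a hypothetical function name; it computes the sample mean and standard deviation from the individual values, and small or heavily skewed samples (revenue often is) may call for a t-distribution or a bootstrap instead.

```python
import math
import statistics


def mean_confidence_interval(values, z=1.96):
    """Normal-approximation confidence interval for a mean (~95% at z=1.96).

    Needs the individual data points to estimate the standard deviation.
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values")
    mean = statistics.mean(values)
    std_err = statistics.stdev(values) / math.sqrt(n)
    return mean - z * std_err, mean + z * std_err


# Hypothetical revenue-per-visit values from a sample of provided visits.
print(mean_confidence_interval([0.0, 0.0, 42.5, 0.0, 19.9, 0.0, 88.0, 0.0]))
```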

Sample Bias: The real challenge in estimating Google Analytics data from a sample is making sure there is no sample bias, which is when the sample is not representative of the population. For example, are the non-branded share of organic visits and the conversion rate for Bing/Yahoo really the same as for Google? Is your historical conversion rate for a keyword really the same as it is now? These things need to be checked (probably for every client) before relying on any estimate based on a sample. One way to check: go back to before (not provided) and compare Bing/Yahoo organic non-branded visits and conversion rates with Google’s, to test for sample bias in that method for those two metrics.
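One way to make that before-and-after comparison more rigorous is a pooled two-proportion z-test, sketched below in Python. This is a standard technique swapped in for illustration, not necessarily what the post has in mind; the function name and all counts are hypothetical. A large |z| suggests the two shares really differ (a warning sign for bias), while a small |z| only means no difference was detected, not that the samples are proven equivalent.

```python
import math


def two_proportion_z(successes_a, n_a, successes_b, n_b):
    """Pooled two-proportion z statistic under H0: both rates are equal.

    |z| > 1.96 suggests the rates differ at roughly the 95% level.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    variance = pooled * (1 - pooled) * (1 / n_a + 1 / n_b)
    if variance == 0:
        raise ValueError("rates of exactly 0% or 100% give no usable variance")
    return (p_a - p_b) / math.sqrt(variance)


# Hypothetical pre-(not provided) counts: non-branded visits / organic visits.
z = two_proportion_z(700, 1000, 6900, 10000)  # Bing/Yahoo vs. Google
print(abs(z) > 1.96)  # True would suggest the shares differ (possible bias)
```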

All I know is that – while I’m no statistics guru (although I did take it twice in college ;p) – I realize now that we as an industry really need to start investing a lot more in the use of statistics.

13. New Solutions from Google?

A bunch of the LunaMetricians attended the Google Analytics Summit last week, and the word on the street is that Google Analytics will announce something on their blog this week that will help those hurt by (not provided). That’s all we know, so I have no idea how helpful it’ll be, but I do know it’s worth keeping an eye on the GA blog for this upcoming post.

14. Triangulating visits via volume, rankings, CTR

Theoretically, if you know 1) how often people search for a keyword, 2) where you rank on the SERPs for that keyword, and 3) the estimated click-thru rate for your ranking position, you can nail down a good estimate of how many Google visits you’ll get, without Google Webmaster Tools or Google Analytics data.
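In its simplest form, that triangulation is just volume times the expected CTR for your position. The Python sketch below shows the arithmetic; the CTR curve and the `serp_multiplier` adjustment are placeholders invented for illustration, not figures from any CTR study, and choosing them well is exactly the hard part discussed next.

```python
def estimate_visits(monthly_volume, position, ctr_by_position, serp_multiplier=1.0):
    """Estimated visits = search volume x expected CTR at your position.

    ctr_by_position and serp_multiplier are assumptions you must supply.
    Positions missing from the curve are treated as getting no clicks.
    """
    ctr = ctr_by_position.get(position, 0.0)
    return monthly_volume * ctr * serp_multiplier


# Placeholder CTR curve for illustration only (NOT from any published study).
PLACEHOLDER_CTR = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}

# Hypothetical keyword: 1,000 searches/month, ranking #2, on an ad-heavy SERP
# that we assume cuts organic CTR by 20%.
print(estimate_visits(1_000, 2, PLACEHOLDER_CTR, serp_multiplier=0.8))
```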

It seems like a few companies, particularly SEO software providers, are attempting to utilize this method. In practice, however, it gets pretty darned tricky to nail down a good estimate.

First, you have to get the rankings data right, and we know that can be easier said than done due to natural fluctuations as well as personalization (including the geographic personalization that occurs even when you are not signed in). Second, you’ve got to get the volume data right, which always has its own set of accuracy questions.

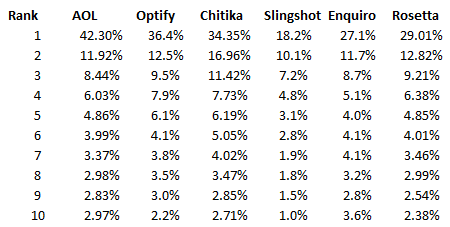

Then you’ve got to get the projected click-thru rate right, and this is freaking hard. Baseline average click-thru rates by position vary a great deal depending on who you ask, as you can see in this comparison of various CTR/rankings studies.

Even if you have a baseline you trust, you then have to deal with the fact that there are a million SERP scenarios, each of which would have a different baseline CTR spread, largely due to how much real estate is taken up by various paid and organic listings. Below are a few such SERP scenarios:

- SERPs with no ads versus 3 ads or 8 ads

- SERPs with image results

- SERPs with Knowledge Graph results

- SERPs where results have sitelinks

- SERPs with a local carousel

- plain SERPs with nothin’ fancy, etc…

And then, if you can actually manage to account for all that stuff, you have to deal with variance in the click-thru rate attributed to your listing itself. Many factors are at play here too, like:

- your brand equity and how that impacts CTR

- any kind of rich snippets in your listing that would increase CTR

- the strength of your Meta description

- how well your listing matches what people are looking for

There are just sooo many factors at play here that any model relying on estimating CTR would have to be very complex. And I’m highly skeptical that any of the current public solutions relying on triangulation of just volume, rankings, and CTR are sophisticated enough to be accurate.

However, if you add one or two other data types to the mix, you might get really close. For example, if you are able to see the rankings for the keywords that would account for the majority of the traffic to a landing page, you could factor in the Google visits to that landing page (which is a known quantity) and divvy that up amongst your known keywords based on your triangulated data to get a closer estimate on visits.
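A minimal Python sketch of that divvying-up step, assuming proportional allocation (one reasonable choice, not necessarily the only one): each keyword gets a share of the landing page’s known Google visits in proportion to its triangulated estimate. The function name and keyword figures are hypothetical, and the approach assumes the listed keywords account for essentially all of the page’s Google traffic; if they don’t, it will over-credit them.

```python
def allocate_landing_page_visits(known_page_visits, triangulated_estimates):
    """Split a landing page's known Google visits across its keywords,
    in proportion to each keyword's triangulated visit estimate."""
    total_estimate = sum(triangulated_estimates.values())
    if total_estimate <= 0:
        raise ValueError("need at least one positive estimate")
    return {
        keyword: known_page_visits * estimate / total_estimate
        for keyword, estimate in triangulated_estimates.items()
    }


# Hypothetical page: 200 known Google visits; triangulation guessed 30 and 10.
print(allocate_landing_page_visits(200, {"blue widgets": 30, "widget prices": 10}))
```

The known landing-page total acts as a constraint, so errors in the CTR baseline that affect all of the page’s keywords equally cancel out; only the relative estimates matter.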

And then you could theoretically take this even further by combining it with other methods I’ve mentioned, for even better accuracy and insight.

15. Paid Data Sources

There are a few SEO software providers that already claim to be able to solve the 100% (not provided) riddle, but I have no idea exactly how they’re projecting data or even exactly which data points they’re using, so I can’t speak to their accuracy or to how much insight they’ll provide that you can’t figure out yourself.

At SMX, SEOClarity was the loudest voice regarding (not provided). Other software providers claiming a (not provided) solution include Conductor, BrightEdge, and GShift Labs.

Of course, we already mentioned a certain notorious paid tool called Google AdWords.

SEMRush and SpyFu are two other reasonably priced (starting at around $70-80/month) tools which can estimate organic visits by keyword. I’m more familiar with SpyFu (we use it regularly for competitive intelligence), but I believe both tools use a simple triangulation method (per #14) to project visits by keyword – although neither tool publicly discloses its specific methods to my knowledge. Both tools will offer about 10 keywords for free, so they’re worth a look.

—

Well, I spewed the majority of what’s been in my head regarding (not provided) solutions, but I’m thirsty for more knowledge.

I know we as an industry are still developing better and better solutions, and there are plenty of people out there with additional insights and ideas. If you’re one of them, please stand up – drop us a comment or something and clue us in on what you’re working on.

Cheers to nerding out with data.