Understanding Bot And Spider Filtering From Google Analytics

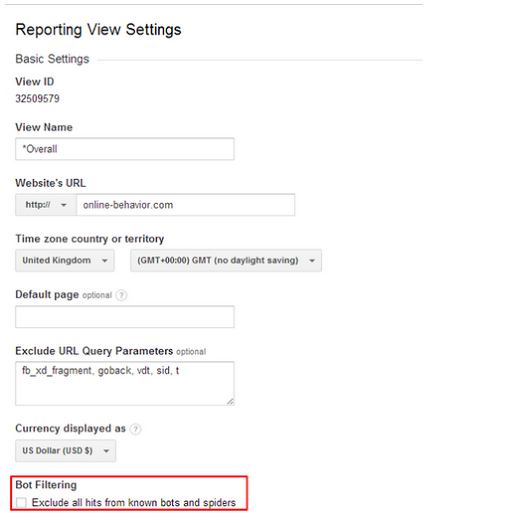

On July 30th, 2014, Google Analytics announced a new feature to automatically exclude bots and spiders from your data. At the view level of the admin area, you now have the option to check a box labeled “Exclude traffic from known bots and spiders”.

Most of the posts I’ve read on the topic simply mirror the announcement and don’t really talk about why you’d want to check the box. Maybe a more interesting question is why you would NOT want to. Still, most people are ultimately going to want to check this box. I’ll tell you why, but also how to test it beforehand.

The Spider-Man Transformer is neither a bot nor a spider, nor a valid representation of my childhood.

What are Bots and Spiders?

The first thing to understand is just what a Bot or Spider is. They are basically automated computer programs, not people, that are hitting your website. They do it for various reasons.

Sometimes it’s a search engine looking to list your content on its site. Sometimes it’s a program checking whether your blog has new content so it can let someone know in their news reader. Sometimes it’s a service that YOU have hired to make sure that your server is up, that its loading speed is normal, etc.

Some of the more basic bots don’t run code on your site, like the JavaScript that Google Analytics requires, so you don’t see them in your traffic reports. You will, however, see them in your server logs if you have access to those. Many web hosts will charge by the hit on the server, based on their server logs.
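If you do have access to your server logs, a rough way to see who is hitting you is to tally requests by user agent. Here is a minimal sketch, assuming your logs are in the common "combined" format where the user agent is the last quoted field. The hint words, the `count_bot_hits` name, and the sample lines are all made up for illustration, and plenty of bots don’t identify themselves this politely.

```python
import re
from collections import Counter

# Combined Log Format: request, status, bytes, referer, then the user agent
# as the last quoted field on the line.
LOG_LINE = re.compile(
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) \S+ '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"$'
)

# Hypothetical substrings that often show up in self-identifying bots.
BOT_HINTS = ("bot", "spider", "crawl", "monitor")


def count_bot_hits(lines):
    """Return a Counter of user agents that look automated."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line.strip())
        if not match:
            continue  # skip lines that are not in the expected format
        agent = match.group("agent")
        if any(hint in agent.lower() for hint in BOT_HINTS):
            hits[agent] += 1
    return hits


# Made-up sample lines; in practice you would pass an open log file instead.
sample_log = [
    '203.0.113.5 - - [30/Jul/2014:10:00:00 +0000] "GET /feed/ HTTP/1.1" 200 512 "-" "ExampleFeedBot/1.0"',
    '203.0.113.5 - - [30/Jul/2014:10:00:05 +0000] "GET /feed/ HTTP/1.1" 200 512 "-" "ExampleFeedBot/1.0"',
    '198.51.100.7 - - [30/Jul/2014:10:01:00 +0000] "GET / HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 6.1)"',
]
bot_hits = count_bot_hits(sample_log)
for agent, count in bot_hits.most_common():
    print(count, agent)
```

None of these hits would show up in Google Analytics unless the bot also ran the tracking JavaScript, which is exactly why the server log is the place to look for them.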

Some sites, like ours, get tons of these hits that we only have evidence of on the server log level. All you people who have automated services pinging our servers looking for new posts every few seconds are essentially costing us money. It’s ok, we don’t mind. It doesn’t screw up our analytics data.

The Problem With Smart Bots

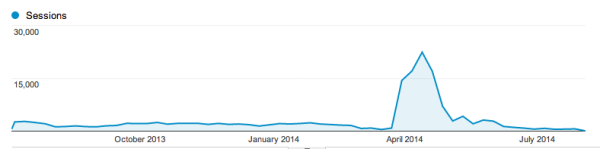

A stereotypical bot spike.

The problems start when you learn that some of these computer programs that are running automatically CAN run the Google Analytics code and WILL show up as a hit in Google Analytics. Sometimes a site will barely get touched by these “smart bots” and you won’t give them a second thought, as they won’t be visiting your site enough to have it skew your insights. Other times you’ll get wild and insane spikes in your data, which you’ll have to deal with.
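If you export daily session counts, one simple way to catch the wild spikes is to compare each day against the median of the days just before it. This is only a sketch under my own assumptions: the 7-day window, the 3x factor, the `find_spikes` name, and the numbers are all made up, and it is not how Google Analytics itself detects anything.

```python
from statistics import median


def find_spikes(daily_sessions, window=7, factor=3.0):
    """Return indexes of days whose sessions exceed `factor` times
    the median of the previous `window` days."""
    spikes = []
    for i in range(window, len(daily_sessions)):
        baseline = median(daily_sessions[i - window:i])
        if baseline > 0 and daily_sessions[i] > factor * baseline:
            spikes.append(i)
    return spikes


# Made-up daily session counts with one obvious spike on day index 7.
sessions = [1000, 1100, 950, 1050, 1020, 980, 1010, 5200, 1030, 990]
spike_days = find_spikes(sessions)
print(spike_days)
```

Using the median rather than the mean keeps a single spike from inflating the baseline for the days that follow it. A check like this will not catch bots that ramp up slowly.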

Dealing with these bots can be a big problem. In the past, you often had to hunt them down and identify them by the browser they reported, the number of pages they hit, and other behavior. Once you figured this out, you could filter out many of them going forward, but they would still remain in your historical data and affect sampling.
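To give a feel for that kind of hunting, here is a rough sketch that flags browsers whose traffic looks automated in an exported report: lots of sessions, roughly one page per session, and a bounce rate near 100%. The `BrowserRow` shape, the thresholds, and the sample numbers are assumptions for illustration, so tune them to your own site before trusting the output.

```python
from dataclasses import dataclass


@dataclass
class BrowserRow:
    browser: str
    sessions: int
    pageviews: int
    bounce_rate: float  # fraction between 0.0 and 1.0


def flag_suspects(rows, min_sessions=500, max_pages_per_session=1.05,
                  min_bounce_rate=0.98):
    """Return browser names whose behavior looks automated.

    The thresholds are hypothetical defaults, not established cutoffs.
    """
    suspects = []
    for row in rows:
        if row.sessions == 0 or row.sessions < min_sessions:
            continue  # too little traffic to skew anything
        pages_per_session = row.pageviews / row.sessions
        if (pages_per_session <= max_pages_per_session
                and row.bounce_rate >= min_bounce_rate):
            suspects.append(row.browser)
    return suspects


# Made-up rows, as if exported from a browser report.
rows = [
    BrowserRow("Chrome", 12000, 30000, 0.45),
    BrowserRow("Mozilla Compatible Agent", 9000, 9000, 1.0),
    BrowserRow("Safari", 300, 300, 1.0),
]
suspects = flag_suspects(rows)
print(suspects)
```

A flagged browser is only a lead to investigate, not proof of a bot. Real visitors on a one-page site can look the same.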

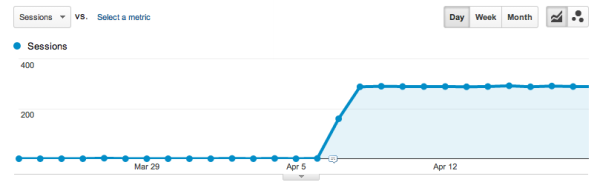

This is not a human-generated traffic pattern.

Because the bot traffic remains in your historical data, you’ll be forced to use segments to get rid of it when you look at your property, which will often cause sampling for large sites. Even worse, your total sessions are inflated by these bots, so even if you segment them out, you’ll trigger sampling faster in the interface, and the sample will be smaller and less accurate right out of the gate.

Even when you know about the bots AND deal with them, they can still make your life miserable if you’re an analyst.

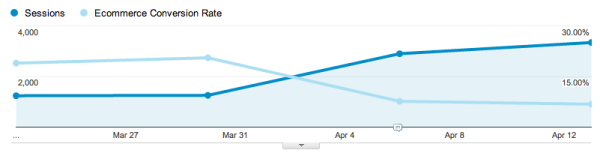

Goals and Conversions can be affected by a spike in segmented traffic.

The problems don’t even stop there, and it’s not just about traffic. For instance, some bots can even log into your site and pretend to be a specific audience segment. Many of these come from services YOU pay for, like Webmetrics.

If you don’t filter out these “super smart bots”, they’ll really mess up your data, because you might be looking at a specific audience segment and see a wild swing in traffic or Ecommerce conversion rate. Or worse, the bots ramp up slowly, and you don’t even get a clear indication that something odd happened.

The New Bot and Spider Filtering Feature

Which brings us back to Google Analytics’ new offering. This feature will automatically filter all spiders and bots on the IAB/ABC International Spiders & Bots List from your data. This is a list of spiders and bots that is continuously updated as people find new ones. Generally, membership to see this list costs from $4,000 to $14,000 a year, but by checking the little box on your view (and on every view you want to filter them from) you get to utilize the list for free. A number of bots and spiders may slip through the list, but usually not for long, and hopefully not long enough to affect your data.

You won’t be able to SEE the actual list they’re using, but you can exclude visits from bots on their list from showing up in your Analytics.

So great, right? Check that box? Not so fast.

Best Practices For Implementing New Filters

I am all for checking the box, but this is a great chance to talk about and implement best practices:

Step 1: Make sure you have an unfiltered view in your property that has zero filters, and on which you don’t check the box. This way if there IS some sort of error, you’ll have your data in a pure state to go back and look at, and compare against.

Step 2: Don’t implement it immediately in your main view. We’ve heard reports from people having some problems, or even their Ecommerce being affected. I don’t know how accurate any of these complaints are, but it’s always good practice to put a new filter in a test view first. Create a new test view that mirrors your main one in every other respect, and then check the box.

Let it run for a week or two, and see what sort of difference you have. Investigate major differences and clarify internally what monitoring systems you’re paying for.
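If you export daily sessions from both views, the comparison can be as simple as the sketch below. It assumes you have each view’s sessions keyed by date. The function names, the 10% threshold, and the numbers are made up for illustration.

```python
def compare_views(unfiltered, test):
    """Return (date, unfiltered_sessions, test_sessions, percent_removed)
    for every date in the unfiltered view."""
    report = []
    for date in sorted(unfiltered):
        raw = unfiltered[date]
        filtered = test.get(date, 0)
        removed_pct = 100.0 * (raw - filtered) / raw if raw else 0.0
        report.append((date, raw, filtered, round(removed_pct, 1)))
    return report


def days_to_investigate(report, threshold_pct=10.0):
    """Return dates where the two views differ by at least threshold_pct."""
    return [date for date, _, _, pct in report if abs(pct) >= threshold_pct]


# Made-up daily sessions for the unfiltered view and the test view.
unfiltered = {"2014-08-01": 1000, "2014-08-02": 1200, "2014-08-03": 4000}
test = {"2014-08-01": 980, "2014-08-02": 1170, "2014-08-03": 1100}

report = compare_views(unfiltered, test)
flagged = days_to_investigate(report)
for row in report:
    print(row)
print("Investigate:", flagged)
```

A small, steady gap is what you would hope to see from routine bot removal. A day where most of the traffic disappears is worth checking against your monitoring services before you trust either number.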

Step 3: If you’re happy with the new bot and spider exclusion filter based on this test, then go ahead and implement it in the main view.

I don’t know if this is going to solve all our smart bot and spider problems, but it’s a great start. As someone who recently had to manually exclude hundreds of different IP addresses from a view for a client, I can attest to a single checkbox being a humongous time saver.

So follow the best practices, and hopefully enjoy your cleaner data.