10 Critical Checks For Rebuilt Websites (Using Free Google Tools)

You did it. You built the perfect site with the best interface ever and more optimized content than your users know what to do with. And then you launched it. Awesome. Now what? If you don’t have a clear answer to that question, this post is for you.

We’ll go through the free tools and reports we used when LunaMetrics migrated to its new site, and discuss how they apply to your website. I’ll keep everything simple and write with the beginner or digitally inexperienced in mind. If you need something a little more advanced, like custom reports, give Sean’s great post on auditing a migrated site a read here.

Getting Started

Your first stop will be Google Analytics. If you don’t have it, get it, because analyzing your web traffic is what all the cool kids are doing these days. If it hasn’t been set up already, you will miss out on valuable insights by not being able to see your old traffic data.

Read this article and work with your developer to get it set up on your website so you will be covered in the future. You can then skip down to the section on Google Search Console (formerly Google Webmaster Tools). If you have Google Analytics already, keep reading.

In this section, we’re going to go over basic reports in GA that will give you an idea of whether the migration went smoothly. We’ll get into fixing any issues in the next section.

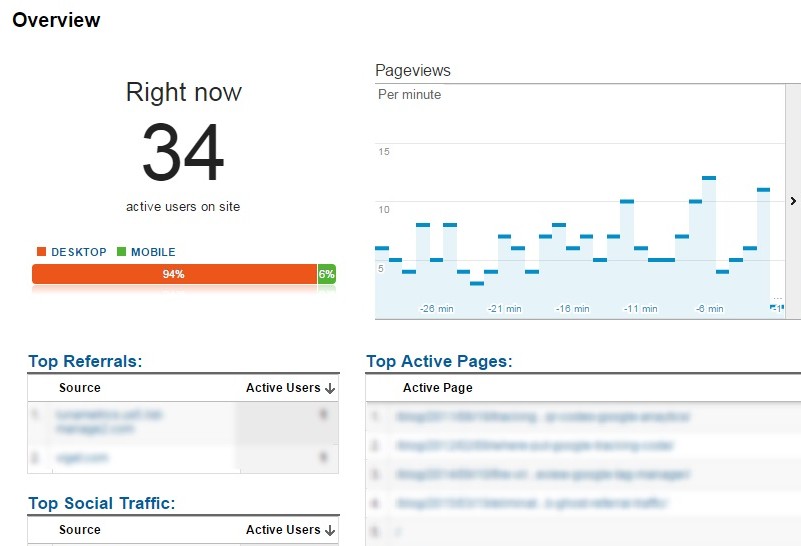

1. Real-Time Overview (GA)

The great thing about Google Analytics is that it can provide an overview of what’s happening almost immediately after launch. On the left-hand side of the interface, click on “Real-Time,” then head to “Overview.” This report will show you how many people are using your site and the pages they are visiting.

If you notice nothing when there should be activity on your site, you might have an issue with your tracking code and you should discuss this with your web developer. Use this report to quickly determine if anything has gone severely wrong, but don’t spend too much time on it.
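If Real-Time stays empty, one quick thing to rule out before calling your developer is a missing snippet. Here is a minimal sketch, assuming you have saved a page's source as a string. The list of script URLs is our own illustrative assumption and may not cover every way Google Analytics can be installed.

```python
# Script URLs that commonly appear in Google Analytics or Tag Manager snippets.
# This list is an assumption for illustration and is not exhaustive.
GA_MARKERS = (
    "google-analytics.com/analytics.js",
    "google-analytics.com/ga.js",
    "googletagmanager.com/gtag/js",
    "googletagmanager.com/gtm.js",
)


def has_tracking_snippet(html: str) -> bool:
    """Return True if the page source references one of the known scripts."""
    lowered = html.lower()
    return any(marker in lowered for marker in GA_MARKERS)


# Hypothetical page source for demonstration.
page_source = '<script async src="https://www.google-analytics.com/analytics.js"></script>'
print(has_tracking_snippet(page_source))
```

A False result only means none of these particular URLs were found, so treat it as a prompt to investigate rather than proof that tracking is broken.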

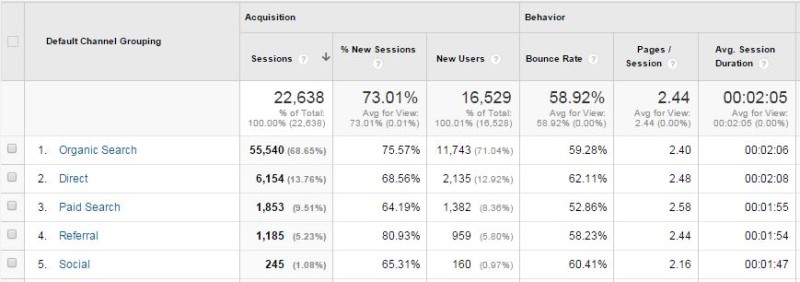

2. Channels (GA)

Once you’ve finished with the real-time overview, scroll down the left-hand menu and select “Acquisition.” Then click “Channels.” This gives a quick overview of how your different sources of traffic are performing. Here are some common ones you might see:

Organic Search: visitors who arrived via an organic (non-paid) search result

Paid Search: users who clicked on paid ads

Social: visitors who came from a social media link

Referral: visitors who clicked on a link on another website (not a search engine)

Direct: people who typed your website directly into their browser or came by bookmark.

If you see steady numbers in everything, but notice that organic search traffic, indicated by “Sessions” and “New Users,” has tanked, there could be an issue with how your site is being indexed. Note any abnormalities, and keep them in mind as we move on.
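If you would rather not eyeball the numbers, the same comparison can be sketched in a few lines. This assumes you have copied the sessions per channel for a period before and after launch into two dictionaries. The figures and the 30% threshold below are made up for illustration.

```python
def flag_channel_drops(before, after, threshold=0.3):
    """Return channels whose sessions fell by more than `threshold` (a fraction)."""
    flagged = {}
    for channel, old_sessions in before.items():
        if old_sessions == 0:
            continue  # no baseline to compare against
        new_sessions = after.get(channel, 0)
        change = (new_sessions - old_sessions) / old_sessions
        if change < -threshold:
            flagged[channel] = round(change, 2)
    return flagged


# Hypothetical session counts for equal-length periods before and after launch.
before = {"Organic Search": 1200, "Direct": 800, "Referral": 300}
after = {"Organic Search": 480, "Direct": 790, "Referral": 310}

print(flag_channel_drops(before, after))  # {'Organic Search': -0.6}
```

Compare periods of the same length, and keep seasonality in mind before blaming the migration for a dip.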

3. All Pages & Landing Pages (GA)

Head to the “Behavior” drop-down, then click “Site Content” and “All Pages.” This will tell you which pages are receiving views and how long people are viewing them. The key in this step is not to get caught up in the URLs just yet. Next to the “Primary Dimension” line just below the graph, click “Page Title.”

Then, in the search box on the right-hand side of the screen, type in the title of your error pages. If you don’t know what the titles are, they will generally be something along the lines of “Page Not Found.” This will show you which pages returned a 404 (not found) error. If there is a sudden spike in the number of 404 pageviews, it indicates that some pages didn’t quite make the migration correctly.
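If you export this report, you can pull out the error rows with a short script. This is a rough sketch that assumes a CSV export with “Page,” “Page Title,” and “Pageviews” columns. Those column names and the sample rows are assumptions, so adjust them to match your own export.

```python
import csv
import io


def not_found_rows(csv_text, title_fragment="Page Not Found"):
    """Return the rows whose page title contains the error-page title."""
    reader = csv.DictReader(io.StringIO(csv_text))
    needle = title_fragment.lower()
    return [row for row in reader if needle in row["Page Title"].lower()]


# Hypothetical export contents for demonstration.
sample_export = """Page,Page Title,Pageviews
/blog/old-post/,Page Not Found,42
/about-us/,About Us,310
/services/seo,Page Not Found,17
"""

for row in not_found_rows(sample_export):
    print(row["Page"], row["Pageviews"])
```

Sorting the result by pageviews gives you a rough priority list for the fixes covered later in this post.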

While you’re here, you also want to look at the “Landing Pages” report. This is a list of all pages that someone landed on when they first visited your site. Take a look at all traffic and organic traffic before and after the redesign. If there is a large drop-off in traffic on an important landing page, make a note of that.

Moving On

Next, we’re going to pay a visit to Google Search Console, formerly known as Google Webmaster Tools, and start fixing the issues you may have noticed in your Google Analytics reports. We’ll also look for additional issues that require attention. For the uninitiated, Google Search Console is a free service that helps you understand how Google views your website and provides recommendations on how you can optimize it.

If you don’t have your website hooked up to Google Search Console, you should do that before we go any further. You’ll need a Google account to sign in. If you don’t have one, make one here: https://accounts.google.com/signup. Then, head over to: https://www.google.com/webmasters/tools/. Click on “add site” and follow their instructions to verify that you own the website. Be sure to add every site and subdomain that you manage.

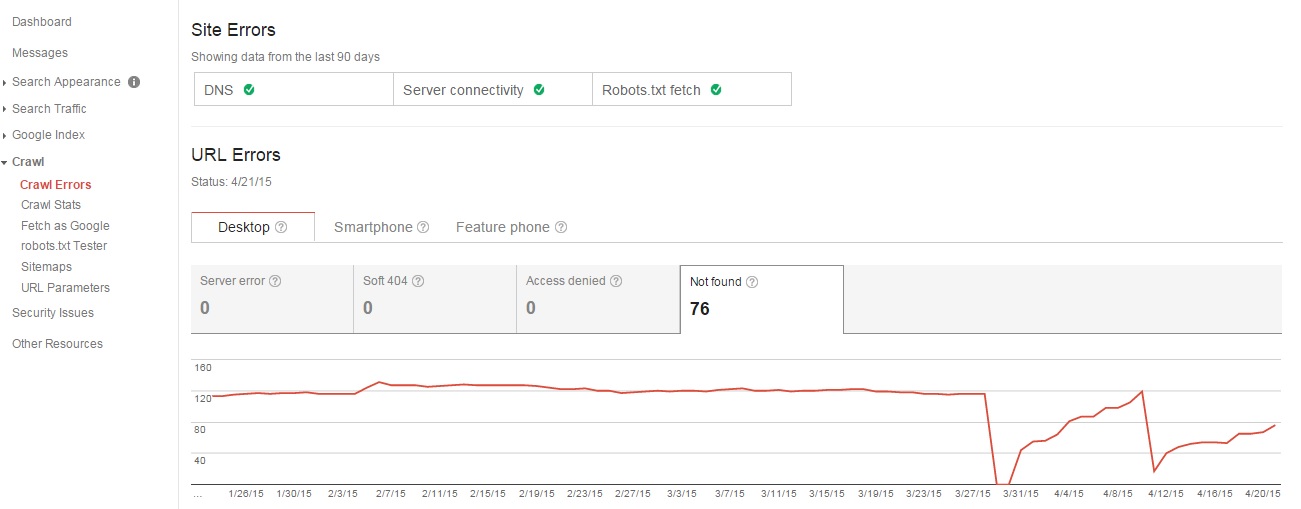

4. Crawl Errors & Crawl Stats (GSC)

Our first stop in GSC falls under the “Crawl” section, located on the left-hand side of the interface. Click the drop-down, then click on “Crawl Errors.” This section will tell you which pages Google could not access and which ones returned an HTTP error code. Ensuring that Google can see and index your website is the first step towards ensuring that your users will be able to find you.

Under “Site Errors,” you will see the issues that kept Googlebot, the tool Google uses to crawl new and updated pages and add them to its search index, from accessing your site. There generally shouldn’t be any issues, but if there are, contact your web and/or server host to make sure that your server is running smoothly and that no security protocols are preventing Google from crawling your site.

Also check “Crawl Stats,” located under “Crawl Errors,” where you should see a spike in pages crawled and kilobytes downloaded right after the site launch. If you don’t, Googlebot may be having problems crawling your site.

What you want to concentrate on in “Crawl Errors” is “URL Errors.” These will tell you the specific pages that Googlebot had trouble accessing. Look for sudden spikes in the number of “Not found” or “Blocked” pages. Remember that page report we looked at in Google Analytics? If you still have a large number of important pages that are returning 404 (not found) response codes, call Houston, because you have a problem.

To fix this, either restore the content to the page or use permanent 301 redirects to tell Google that the content on that page has moved. At LunaMetrics, we downloaded every page with a 404 error and manually found the new URL we needed to redirect them to. You should be able to set up the redirects yourself using your site’s content management system. However, keep in mind that many 404s might be false alarms or insignificant. If you’re unsure of how to prioritize 404s, check out #9 in Reid’s post on crawling indexation metrics.

Once you’ve determined which 404s you need to redirect, make sure you choose pages that are relevant to the old content. If, for example, you had a page about nerf guns (which we don’t have, but should) and redirected it to a higher-level page about toys because there is no longer a more relevant page on your site, you might see a “soft 404 error.” You often won’t get these, but if there is no page that you could realistically redirect to, it is better to return a 404 error.
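The sorting step described above can be sketched as a simple lookup: URLs with a relevant replacement get a 301, and everything else is left to return a 404. The paths and the mapping below are hypothetical, and you would still enter the resulting redirects through your CMS or server configuration.

```python
def plan_redirects(not_found_paths, replacements):
    """Split 404 paths into ones to redirect and ones to leave as 404s.

    `replacements` maps an old path to a relevant new path. Old paths with
    no relevant replacement are deliberately left out of the mapping.
    """
    redirects = {}
    leave_as_404 = []
    for path in not_found_paths:
        target = replacements.get(path)
        if target:
            redirects[path] = target
        else:
            leave_as_404.append(path)
    return redirects, leave_as_404


# Hypothetical data: paths exported from Crawl Errors, mapped by hand.
not_found = ["/blog/old-post/", "/toys/nerf-guns/", "/services/seo"]
replacements = {
    "/blog/old-post/": "/blog/new-post/",
    "/services/seo": "/services/search-engine-optimization/",
}

redirects, leave_as_404 = plan_redirects(not_found, replacements)
print(redirects)
print(leave_as_404)  # ['/toys/nerf-guns/']
```

Keeping the mapping in one place also makes it easier to spot a target that is itself on the 404 list.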

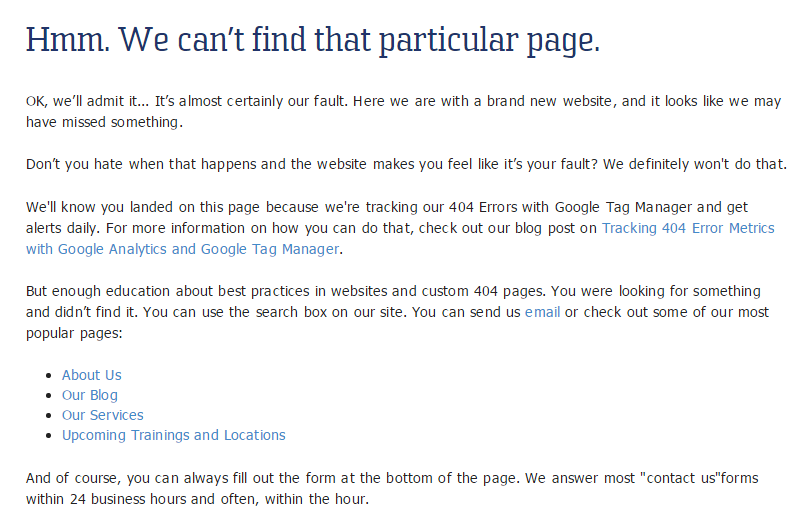

To improve user experience, you might display a custom 404 page with links to your most popular pages. Here is what we hit you with if you visit a non-existent page on the LunaMetrics site:

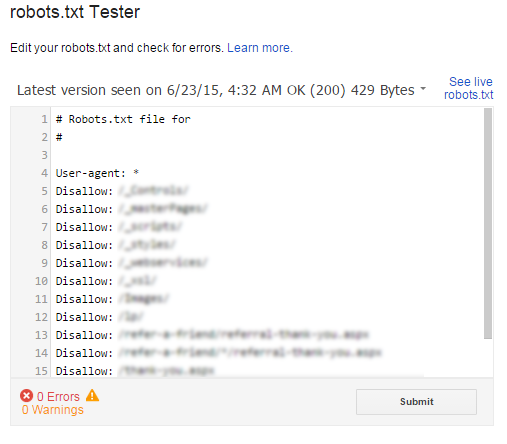

5. Robots.txt Tester (GSC)

So you’ve got your redirects sorted out, but you see an uptick in “blocked pages” under Crawl Errors. Now what? Well, you’ll want to check your robots.txt file, which tells Googlebot what it cannot access. This includes things like confirmation pages that you don’t want Google to show in search results. It is also where you can point crawlers to your sitemap.

It should not block your “money” pages, such as your “About Us.” If your most important pages are disallowed in the robots.txt file, your users won’t be able to find them via search, and you’re gonna have a bad time.

Head down to the “robots.txt Tester” section under the “Crawl” header. Often, web developers will keep new pages in robots.txt so that they aren’t accidentally indexed while the site is under construction. Sometimes they will forget to remove some or all of the pages from the file, so note any pages that don’t look like they should be there.

Before you do anything or if you’re not sure if a page belongs, ask your web designer for verification. When you get the go-ahead, remove the misplaced pages. Put any URLs that you removed from robots.txt into the URL tester to confirm that Googlebot can now access them.
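If you have a long list of important URLs, you can run a similar check offline with Python’s built-in robots.txt parser. This is only a sketch. The rules and URLs are made up, and Python’s parser may not resolve conflicting Allow and Disallow rules exactly the way Googlebot does, so treat the GSC tester as the final word.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return [url for url in urls if not rules.can_fetch(user_agent, url)]


# Hypothetical robots.txt that still blocks a page left over from development.
robots_txt = """User-agent: *
Disallow: /thank-you/
Disallow: /about-us/
Sitemap: https://www.example.com/sitemap.xml
"""

important_pages = [
    "https://www.example.com/",
    "https://www.example.com/about-us/",
]

print(blocked_urls(robots_txt, important_pages))
# ['https://www.example.com/about-us/']
```

Run it again after your changes go live. An empty list means none of the pages you care about are disallowed according to this parser.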

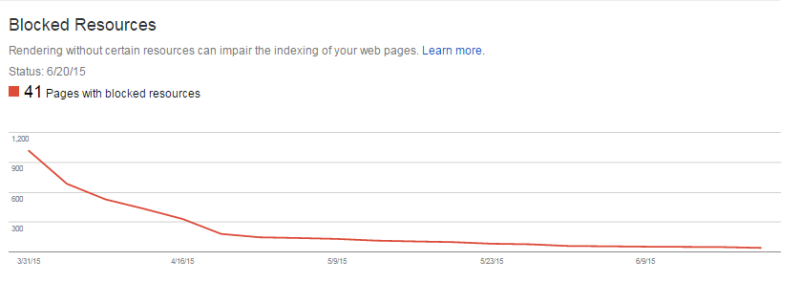

6. Blocked Resources & Fetch as Google (GSC)

Speaking of blocking stuff, you should also take a look at the “Blocked Resources” report under “Google Index.” This will show you which of the linked images, CSS, and JavaScript that make your website sexy are being blocked in robots.txt. If you see a spike after your launch, your developer may have left rules in robots.txt that kept Google away from these resources while the site was under construction.

Rendering without these resources can negatively impact how Googlebot sees your site. To determine what might be missing, use the Fetch as Google tool under “Crawl” to “fetch and render” your most important pages. If you get anything but a “complete” status and you see that the Googlebot view and the visitor view are far apart, you might want to discuss unblocking some resources with your web designer.
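To get a rough preview of what that report might flag, you can list the images, stylesheets, and scripts a page references and test each against your robots.txt. This is a sketch under a few assumptions: the HTML, base URL, and rules are invented, it only looks at `src` and `href` attributes, and it applies one robots.txt to every URL even though resources hosted on other domains answer to their own robots.txt.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser


class ResourceCollector(HTMLParser):
    """Collect absolute URLs of scripts, images, and linked files."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag in ("script", "img") and attr_map.get("src"):
            self.resources.append(urljoin(self.base_url, attr_map["src"]))
        elif tag == "link" and attr_map.get("href"):
            self.resources.append(urljoin(self.base_url, attr_map["href"]))


def blocked_resources(html, base_url, robots_txt, user_agent="Googlebot"):
    """Return the page resources that the robots.txt rules disallow."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    collector = ResourceCollector(base_url)
    collector.feed(html)
    return [url for url in collector.resources if not rules.can_fetch(user_agent, url)]


# Hypothetical page and rules for demonstration.
html = (
    '<link rel="stylesheet" href="/assets/site.css">'
    '<script src="/js/app.js"></script>'
    '<img src="/assets/logo.png">'
)
robots_txt = "User-agent: *\nDisallow: /assets/\n"

print(blocked_resources(html, "https://www.example.com/", robots_txt))
```

Anything this prints is worth raising with your developer alongside the Fetch as Google results.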

7. Sitemaps (GSC)

We just went over how to fix what’s called under-indexation, where pages and resources that needed to be indexed were buried in robots.txt. Now we want to look for over-indexation and index bloat, where pages that should be in robots.txt are nowhere to be found. If this is ignored, it could diminish your “link juice,” which is the positive ranking value passed from page to page via your links.

Look at the “Sitemaps” report under “Crawl” and check out the number of items submitted vs. the number of items indexed. If there are more pages indexed than those you are counting on to be your search engine landing pages, call your web developer and work with them to determine which pages should be kept out of the index.
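Before comparing submitted and indexed counts, it helps to confirm that the sitemap itself lists the pages you expect. Here is a small sketch that parses a standard XML sitemap and compares it with a hand-made list of landing pages. The sitemap contents and the expected list are made up for illustration, and this checks only what you submitted, not what Google has indexed.

```python
import xml.etree.ElementTree as ET

# Namespace used by the standard sitemap protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return every <loc> URL listed in a sitemap document."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def compare_with_expected(xml_text, expected_urls):
    """Report expected pages missing from the sitemap, and unexpected extras."""
    listed = set(sitemap_urls(xml_text))
    expected = set(expected_urls)
    return {
        "missing_from_sitemap": sorted(expected - listed),
        "unexpected_in_sitemap": sorted(listed - expected),
    }


# Hypothetical sitemap and landing-page list.
sitemap_xml = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/thank-you/</loc></url>
</urlset>"""
expected = ["https://www.example.com/", "https://www.example.com/about-us/"]

print(compare_with_expected(sitemap_xml, expected))
```

In this made-up case, the confirmation page should probably come out of the sitemap and the About Us page should go in.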

8. PageSpeed Insights (Google Developers)

After you’ve got your blocked resources sorted out, take a look at your page speed here. Page speed is an important part of the user experience, and can have an effect on where your site falls in the search results. Test your most important pages to see where you fall. If there are small errors here or there, you should still be okay. However, if you notice anything really out of the ordinary, contact your web designer for some help in optimizing your pages. And if your mobile score in particular is not looking so hot, keep reading.
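If you have more than a handful of pages to test, PageSpeed Insights also has an API. The sketch below assumes version 5 of that API and its Lighthouse-style response, where the performance score is a number between 0 and 1. Check the current API documentation before relying on it, since the endpoint, quotas, and response fields may differ from what is assumed here.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint: version 5 of the PageSpeed Insights API.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url, strategy="mobile"):
    """Build the request URL for one page and one strategy (mobile or desktop)."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def performance_score(report):
    """Read the performance score from a parsed response, or None if absent."""
    categories = report.get("lighthouseResult", {}).get("categories", {})
    return categories.get("performance", {}).get("score")


def fetch_score(page_url, strategy="mobile"):
    """Call the API over the network and return the performance score."""
    with urlopen(build_psi_url(page_url, strategy)) as response:
        return performance_score(json.load(response))


# Building the URL needs no network access. example.com is a placeholder.
print(build_psi_url("https://www.example.com/"))
```

Calling `fetch_score` for both the "mobile" and "desktop" strategies on your most important pages gives you a quick before-and-after benchmark.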

9. Mobile Usability Tests (PageSpeed Insights, GSC, & Google Developers)

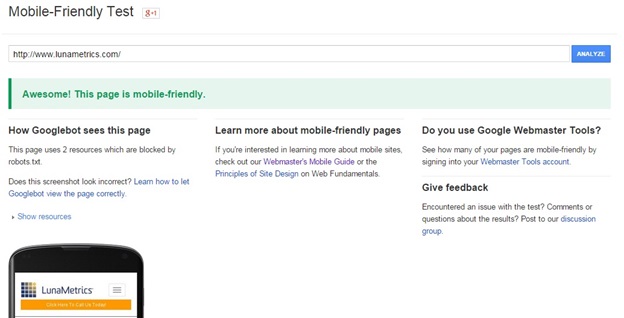

Because of the mobile-friendly algorithm update Google launched on April 21, 2015, everyone spent the past quarter making sure the mobile version of their site was ready for the big rollout so that they didn’t get penalized in search results.

As our small case study indicated, the update didn’t totally smash websites as per the doom-and-gloom forecasts, but your best bet is to make sure your site passes. If you don’t have a mobile version of your site, you should fix that yesterday. With more searches than ever coming from mobile devices, you can’t afford to miss out on that traffic.

There are three different tests you can use to check if your site is mobile-friendly. The first is the PageSpeed Insights report we just talked about, but there is also a “Mobile Usability” test in Google Search Console under “Google Index,” as well as a Mobile-Friendly Test from Google Developers.

Because of discrepancies in how PageSpeed Insights and the GSC Mobile Usability test run, you might get contradictory results. The best approach is to use the Google Developers test. If it finds any errors, though, it might suggest that there is a problem with the robots.txt file and send you to the PageSpeed Insights test, which could give you a different answer and create more confusion. You might also get differing results on second and third run-throughs. If you want to get a better idea of why these tests are flawed in this way, read this post. Even so, we would still recommend that you get passing marks on these tests.

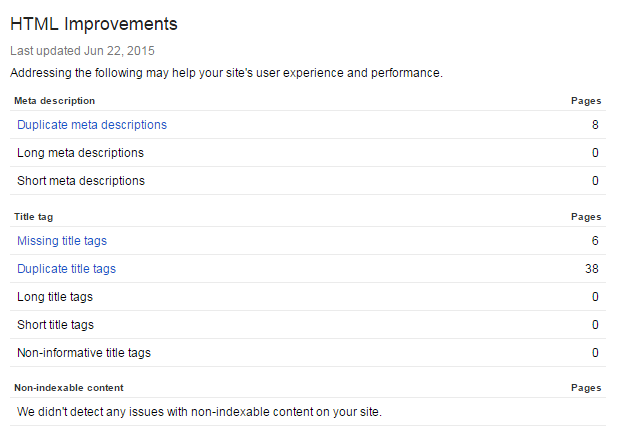

10. HTML Improvements (GSC)

Now that we’ve cleared all that up, we need to take a look at the “HTML Improvements” report under “Search Appearance,” where we will see how your meta descriptions and title tags are doing.

Your meta descriptions are little summaries that let the user know what your page is about and why they should click. They do not affect search rankings.

Your title tags, on the other hand, define the title of a page and let the search engine know what it’s about. Title tags are one of the most important on-page aspects of SEO and are a factor in search results. Don’t neglect either.

In this report, you will see which pages have duplicate, missing, long/short, or non-informative meta descriptions and title tags. To fix these issues, go through and create unique, concise, and informative ones for each page. There will be some exceptions as to which pages need unique ones, such as pages that list one author’s blog posts, but in general, each page should get its own.
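You can run a rough version of this check yourself on saved page sources. The sketch below looks only for missing titles, missing meta descriptions, and duplicate titles. It is our own simplified stand-in and not how the GSC report is computed, and the sample pages are invented.

```python
from collections import defaultdict
from html.parser import HTMLParser


class HeadTags(HTMLParser):
    """Capture the <title> text and the meta description of one page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attr_map.get("name") or "").lower() == "description":
            self.description = (attr_map.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_pages(pages):
    """`pages` maps URL -> HTML source. Return a dict of issue -> list of URLs."""
    issues = defaultdict(list)
    urls_by_title = defaultdict(list)
    for url, html in pages.items():
        tags = HeadTags()
        tags.feed(html)
        title = tags.title.strip()
        if title:
            urls_by_title[title].append(url)
        else:
            issues["missing title"].append(url)
        if not tags.description:
            issues["missing description"].append(url)
    for urls in urls_by_title.values():
        if len(urls) > 1:
            issues["duplicate title"].extend(urls)
    return dict(issues)


# Hypothetical pages for demonstration.
pages = {
    "/a": '<title>Widgets</title><meta name="description" content="All about widgets.">',
    "/b": "<title>Widgets</title>",
}
print(audit_pages(pages))
```

Length checks are left out on purpose, since the right limits are a judgment call. You could add them as extra parameters if you want them.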

We recommend keeping track of what you will be changing by putting this report into an Excel document. For best practices, read these posts on title tags and meta descriptions. Also, don’t slack on this as you continue to add new content, otherwise you’ll eventually have to do this whole process again.

To Infinity and Beyond

As you finish up your checks and fix any post-launch issues, keep monitoring Google Analytics and Google Search Console periodically to analyze your traffic for abnormalities. SEO is a continuous process, and you should always strive to provide a good user experience. I (biasedly) recommend our blog for insights into how to keep your data clean and other power tips for analyzing your data. Reid’s post on using Webmaster Tools (now Google Search Console) for SEO was the inspiration for this post, and is a great resource for your non-immediate post-launch needs. And as I mentioned earlier, Sean recently came out with a great post on more advanced concepts to keep in mind when auditing a site migration.

Good luck, and may the traffic be with you!