Notes On Filtering Spam And Bots From Google Analytics

Back in April, we wrote an April Fool’s Day post about eliminating bots and spam from Google Analytics. Given the current climate in the Google Analytics industry, the collective anger over bots and spam, and the craziness of some of the solutions on offer, we expected it to get a few chuckles before the internet moved on. (Seriously, go read it. I’ll wait…)

We never expected that, more than eight months later, it would still rank near the top of the search results and we’d still be getting comments and emails about it. The topic has been, and continues to be, written about so extensively that we hoped we wouldn’t need to add another post to the pile.

Yet here we are: the spam and the questions continue, so I thought it might help to jump in and clear a few things up. Add this to the growing collection of posts that will, hopefully, be rendered pointless by the fix Google is so often quoted as promising.

Let’s cover the basics!

Why Do They Do It?

I see this question often. Why would people purposely corrupt your data and inject seemingly random websites into your traffic? Think about all the other ways people tamper with data, and think about their motivations. Why do people create viruses? Why do people hack computers? Why do people send spam emails?

Please, oh please, do not click any links that don’t belong there. Would a site that looks like the Huffington Post (but isn’t quite spelled correctly) really link to YOUR website, one that isn’t even published yet? Resist the urge: if it looks suspicious, it probably is.

Some people create spam for fun; others create it to make money. The sites may contain advertisements, spyware, malware, viruses… just don’t click!

How Does It Get There?

There are several different types of bots, or non-human traffic, hitting your website: good bots and bad bots, ghost bots, zombie bots, robot crawlers, spiders, the works.

Good Bots

Systematic crawling of websites can be a good thing. Search engines crawl your website to find new content; other services check advertising links, monitor uptime, and so on. The best of the good bots register themselves on the IAB/ABC International Spiders & Bots List, and we can filter them out simply by checking the “Exclude all hits from known bots and spiders” box in our View Settings.

Bad Bots – No Visit

These bots are all the rage right now. No matter what tracking code you have on your website, Universal Analytics (through its Measurement Protocol) accepts hits from any environment with internet access. This is great for connecting transactions that occur offline, tracking non-website environments, and other perfectly legitimate reasons. It’s bad because, like most good things, it can be abused.
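To make that concrete, here is a minimal Python sketch of what a Universal Analytics hit looks like under the Measurement Protocol (v1): just an HTTP request to the `/collect` endpoint carrying the property ID in `tid`. The property ID `UA-00000000-1`, the client ID, and the helper name `build_pageview_hit` are placeholders for illustration. The sketch only builds the URL and sends nothing. Note that the document hostname (`dh`) is optional, which is one way hits end up with a (not set) hostname.

```python
from urllib.parse import urlencode

# Measurement Protocol (v1) collection endpoint used by Universal Analytics.
COLLECT_URL = "https://www.google-analytics.com/collect"


def build_pageview_hit(tracking_id, client_id, path, hostname=None):
    """Build, but do not send, a Measurement Protocol pageview URL (hypothetical helper)."""
    params = {
        "v": "1",            # protocol version
        "tid": tracking_id,  # property ID: the "address" the hit is delivered to
        "cid": client_id,    # anonymous client ID
        "t": "pageview",     # hit type
        "dp": path,          # document path
    }
    if hostname is not None:
        # Document hostname; optional, so a hit can arrive without one.
        params["dh"] = hostname
    return COLLECT_URL + "?" + urlencode(params)


# Nothing here involves loading a website: any internet-connected environment
# that knows (or guesses) a tracking ID can build a hit like this.
ghost_hit = build_pageview_hit("UA-00000000-1", "555", "/")
real_looking_hit = build_pageview_hit("UA-00000000-1", "555", "/", "www.example.com")
print(ghost_hit)
print(real_looking_hit)
```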

Think of your Google Analytics tracking ID as an email address. You send data to your specific address, and GA collects it and puts it in your reports. Anyone with an email address knows about spam, though. No, it doesn’t mean someone hacked you or singled you out. More than likely, just as with email, someone cycled through GA property IDs until they stumbled upon yours.

This traffic is annoying, but it’s the easiest to filter out. So many blog posts have been written about blocking the bad traffic by blocking the sites it comes from. But those sites change every day, and constant maintenance is time-consuming, frustrating, and error-prone.

A better idea: tell Google Analytics what good traffic looks like and accept only that.

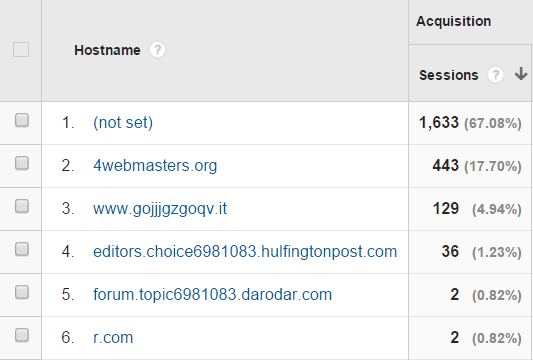

The Hostname dimension tells you the hostname of the page the user was on when the data was sent to GA. As a reminder, the hostname is the part after the protocol (http://) and before the port (:8080) or path (/about). If the traffic comes from someone on your website, the hostname will be your website’s domain, e.g. www.example.com.
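If it helps to see exactly which part of a URL that is, Python’s standard `urllib.parse.urlsplit` pulls out the same piece. This only illustrates the definition; GA reports whatever hostname arrives with each hit, and the URLs and the `hostname_of` helper below are hypothetical examples.

```python
from urllib.parse import urlsplit


def hostname_of(url):
    """Return the hostname part of a URL (hypothetical helper).

    urlsplit separates out the protocol, port, path, and query;
    .hostname returns just the host, lowercased.
    """
    return urlsplit(url).hostname


for url in [
    "http://www.example.com/",
    "https://www.example.com:8080/about?ref=nav",
    "https://Blog.Example.com/post/1",
]:
    print(url, "->", hostname_of(url))
```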

Check the Network report in Google Analytics (with Hostname as the primary dimension) to see all of the hostnames sending data to your property. You should recognize most of them, but you’ll probably also see (not set). That tells you someone sent data to your Google Analytics without filling in that particular dimension. Boom. Not real traffic.

You need to own this step and truly understand it. On which websites have you placed tracking code? Which hostnames on this list are legitimate? You may have dev sites, subdomains, or multiple domains all tracking into the same property. That’s fine, but make sure your hostname filter includes all of the good traffic.

Follow best practices: keep an unfiltered view, and test this filter before applying it to your main view!

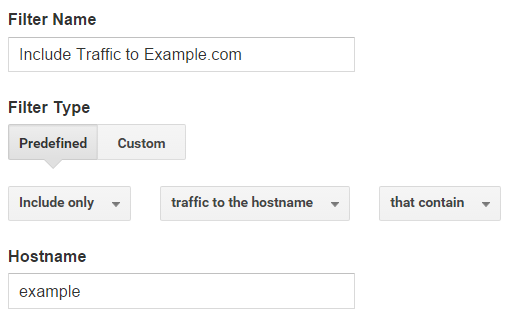

If you have only one website, or all of your websites share the same root domain, you can use a simple predefined filter and just fill in your domain name.

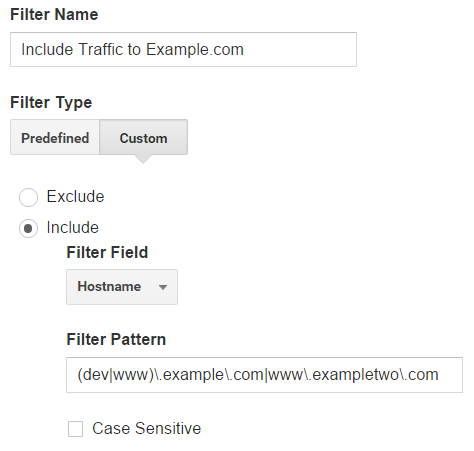

If you want to be more specific and list every hostname you actually care about, use a custom Include filter on the Hostname filter field, with a regular expression that matches your sites.
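Here is a sketch of how you might build and sanity-check that regular expression before pasting it into GA, assuming a hypothetical set of hostnames. Python’s `re` is not identical to GA’s regex engine, but for a simple escaped alternation like this they behave the same, and `re.search` mimics GA’s habit of matching anywhere in the field unless the pattern is anchored.

```python
import re

# Hypothetical hostnames that legitimately carry your tracking code:
# production, a store subdomain, and a dev server. Replace with your own.
GOOD_HOSTNAMES = ["www.example.com", "shop.example.com", "dev.example.com"]

# re.escape turns "." into "\." so it only matches a literal dot, and the
# ^(...)$ anchors stop lookalikes such as "www.example.com.spam.test".
HOSTNAME_PATTERN = "^(" + "|".join(re.escape(h) for h in GOOD_HOSTNAMES) + ")$"


def include_hit(hostname):
    """Approximate an Include filter on Hostname: keep the hit only on a match."""
    return re.search(HOSTNAME_PATTERN, hostname) is not None


print(HOSTNAME_PATTERN)  # the text you would paste into the filter pattern box
for host in ["www.example.com", "(not set)", "www.example.com.spam.test", "wwwxexample.com"]:
    print(f"{host!r}: {'kept' if include_hit(host) else 'filtered out'}")
```

As far as I know, GA caps a filter pattern at 255 characters, so if your list grows past that, look for a more compact expression (for example, one anchored on the shared domain) rather than splitting it: multiple Include filters are applied one after another, so a hit would have to match every one of them.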

Note: I’ve seen some blogs mention traffic from Google Translate or archive.org. That part’s up to you! Bottom line: do you care about that traffic? Does it make up a significant portion of your overall traffic? Will you ever use it to make a decision?

Some of the smarter bad bots that never visit your site may still try to spoof your hostname and pretend they visited. We’ve got a solution for that as well: basically, send something into Google Analytics that could only come from your website.
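One way to picture that idea, under stated assumptions: your own page code attaches a value to every hit (here, hypothetically, custom dimension 1, which the Measurement Protocol calls `cd1`), and an Include filter keeps only hits carrying it. The marker value and helper name below are made up, and this is a simplified model of the filter logic rather than a specific implementation.

```python
# Hypothetical marker value that your own page code sends with every hit,
# e.g. in custom dimension 1 (the Measurement Protocol parameter "cd1").
SITE_MARKER = "ex-7f3a"


def passes_marker_filter(hit):
    """Approximate an Include filter on custom dimension 1: keep marked hits only."""
    return hit.get("cd1") == SITE_MARKER


# A hit sent by your real tracking code carries the marker...
real_hit = {"dh": "www.example.com", "dp": "/", "cd1": SITE_MARKER}
# ...while a ghost hit can fake the hostname but does not know the marker.
spoofed_hit = {"dh": "www.example.com", "dp": "/"}

print(passes_marker_filter(real_hit), passes_marker_filter(spoofed_hit))  # True False
```

A spammer who bothers to read your page source could copy the marker, so this raises the bar rather than guaranteeing clean data.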

Bad Bots – With a Visit

Ok, now we’ve got a tough one. If someone is actively trying to impersonate a human visiting your website, they’re going to be harder to filter out. The hostname will show your actual website, because your website really was loaded, and so was the Google Analytics tracking code.

I don’t have a great answer here. A number of tools have been created to try to stop this, but we’re back to the "trying to block bad traffic" problem. You can keep maintaining blocklists, try the tools that are out there, or pay others to keep these lists up to date, but know that it’s much easier for spammers to create new spam websites than it is for you to keep up with them.

I’m not going to give you a list, because it would be out of date by tomorrow. There are plenty out there, and their regular expressions are long enough to require multiple filters.
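If you do maintain a blocklist, the “multiple filters” part is mechanical. Unlike Include filters, Exclude filters stack safely: a hit removed by any one of them stays removed. This sketch packs a list of domains into as few patterns as possible, assuming a 255-character limit per filter pattern. The spam domains are invented placeholders, and which field you filter on (Campaign Source, for example) is up to you.

```python
import re

MAX_PATTERN_LENGTH = 255  # assumed limit on a single GA filter pattern


def build_exclude_patterns(domains, max_len=MAX_PATTERN_LENGTH):
    """Pack escaped domains into as few "a|b|c" patterns as fit within max_len."""
    patterns, current = [], []
    for domain in domains:
        escaped = re.escape(domain)
        if len(escaped) > max_len:
            raise ValueError(f"{domain!r} alone exceeds {max_len} characters")
        if current and len("|".join(current + [escaped])) > max_len:
            # This domain would overflow the current pattern: close it, start a new one.
            patterns.append("|".join(current))
            current = []
        current.append(escaped)
    if current:
        patterns.append("|".join(current))
    return patterns


# Hypothetical spam domains standing in for a real (and soon outdated) blocklist.
spam_domains = [f"spam-site-{i}.test" for i in range(40)]
patterns = build_exclude_patterns(spam_domains)
for p in patterns:
    print(len(p), p)  # one line per Exclude filter you would need to create
```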

Filters Vs. Advanced Segments

Here’s a fun debate that really just gets to the heart of understanding these two tools. I see different suggestions all the time about how to “fix” the spam problem, and they often use one or both of these options.

However, they do different things and serve different goals. Filters are a fix; Advanced Segments are a band-aid.

Filters – Future

Filters change your data. They include or exclude data, or change the way it looks. This happens during the processing stage of Google Analytics, and the results are saved in your reports permanently; filters only affect data processed after they are applied, not your history. Standard reports are unsampled by default.

Advanced Segments – Dynamic

Advanced Segments change which data is included in the particular report you’re viewing. This means you can go back historically and look at a specific portion of your traffic, or, in our case, block out a specific portion of it. You can use an Advanced Segment to help with reporting, but know that any time you ask GA to reprocess a report, you run the risk of hitting sampling.

A Few Additional Notes

There have been some wacky ideas thrown around, and some of them are downright destructive.

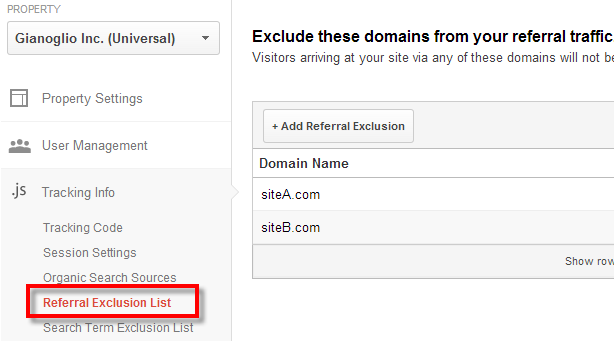

Referral Exclusion List

Do not, do not, do not add spam sites to your referral exclusion list! The referral exclusion list exists to help attribute the traffic coming to your site correctly.

Consider a site whose payments are processed externally on PayPal.com. To keep our attribution intact, we’d set up the referral exclusion list alongside our cross-domain tracking. We would add PayPal.com to the referral exclusion list, which strips the referral from that session. The session is then treated as Direct, which means that if the client ID is still in place, it will continue the previous session or, at the least, keep the previous session’s source/medium, giving credit to the correct website.

As you can see, this has nothing to do with spam. Adding spam sites will not remove them from your data; instead, it turns those sessions into Direct/None sessions. The traffic is still there, just disguised as legitimate sessions. You won’t be able to isolate it with Advanced Segments, and worse, it will actually look like your site is doing better!
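A deliberately simplified model makes the difference visible. It assumes only two rules from the discussion above: an excluded referrer is treated as no referrer (direct), and direct traffic does not overwrite a previously known source. GA’s real attribution logic has more rules than this, and the function name is hypothetical.

```python
# A deliberately simplified model of Universal Analytics session attribution,
# for illustration only; GA's real processing has more rules than this.
def attribute_session(referrer, excluded, previous_source=None):
    """Return the source / medium credited to a session (hypothetical helper)."""
    if referrer is None or referrer in excluded:
        # No usable referrer, so the session looks direct. Direct traffic does not
        # overwrite an earlier source, so a previous campaign is kept if known.
        return previous_source or "(direct) / (none)"
    return f"{referrer} / referral"


# Intended use: the return trip from PayPal keeps the original credit.
print(attribute_session("paypal.com", {"paypal.com"}, previous_source="google / organic"))
# Misuse: a spam referrer on the exclusion list is not removed, only relabeled.
print(attribute_session("spam-site.test", set()))
print(attribute_session("spam-site.test", {"spam-site.test"}))
```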

.htaccess

The .htaccess solution usually involves blocking known offenders from ever accessing your site. The risk is a bit higher because you’re actually blocking access to your site. If done correctly, the reward is higher too: if bots never load your site, they use fewer of your server resources and no traffic gets sent to GA.

Of course, this applies only to the bad bots that actually visit your site. The .htaccess solution will do nothing for referral spam that never loads your site.
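.htaccess rules are Apache configuration rather than Python, so this sketch shows the same idea as a small Python WSGI middleware: refuse requests whose Referer header names a blocklisted host, before the page (and its GA tag) is ever served. The blocklist entries and class name are hypothetical; an actual .htaccess setup would express this with Apache’s own directives (such as mod_rewrite conditions on the referer) instead.

```python
from urllib.parse import urlsplit

# Hypothetical blocklist; a real one would need constant upkeep.
BLOCKED_REFERRER_HOSTS = {"spam-site.test", "another-spam.test"}


class BlockReferrerSpam:
    """WSGI middleware: refuse requests whose Referer host is on the blocklist."""

    def __init__(self, app, blocked_hosts):
        self.app = app
        self.blocked_hosts = blocked_hosts

    def __call__(self, environ, start_response):
        # urlsplit("").hostname is None, so requests without a Referer pass through.
        host = urlsplit(environ.get("HTTP_REFERER", "")).hostname
        if host in self.blocked_hosts:
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden"]
        return self.app(environ, start_response)


def site(environ, start_response):
    """Stand-in for your real site: the page that would load the GA tag."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello"]


app = BlockReferrerSpam(site, BLOCKED_REFERRER_HOSTS)
```

Like .htaccess, this only helps against bots that actually request your pages; ghost hits go straight to GA and never touch your server.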

Conclusion

As long as there is an internet, spam will continue to be a problem. Think of junk mail, spam emails, robocalls, and now Google Analytics spam. I predict it will get better with time, so hang in there. The problem affects low-traffic websites more than large ones, because the spam makes up a larger percentage of their traffic. Start with best practices, and only then try some of the crazier tools and solutions.