Using Unsupervised Learning to Enhance Image Recognition

Image recognition is a major area of Artificial Intelligence (AI) research. It’s what allows self-driving cars to navigate, lets Apple’s Face ID unlock your phone, and enables Facebook to automatically suggest tags for friends in your photos. Almost all of these systems are trained on tens of thousands, even millions, of example images. These examples allow neural networks to decide if a picture contains a person, a dog, both, neither, or something else entirely.

If you’re not Google or Microsoft, you probably don’t have access to millions of example images. You might have some success training a neural network on a small number of images, but chances are you will run into issues where the recognition fails due to inconsistent lighting conditions or difficulty recognizing the same objects at different scales or orientations.

One solution to this problem is to use transfer learning. Popular image recognition networks like ResNet or VGG are great for this, and can be downloaded in pre-trained form, which saves time and effort. A pre-trained ResNet can already recognize 1,000 different classes of objects. In the process of learning to recognize those 1,000 classes, ResNet has learned to identify thousands of features that might be present in those objects. A common technique is to use those features as a starting point for detecting your own objects. People have found that this strategy leads to quicker and more robust results, and can work even when starting with a small image training set.

Another solution is data augmentation. For images, this means taking your existing training examples and applying different image transformations like rotation, scaling, color adjustments, and more. Logically, a picture of a fish should still be classified as a fish even if it’s rotated 45 degrees, so applying data augmentation can help create more training examples, and help make your algorithms more robust and tolerant to these variations.
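To make the idea concrete, here is a minimal, framework-free sketch of data augmentation. It is not the code from our experiment: the function names, the tiny list-of-rows image format, and the brightness range are all assumptions chosen for illustration. It also sticks to flips and 90-degree rotations, since arbitrary angles such as 45 degrees need pixel interpolation that an image library would normally handle.

```python
import random

# Assumption for illustration: a grayscale image is a list of rows,
# each row a list of integer pixel values in the range 0-255.

def flip_horizontal(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]

def rotate_90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def adjust_brightness(img, factor):
    """Scale every pixel by `factor`, clamping the result to 0-255."""
    return [[max(0, min(255, round(p * factor))) for p in row] for row in img]

def augment(img, rng):
    """Apply a random flip, a random number of quarter turns, and a
    random brightness change (the 0.8-1.2 range is an arbitrary choice)."""
    out = img
    if rng.random() < 0.5:
        out = flip_horizontal(out)
    for _ in range(rng.randrange(4)):
        out = rotate_90(out)
    return adjust_brightness(out, rng.uniform(0.8, 1.2))

rng = random.Random(0)  # seeded so runs are repeatable
image = [[10, 20], [30, 40]]
variants = [augment(image, rng) for _ in range(4)]
```

Each call to `augment` yields a slightly different version of the same picture, and the label (fish, logo, and so on) stays the same for all of them.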

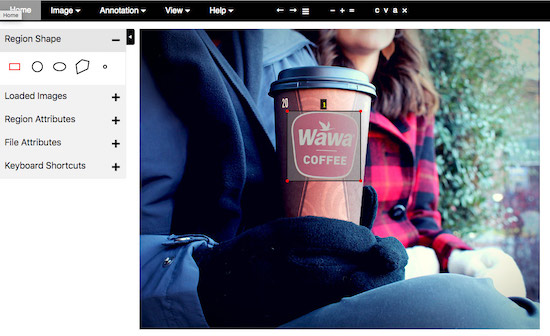

As an experiment, we wanted to see if we could detect a specific logo and provide a pixel-level map of squares that contain it. Instead of using a pre-trained network, we utilized “unsupervised” training data consisting of random images that have not been hand-labeled by a person. By training our algorithm first on unlabeled images, we hoped to achieve greater accuracy than we would get by training only with our small data set. Our approach still uses data augmentation at every step, which further boosts accuracy. By using unsupervised learning, the network first gets good at recognizing bits of images and differentiating them from each other. Then we take what it has learned and apply it to our problem of recognizing logos. Other researchers have found that they can achieve state-of-the-art performance by utilizing unsupervised learning as the first step in training.

For our dataset, we used 15 photos of coffee cups with logos on them, and a few random shots taken in the office. We also created black and white mask images to indicate which areas contained the logo. In addition, we had a collection of several hundred random images scraped from the Internet that we used as unsupervised data.

We conducted this experiment using PyTorch, a deep learning framework from Facebook that’s similar to Google’s TensorFlow. It gives you the building blocks for creating almost any type of neural network, and can leverage GPUs to speed up training.

Our model works similarly to the YOLO architecture. In the first stage, it breaks the image into component blocks and considers each block separately, producing a series of numbers that identifies the contents of that block. This stage is trained in an unsupervised fashion on unlabeled images. We feed in several thousand blocks of random images and train the network to identify each one individually. At this stage, we are able to identify individual image blocks with 90-95 percent accuracy. The goal here is not identifying the random images, but training the network to produce a series of numbers that can uniquely identify a piece of an image.
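The data preparation for this first stage can be sketched roughly as follows. This is an illustration under assumptions, not our actual pipeline: the function names, the block size, and the choice to give every block its own class ID are hypothetical, and the sketch only builds the training pairs rather than the network itself.

```python
def split_into_blocks(img, block_size):
    """Split a 2D image (a list of pixel rows) into non-overlapping
    square blocks, keyed by (block_row, block_col).

    Edge pixels that do not fill a whole block are dropped.
    """
    rows = len(img) // block_size
    cols = len(img[0]) // block_size
    blocks = {}
    for br in range(rows):
        for bc in range(cols):
            top, left = br * block_size, bc * block_size
            blocks[(br, bc)] = [
                row[left:left + block_size]
                for row in img[top:top + block_size]
            ]
    return blocks

def build_identity_dataset(images, block_size):
    """Pair every block with a unique integer ID.

    No human labels are needed: the "label" is just the block's own
    index, so the network's task is to tell blocks apart.
    """
    dataset = []
    for img in images:
        for block in split_into_blocks(img, block_size).values():
            dataset.append((block, len(dataset)))
    return dataset

# A 4x4 toy image with pixel values 0..15, split into 2x2 blocks.
toy = [[r * 4 + c for c in range(4)] for r in range(4)]
blocks = split_into_blocks(toy, 2)
dataset = build_identity_dataset([toy, toy], 2)
```

With augmentation applied to each block before it is fed to the network, the same ID has to be predicted from many slightly different views, which is what pushes the network toward features that are useful beyond these particular images.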

The second stage takes the results from stage one, and classifies each block component as either containing a logo or not containing a logo. In this stage, we use our labeled training data to determine if we are looking at a logo or some other random image. Since the algorithm looks at a small region of blocks at once, it can learn that logos usually appear on cups and near hands holding cups or under lids.
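One way the labels for this second stage could be derived from the black and white masks is sketched below. The function name, the pixel cutoff of 128, and the rule that a block counts as "logo" when at least half of its pixels are white are assumptions made for illustration, not a description of our exact code.

```python
def block_labels_from_mask(mask, block_size, threshold=0.5):
    """Turn a pixel mask into a grid of per-block labels.

    mask: 2D list of pixel values, 0 for background and 255 for logo.
    Returns a 2D list with 1 where the fraction of white pixels in a
    block is at least `threshold`, and 0 elsewhere.
    """
    rows = len(mask) // block_size
    cols = len(mask[0]) // block_size
    labels = []
    for br in range(rows):
        label_row = []
        for bc in range(cols):
            top, left = br * block_size, bc * block_size
            white = sum(
                1
                for row in mask[top:top + block_size]
                for p in row[left:left + block_size]
                if p >= 128  # treat anything brighter than mid-gray as logo
            )
            fraction = white / (block_size * block_size)
            label_row.append(1 if fraction >= threshold else 0)
        labels.append(label_row)
    return labels

# A 4x4 mask where the logo mostly covers the top-left 2x2 block.
mask = [
    [255, 255, 255, 0],
    [255, 255, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
labels = block_labels_from_mask(mask, 2)
```

The resulting grid has the same layout as the blocks from stage one, so each block's output can be paired directly with a logo or no-logo target.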

The early results are promising. We are able to process an image in a fraction of a second, and are getting usable results even with our extremely small training set. There’s still much work to be done before a system like this could be used in production. We would need to spend some time increasing the size of our training set to increase accuracy. The training set would also benefit from more images of cups in different environments and lighting conditions, and at different distances from the camera.

We could also optimize the processing speed so the algorithm can run in real-time on a cell phone or webcam. Fast image detection has many potential applications in augmented reality, digital assistants, robotics, and more.