Creating and Publishing a Machine Learning Model in Adobe Experience Platform

If you have ever worked in website analytics or digital marketing, you are probably familiar with Adobe Analytics, Adobe Campaign, or Adobe Target. Now a newer Adobe product is at the forefront of emerging Customer Data Platforms (CDPs): Adobe Experience Platform (AEP).

Adobe describes AEP as:

"The foundation of Experience Cloud products... an open system that transforms all your data —Adobe and non-Adobe — into robust customer profiles that update in real-time and uses AI-driven insights to help you to deliver the right experiences across every channel," (Adobe Office Website).

AEP provides services for data warehousing, for building and deploying advanced machine learning models, and, perhaps most importantly, for delivering insights and results back to the business.

To discover this product’s true capabilities, we completed a proof of concept (POC) project that used AEP to create and publish a machine learning model. In the POC, we ingested Salesforce data and built a model that predicted each opportunity’s probability of converting into a sale. We chose this use case based on our experience with many of our clients in the B2B space, who often have longer sales cycles consisting of multiple digital and personal interactions.

We will walk you through our entire process as we explored AEP from an Advanced Analytics perspective, discuss the challenges and surprises we encountered, and share learnings and tips for using the product.

The Three Main Stages

To make things simpler, let’s break this process down into three main stages: Discover, Build, and Activate. Working through them in order makes it easier to select the most impactful and feasible solution for your business.

Discover

This stage aims to gain a solid understanding of both business goals and data. It requires strong communication between the domain experts and data experts, and good collaboration with clients. For any model to be useful, we must remain constantly aware of the business context that we start and end with, and identify an activation plan upfront.

Build

At this stage, the Advanced Analytics team focuses entirely on the technical pieces. The goal is to create contextually meaningful features for a contextually meaningful, high-performing model.

Activate

The goal of this stage is to act on the model and create a measurable impact. It’s also a collaborative phase that requires input from all teams. Sample outcomes include increases in click-through rate, revenue, new site visitors, or site dwell time, or efficiency gains in internal processes.

Activation plans vary from project to project, but we will explain what we did for this POC and walk you through how we interacted with AEP at each stage.

Discover

A typical B2B company may use common tech products, like Salesforce, to help start and track conversations with multiple companies at a time, often called "Leads." Conversations can start from a variety of channels, such as events, conferences, referrals, websites, and community outreach. These are then recorded as "Leads" in Salesforce and ultimately transformed into "Opportunities" if there’s a good fit to work together.

Several of our teams helped develop the business objective for this project: Advanced Analytics, Marketing, Experience Practice Development (XPD), and Data Platforms. Together we determined that the objective was to help business development teams vet opportunities by using Salesforce (SFSC) data to predict how likely each opportunity is to become a sale.

Tip: Having direct access to your domain experts can make or break a project if you're not already highly familiar with the data yourself.

The next step we took was to meet with a marketing specialist who knows Salesforce data very well. After the high-level discovery of various data sources, we picked a few SFSC objects we thought would be relevant and worked with our data engineers and AEP SMEs to import those data sources into AEP.

Importing data into AEP is a two-step process: defining schemas and ingesting datasets. The first step is schema composition. The schema needs to follow the requirements of the Experience Data Model (XDM) System, a system designed by Adobe that standardizes customer experience data and defines schemas for customer experience management.

Adobe’s XDM has predefined schemas with specific column names and data types for all the customer experience data, making it easier to utilize the final results in other Adobe products. However, this creates some challenges for importing external data since these sources must follow the same XDM schema rules. You can follow our guide on best practices for designing schemas in AEP to overcome the challenges.
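To make the schema constraint concrete, here is a minimal Python sketch of the kind of reshaping external data needs before it fits an XDM schema: flat Salesforce columns are renamed, coerced to declared types, and nested under a tenant namespace (AEP places custom fields under an underscore-prefixed tenant ID). The tenant ID, the field mapping, and the XDM field names are hypothetical placeholders, not our actual schema.

```python
# Hypothetical tenant namespace: AEP nests custom XDM fields under your
# organization's underscore-prefixed tenant ID; this value is a placeholder.
TENANT = "_yourtenant"

# Hypothetical mapping: Salesforce API field -> (XDM field name, declared type).
FIELD_MAP = {
    "Id": ("opportunityId", str),
    "StageName": ("stageName", str),
    "Amount": ("amount", float),
    "CreatedDate": ("createdDate", str),  # kept as an ISO-8601 string
    "IsWon": ("isWon", bool),
}


def coerce(value, declared_type):
    """Convert a raw Salesforce value to the declared type (empty stays None)."""
    if value is None or value == "":
        return None
    if declared_type is bool:
        # Assumes booleans arrive as "true"/"false" strings or real bools.
        return str(value).strip().lower() == "true"
    return declared_type(value)


def to_xdm_record(sf_row: dict) -> dict:
    """Rename, type, and nest one flat Salesforce row as an XDM-style record."""
    if not sf_row.get("Id"):
        raise ValueError("Salesforce row is missing its Id")
    fields = {
        xdm_name: coerce(sf_row.get(sf_name), declared_type)
        for sf_name, (xdm_name, declared_type) in FIELD_MAP.items()
    }
    return {TENANT: {"opportunity": fields}}
```

AEP's ingestion workflows include their own field mapping, so you may not write this by hand; the sketch just shows why every source column has to land on a declared, typed XDM field.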

After the schema is designed, you can move on to ingesting your 'Datasets'. Besides all the data from Adobe tools, AEP offers many prebuilt connectors that link the platform to other data sources. For more information about data ingestion, check out this Adobe help page.

After importing those tables, we explored the data in more detail using SQL and Python. We also had another working session with the specialist to answer additional data quality and clarification questions to help prepare the Advanced Analytics team to move into the next stage.
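For a first pass at data quality, a few simple checks go a long way. The stdlib-only sketch below counts missing values per column and flags duplicate record IDs in a list of rows; the column names are hypothetical, and in a notebook you would likely do the same thing with pandas.

```python
from collections import Counter


def profile(rows, key="Id"):
    """Summarize row count, missing values per column, and duplicate keys.

    rows: list of dicts, e.g. records pulled from a hypothetical opportunity table.
    A value counts as missing if the column is absent or holds None or "".
    """
    columns = sorted({col for row in rows for col in row})
    missing = {
        col: sum(1 for row in rows if row.get(col) in (None, ""))
        for col in columns
    }
    key_counts = Counter(row.get(key) for row in rows)
    duplicate_keys = sorted(
        k for k, n in key_counts.items() if k is not None and n > 1
    )
    return {"row_count": len(rows), "missing": missing, "duplicate_keys": duplicate_keys}


report = profile([
    {"Id": "006A", "Amount": "1200"},
    {"Id": "006A", "Amount": ""},
    {"Id": "006B"},
])
```

Questions raised by a report like this (why an ID repeats, why a field is often blank) are exactly the ones worth bringing to the domain specialist.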

Tip: We recommend that an AEP SME lead the discovery phase to ensure the projects align with AEP standards and best practices.

Build

One of the most significant AEP capabilities is "recipe" creation. A "recipe" in AEP is a top-level container that holds the specific machine learning/AI algorithm or ensemble of algorithms as well as the processing logic and configuration needed to execute the trained model (source). More simply put, a recipe provides a Jupyter Notebook interface and modularizes that code for easy deployment into production.

We discovered that it’s difficult to explore different features, models, and tuning parameters within a pre-canned recipe. We highly recommend that Data Scientists build and evaluate the model in a regular notebook before publishing it into a recipe. All of our one-time model development was done in 'JupyterLab' within Notebooks, which works the same as a regular Python notebook.

Tip: The notebooks you create cannot be shared with other users on the platform, and there is no GitHub integration. To share your work, you need to manually download the notebook from the platform. This lack of version control is a common issue in many machine learning platforms.

There are several ways to load data into an AEP JupyterLab notebook. For example, you can work directly in Query Service, save the result as a dataset, and read that dataset into your notebook, or you can load a dataset directly in Python with DatasetReader.

We recommend loading your data from the Datasets through Query Service. This approach is the most straightforward and has the fewest limitations, and it also lets you pre-process the data in SQL.
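As a rough illustration of pre-processing in SQL before the data reaches Python, the sketch below uses an in-memory SQLite database as a stand-in for Query Service, with a hypothetical opportunity table. As we understand it, Query Service accepts connections from PostgreSQL clients, so the real query's dialect may differ slightly; the point is that filtering and null handling happen in the query itself.

```python
import sqlite3

# Stand-in for Query Service: an in-memory SQLite database holding a
# hypothetical opportunity table (our real tables and columns differed).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE opportunity (id TEXT, stage TEXT, amount REAL, is_won INTEGER)")
conn.executemany(
    "INSERT INTO opportunity VALUES (?, ?, ?, ?)",
    [
        ("006A", "Closed Won", 1200.0, 1),
        ("006B", "Closed Lost", 800.0, 0),
        ("006C", "Prospecting", 500.0, 0),  # still open: no final label yet
        ("006D", "Closed Lost", None, 0),   # missing amount
    ],
)

# Pre-process in SQL: keep only closed opportunities (their outcome is known)
# and replace missing amounts with 0 (an illustrative choice, not a rule).
QUERY = """
SELECT id, COALESCE(amount, 0) AS amount, is_won
FROM opportunity
WHERE stage IN ('Closed Won', 'Closed Lost')
ORDER BY id
"""
training_rows = conn.execute(QUERY).fetchall()
conn.close()
```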

Using what we learned about the data from the Marketing team, we engineered and selected a set of features, fed them into several predictive models, and tuned each model's hyperparameters to estimate the likelihood that an opportunity will become a sale.
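The sketch below shows the flavor of feature engineering we mean, computed as of a cutoff date so that no activity after that date leaks into the features. The input fields and feature names (days open, activity counts, a referral flag) are hypothetical examples, not the feature set we actually used.

```python
import math
from datetime import date


def engineer_features(opp, activities, as_of):
    """Build a small, hypothetical feature dict for one opportunity.

    opp: dict with 'created' (date), 'amount' (float or None), 'lead_source' (str)
    activities: list of dicts with a 'date' (date) for this opportunity
    as_of: cutoff date; activity after it is ignored to avoid leaking the future
    """
    past = [a for a in activities if a["date"] <= as_of]
    recent = [a for a in past if (as_of - a["date"]).days <= 30]
    return {
        "days_open": (as_of - opp["created"]).days,
        "log_amount": math.log1p(opp["amount"] or 0.0),  # tame skewed deal sizes
        "n_activities": len(past),
        "n_activities_30d": len(recent),
        "is_referral": int(opp.get("lead_source") == "Referral"),
    }


features = engineer_features(
    {"created": date(2021, 1, 1), "amount": None, "lead_source": "Referral"},
    [{"date": date(2021, 1, 10)}, {"date": date(2021, 2, 20)}, {"date": date(2021, 4, 1)}],
    as_of=date(2021, 3, 1),
)
```

Each candidate feature like these came from a conversation with the domain experts, which is why feature engineering, rather than model choice, ended up driving most of the improvement.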

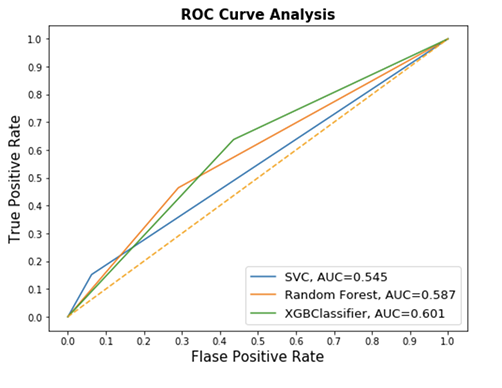

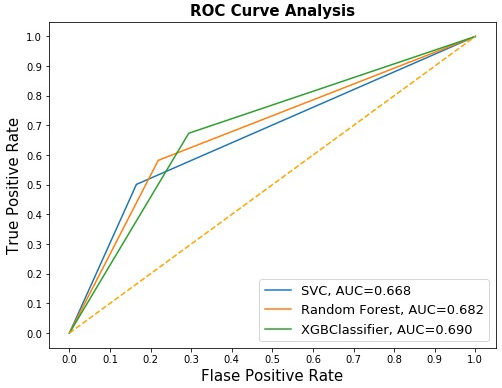

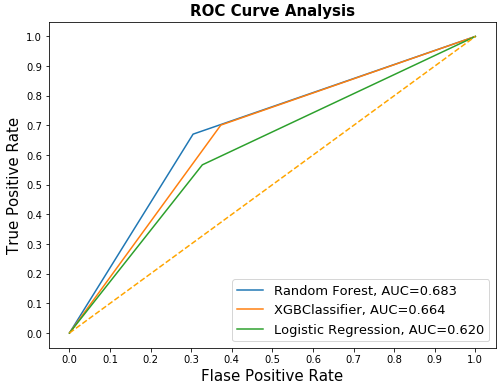

To evaluate results, we used the Receiver Operating Characteristic (ROC) curve, a common plot of a classifier's true positive rate against its false positive rate across all classification thresholds, which helps data scientists compare models and select the best fit. When evaluating ROC curves, we want the line to get as close to the top-left corner as possible. In other words, we want to maximize the area under the curve (AUC). An AUC of 1.0 means the model ranks every positive example above every negative one, so some threshold classifies all positive and negative class points correctly, while an AUC of 0.5 is no better than random guessing. The article "AUC-ROC Curve in Machine Learning Clearly Explained" helps explain the concept.
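To make the ROC and AUC definitions concrete, here is a minimal pure-Python sketch: it sweeps the classification threshold from the highest score down, records the false positive rate and true positive rate at each step, and integrates the resulting curve with the trapezoidal rule. In practice you would likely call scikit-learn's roc_curve and roc_auc_score instead; this version just shows what those numbers mean.

```python
def roc_points(labels, scores):
    """Return (FPR, TPR) points, lowering the threshold one distinct score at a time.

    labels: 0/1 ground truth; needs at least one positive and one negative.
    scores: model scores, where higher means "more likely positive".
    """
    pairs = sorted(zip(scores, labels), key=lambda p: -p[0])
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    tp = fp = 0
    points = [(0.0, 0.0)]
    i = 0
    while i < len(pairs):
        # Move every example that shares this score across the threshold together.
        score = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == score:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points):
    """Area under the ROC curve via the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


perfect = auc(roc_points([1, 1, 0, 0], [0.9, 0.8, 0.3, 0.1]))
mixed = auc(roc_points([1, 0, 1, 0], [0.8, 0.7, 0.4, 0.3]))
```

A ranking that puts every positive above every negative yields 1.0; in the mixed example, 3 of the 4 positive/negative pairs are ordered correctly, giving 0.75.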

Comparing the resulting ROC Analyses for our three modeling iterations (shown below), we saw the biggest increase in performance came with enhanced feature engineering. No matter how many models we tried and how much hyperparameter tuning we did, the biggest performance improvement driver was having meaningful, carefully selected features.

The fact that the biggest value-add depended on human-informed feature selection indicates that Data Scientists still need to be a part of the process and that we’re not yet able to rely on a fully automatic machine learning model.

Resulting ROC Analyses for All Three Proof of Concept Iterations

First iteration

Second iteration with additional feature engineering

Third iteration with hyperparameter tuning

Activate

After we determined the most robust feature set and the best-performing model with optimized hyperparameters, we moved on to building the recipe and published it as a 'Service', where retraining and re-scoring can be scheduled.

Using the AEP 'Recipe Builder' as our template, we moved our one-time model from the notebook into this builder. Check out Adobe’s video "Using Data Science Workspace to build and deploy a Model" to help you understand the entire process. The video does not use the most recent version of AEP, but it gives you a good overview of the process.

In addition to the notes the builder already includes, here are a few useful observations and tips:

- Before building the recipe, you need to prepare three datasets: the training set (which includes both the training and testing data), the scoring set, and the scoring results set (an empty table with the right schema works well).

- Do not put any comments in the two configuration files, and make sure the indentation is perfect.

- In the 'evaluator' cell, you have to follow the specified format for defining the "metrics" object. You can pick any performance metric you want, but all the results need to be stored in the "metrics" object, as illustrated below.

- Cells are not run in order; instead, the training steps run in this order:

  1. trainingdataloader.py (load and prepare the data)
  2. split() within evaluator.py to split the training set into train and test datasets
  3. train() within pipeline.py to train the model
  4. evaluate() within evaluator.py to evaluate the model performance on the testing dataset
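The execution order above can be mirrored in a plain script, which is a handy way to check the logic before pasting it into the builder. This is a hypothetical, self-contained stand-in: the function names echo the template's steps, but the real Recipe Builder functions have their own signatures and data-loading code. The metrics list follows the name/value/valueType shape we recall from Adobe's sample recipes; confirm the exact format against the evaluator template in your own notebook. The data and the toy model (win rate per lead source) are invented for illustration.

```python
import random


def load_data():
    """Stand-in for trainingdataloader.py: return hypothetical labeled rows."""
    rng = random.Random(0)
    win_prob = {"Referral": 0.7, "Event": 0.4, "Web": 0.1}  # invented rates
    rows = []
    for _ in range(200):
        src = rng.choice(list(win_prob))
        rows.append({"lead_source": src, "is_won": int(rng.random() < win_prob[src])})
    return rows


def split(rows, test_fraction=0.25, seed=42):
    """Stand-in for split() in evaluator.py: shuffle, then hold out a test set."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def train(train_rows):
    """Stand-in for train() in pipeline.py: toy model = win rate per lead source."""
    overall = sum(r["is_won"] for r in train_rows) / len(train_rows)
    totals = {}
    for r in train_rows:
        won, n = totals.get(r["lead_source"], (0, 0))
        totals[r["lead_source"]] = (won + r["is_won"], n + 1)
    rates = {src: won / n for src, (won, n) in totals.items()}
    # Unseen lead sources fall back to the overall training win rate.
    return lambda row: rates.get(row["lead_source"], overall)


def evaluate(model, test_rows):
    """Stand-in for evaluate() in evaluator.py: store every result in `metrics`."""
    probs = [model(r) for r in test_rows]
    labels = [r["is_won"] for r in test_rows]
    accuracy = sum((p >= 0.5) == bool(y) for p, y in zip(probs, labels)) / len(labels)
    brier = sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(labels)
    metrics = [
        {"name": "accuracy", "value": accuracy, "valueType": "double"},
        {"name": "brier_score", "value": brier, "valueType": "double"},
    ]
    return metrics


# Same order as the builder: load -> split -> train -> evaluate.
train_rows, test_rows = split(load_data())
model = train(train_rows)
metrics = evaluate(model, test_rows)
```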

The 'Train' and 'Score' buttons will help you validate all of the code and make sure everything is running smoothly. The 'Create Recipe' button will turn the code into a recipe that will show up within the 'Model' section, under 'Recipes'.
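Since a stray comment in a configuration file can break a Train or Score run, a quick local parse check is cheap insurance. Assuming the configuration files are JSON (which has no comment syntax), Python's json module will reject a commented file and report where the problem is; the configuration content below is a hypothetical example, not Adobe's exact template.

```python
import json


def check_config(text):
    """Return None if `text` parses as JSON, else a short error description."""
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        return f"line {err.lineno}, column {err.colno}: {err.msg}"
    return None


# Hypothetical configuration snippets (not Adobe's exact template).
good = '[{"name": "train", "parameters": [{"key": "max_depth", "value": "5"}]}]'
bad = '[{"name": "train", // tuned in the notebook\n "parameters": []}]'

for label, text in (("good", good), ("bad", bad)):
    print(label, "->", check_config(text) or "OK")
```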

After the recipe is built, there are a few steps to get to the fully productionized pipeline.

Step 1: First, we deploy the recipe to a model. This step requires you once again to train and evaluate the model, and then score the model. We found this step a little confusing because it gives users the option to change the hyperparameters and review the model performance, whereas Data Scientists usually set the parameters earlier during the initial model development in a notebook.

Step 2: Once the model is deployed, you can publish it as a Service in the UI. This gives end users easy access to a machine learning service so they can score data without touching the code.

Step 3: This service can also be scheduled for automated training and scoring runs on new training and scoring datasets. Scoring can be daily or, in theory, real-time. Retraining should be decided on a case-by-case basis as part of model maintenance. This matters because a model that is regularly retrained on a refreshed training set keeps adapting to new data, which is reminiscent of the idea behind reinforcement learning.

Tip: In reinforcement learning, a model (an agent) receives feedback in the form of rewards for its actions and continuously learns and improves based on that feedback. Scheduling a model to be retrained on some cadence is not technically reinforcement learning, but it is important to refresh your model frequently so that it can adapt to changing environments.
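What "refresh frequently" means is a judgment call. As one hypothetical policy, not an AEP feature, the sketch below retrains on a fixed cadence and also retrains early when AUC on recently closed opportunities falls noticeably below the AUC measured at training time. The 30-day cadence and 0.05 tolerance are placeholders to tune for your own sales cycle.

```python
from datetime import date


def should_retrain(last_trained, today, max_age_days=30,
                   recent_auc=None, baseline_auc=None, max_drop=0.05):
    """Hypothetical retraining policy: fixed cadence, or early on AUC decay.

    recent_auc: AUC on opportunities that closed since the last training run
    baseline_auc: AUC measured on the test set when the model was trained
    """
    # Retrain once the model is older than the cadence allows.
    if (today - last_trained).days >= max_age_days:
        return True
    # Otherwise retrain early only if performance has clearly degraded.
    if recent_auc is not None and baseline_auc is not None:
        return baseline_auc - recent_auc > max_drop
    return False


print(should_retrain(date(2021, 1, 1), date(2021, 1, 10),
                     recent_auc=0.70, baseline_auc=0.80))
```

Because opportunity outcomes arrive slowly in a long B2B sales cycle, the AUC check only becomes meaningful once enough recently scored opportunities have closed.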

Step 4: AEP also makes it easy to update models by publishing new recipes that can be swapped out seamlessly in production. For example, we are already planning the second iteration of our model, which will add Google Analytics data to capture the web activity associated with the opportunities being scored.

Closing the Loop and Sharing What We Learned

With any project like this one, success depends not only on the process working correctly but also on the business value it creates. After determining the likelihood of each opportunity becoming a new customer, we fed these results straight into Salesforce.

From there, we hosted education sessions with the marketing and business development teams, where we talked through the results, worked with the marketing team to change reporting so the information is surfaced and distributed, and discussed use cases and ways to act on the information.

By closing the loop from a technical standpoint, we have the first round of results that will be analyzed and improved on. The model can continue to run, ingest information, and calculate probabilities. Through touchpoints with the stakeholders, we’re able to validate how accurate and helpful these results are, and can use that information to further refine the model and the inputs as needed.

Adobe Experience Platform - Helping Make Meaningful Impact with Data

Overall, AEP is a solid CDP to consider if your company is ready to make use of siloed marketing data across multiple channels. For example, by combining online behavior data with offline demographic data to create audience segments, a company can set up an automated system that targets site visitors with the proper messaging based on their propensity to purchase.

However, we discovered through our POC that, like many products when they first hit the market, AEP has limited up-to-date resources available online. So if you’re jumping into AEP on your own, even as a Data Scientist, be ready for a substantial learning curve. We hope this blog provides some guidance as you explore the Data Science Workspace in AEP.

And regardless of platform, we hope that following the problem-solving steps described here from Discovery through Activation will empower you to go beyond out-of-the-box methods and develop meaningful models with meaningful impact.