Salesforce Overview: Bulk API 2.0

Salesforce has released a new version, Bulk API 2.0, which makes it easier to process and sync data within Salesforce CRM. The Bulk API is available in two versions, Bulk API and Bulk API 2.0, both of which support data migration and data upload.

Bulk API is built on a custom REST framework, while Bulk API 2.0 is built on the standard REST API framework. Bulk API 2.0 lets users work with large data sets asynchronously: requests are processed in the background, so callers do not have to wait for a task to finish.

When a query request is initiated, the Bulk API automatically divides the complete query result into chunks and returns them one at a time. This helps avoid timeouts and gives teams time to process the current chunk before requesting the next one.
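To make the chunked retrieval concrete, here is a minimal Python sketch of a client loop that pulls query results one chunk at a time. It assumes the Bulk API 2.0 pattern in which `GET /services/data/vXX.X/jobs/query/{jobId}/results` returns one chunk of CSV plus a `Sforce-Locator` response header, and that the locator is the literal string `"null"` once no chunks remain. The HTTP call is abstracted into a hypothetical `fetch_chunk` callable so the paging logic can run without credentials.

```python
from typing import Callable, Iterator, Optional, Tuple

# Hypothetical fetcher: takes the current locator (None for the first request)
# and returns (csv_text, next_locator). A real client would wrap
# GET .../jobs/query/{jobId}/results?locator=<locator> here.
ChunkFetcher = Callable[[Optional[str]], Tuple[str, str]]


def iter_result_chunks(fetch_chunk: ChunkFetcher) -> Iterator[str]:
    """Yield each CSV chunk of a query job's results, one at a time."""
    locator: Optional[str] = None
    while True:
        csv_text, next_locator = fetch_chunk(locator)
        # The caller finishes this chunk before the next one is requested.
        yield csv_text
        # "null" (a string, not None) means there are no more chunks.
        if next_locator == "null":
            break
        locator = next_locator


# Usage with an in-memory stand-in for the REST endpoint.
fake_pages = {None: ("Id\n001A\n", "p2"), "p2": ("Id\n001B\n", "null")}
for chunk in iter_result_chunks(lambda loc: fake_pages[loc]):
    print(chunk, end="")
```

Because the loop is a generator, only one chunk needs to be held in memory at a time.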

When To Use Bulk API 2.0

Bulk API 2.0 is designed for processing large amounts of data. Operations involving more than 2,000 records are an ideal use case. For fewer than 2,000 records, use “bulkified” synchronous calls in REST (for example, Composite) or SOAP instead.
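The 2,000-record rule of thumb can be written as a small helper. This is only an illustration of the guideline above; the function name is hypothetical, and treating exactly 2,000 records as a synchronous case is an assumption, since the guideline does not address the boundary.

```python
def choose_api(record_count: int) -> str:
    """Suggest an API family using the 2,000-record rule of thumb (illustrative)."""
    if record_count < 0:
        raise ValueError("record_count must be non-negative")
    # More than 2,000 records: an asynchronous Bulk API 2.0 job.
    if record_count > 2000:
        return "Bulk API 2.0"
    # 2,000 or fewer (boundary handling assumed): bulkified synchronous calls.
    return "REST Composite or SOAP"


print(choose_api(50_000))
print(choose_api(150))
```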

Bulk API 2.0 simplifies the process to insert, update, upsert, and delete Salesforce data asynchronously using the Bulk framework. When compared to its predecessor, Bulk API, Bulk API 2.0 is more streamlined and easier to use.
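As a sketch of how little the client has to do, the following Python builds the three requests of a Bulk API 2.0 ingest job: open the job, upload the CSV data, and mark the upload complete. No batches are created by the client. The endpoint paths and JSON fields follow the Bulk API 2.0 ingest resources as we understand them; the API version `v60.0`, the job ID `750xx0000000001`, and the field `External_Id__c` are hypothetical placeholders. The sketch only builds `(method, path, body)` tuples and sends nothing over the network.

```python
import json
from typing import Optional, Tuple

API_VERSION = "v60.0"  # assumed example version; use one your org supports
INGEST_BASE = f"/services/data/{API_VERSION}/jobs/ingest"

# The four operations named above (Bulk API 2.0 also supports hardDelete).
OPERATIONS = {"insert", "update", "upsert", "delete"}

Request = Tuple[str, str, str]  # (HTTP method, path, body)


def create_job_request(sobject: str, operation: str,
                       external_id_field: Optional[str] = None) -> Request:
    """Step 1: open an ingest job for one object and one operation."""
    if operation not in OPERATIONS:
        raise ValueError(f"unsupported operation: {operation}")
    body = {"object": sobject, "contentType": "CSV",
            "operation": operation, "lineEnding": "LF"}
    if operation == "upsert":
        # Upsert matches existing records on an external ID field.
        if not external_id_field:
            raise ValueError("upsert requires external_id_field")
        body["externalIdFieldName"] = external_id_field
    return ("POST", INGEST_BASE + "/", json.dumps(body))


def upload_data_request(job_id: str, csv_data: str) -> Request:
    """Step 2: upload the CSV records (sent with Content-Type: text/csv)."""
    return ("PUT", f"{INGEST_BASE}/{job_id}/batches", csv_data)


def close_job_request(job_id: str) -> Request:
    """Step 3: mark the upload complete so Salesforce starts processing."""
    return ("PATCH", f"{INGEST_BASE}/{job_id}",
            json.dumps({"state": "UploadComplete"}))


# Usage: the job ID would come from the response to step 1.
print(create_job_request("Account", "upsert", "External_Id__c"))
print(close_job_request("750xx0000000001"))
```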

Differences Between Bulk API and Bulk API 2.0

Bulk API 2.0 uses a different limit mechanism to process data. Because of this limit mechanism, Bulk API 2.0 is considered best for optimizing API consumption for large objects.

| Bulk API | Bulk API 2.0 |
|---|---|
| Limits on the number of records, file size, and batches. | Limits are based on the maximum size of query results (in GB) per backup and on the daily limit per 24 hours. |
| Batches must be created manually. | No need to create batches; the system creates them internally. |
| Built on a custom REST framework, so it differs from other REST API integrations. | Built on the standard REST framework, so it is similar to other REST API integrations. |
| Supports parallel and serial processing. | Supports parallel processing. |
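To illustrate the "batches must be created manually" row, here is a Python sketch of the splitting work a Bulk API client does before uploading: each batch is a separate CSV payload with the header repeated and at most 10,000 records. With Bulk API 2.0, the same rows would be uploaded to a job as-is and Salesforce would split them internally. The function name is hypothetical, and the sketch ignores the per-batch byte-size limit, which a real client would also have to respect.

```python
from typing import List

MAX_RECORDS_PER_BATCH = 10_000  # per-batch record cap for Bulk API


def split_into_batches(header: str, rows: List[str],
                       size: int = MAX_RECORDS_PER_BATCH) -> List[str]:
    """Build CSV batch payloads by hand, as Bulk API (not 2.0) requires."""
    if size <= 0:
        raise ValueError("size must be positive")
    batches = []
    for start in range(0, len(rows), size):
        chunk = rows[start:start + size]
        # Every batch is a standalone CSV, so the header is repeated.
        batches.append("\n".join([header] + chunk) + "\n")
    return batches


rows = [f"Account {i}" for i in range(25_000)]
print(len(split_into_batches("Name", rows)))  # 25,000 rows -> 3 batches
```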

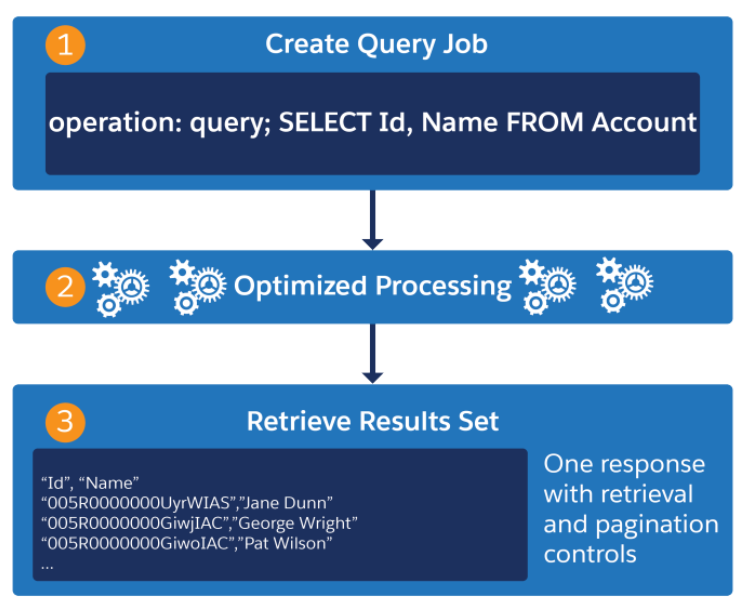

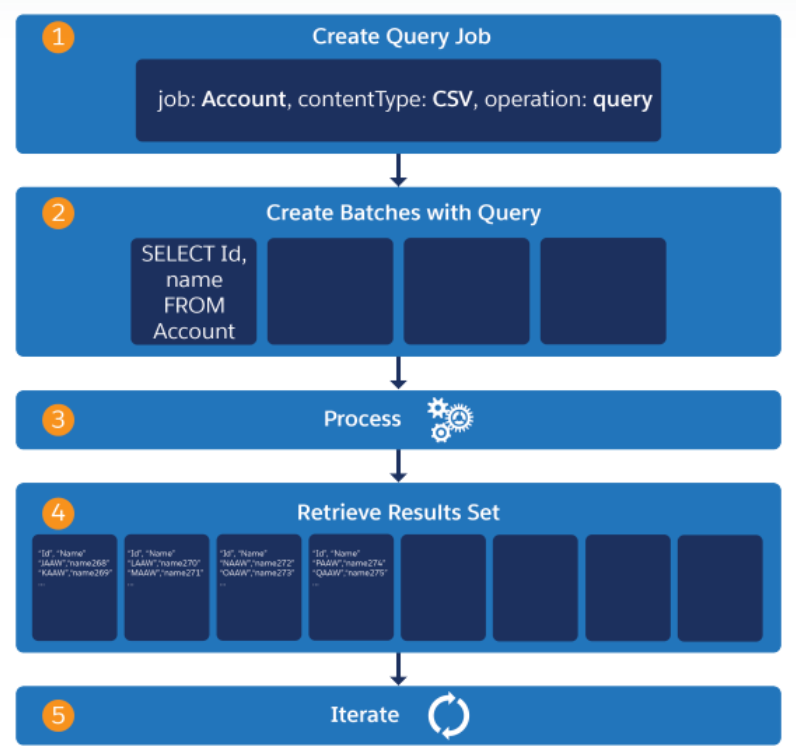

Here's how the Bulk API 2.0 and Bulk API query workflow works:

| Bulk API 2.0 | Bulk API |

|---|---|

|

|

Bulk API's query workflow is more complex: you create batches yourself and iterate through the retrieval of each result set. Although Bulk API 2.0 is the newer version and may seem the clearer choice, both versions remain available, each with its own features and limits. Choose the one that best fits your use case.
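For comparison, the Bulk API 2.0 query workflow reduces to three steps: create a query job, wait for it to finish, then read the results. The sketch below covers the first two steps in Python. It assumes the query job body `{"operation": "query", "query": ...}` posted to `/services/data/vXX.X/jobs/query`, and the terminal job states `JobComplete`, `Failed`, and `Aborted`. The status lookup is a hypothetical `get_state` callable standing in for `GET .../jobs/query/{jobId}`, and the polling interval and retry cap are arbitrary assumptions.

```python
import json
import time
from typing import Callable

# Job states after which polling can stop.
TERMINAL_STATES = {"JobComplete", "Failed", "Aborted"}


def create_query_job_body(soql: str) -> str:
    """Body for POST /services/data/vXX.X/jobs/query."""
    return json.dumps({"operation": "query", "query": soql})


def wait_for_job(get_state: Callable[[], str], poll_seconds: float = 5.0,
                 max_polls: int = 720,
                 sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll the job's state until it is terminal; return that state."""
    for _ in range(max_polls):
        state = get_state()
        if state in TERMINAL_STATES:
            return state
        sleep(poll_seconds)  # still UploadComplete or InProgress
    raise TimeoutError("job did not reach a terminal state")


# Usage with a scripted sequence of states and no real waiting.
states = iter(["UploadComplete", "InProgress", "JobComplete"])
print(wait_for_job(lambda: next(states), sleep=lambda s: None))
```

Once the job reports `JobComplete`, the results can be read chunk by chunk using the locator header.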

How Bulk Job Requests Are Processed

When you create a job in Bulk API 2.0, you specify which object is processed (for example, Account or Opportunity) and which action is performed (insert, upsert, update, or delete); for a query job, you specify the query whose records are returned. Salesforce automatically optimizes the job before processing it, which helps the job complete without timeouts or failures. To check the status of your job, go to Setup > Bulk Data Load Jobs. You can abort a job at any point in the process; however, batches that have already completed are not rolled back.
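Aborting a Bulk API 2.0 ingest job is a state change on the job resource. The Python sketch below builds that request, assuming `PATCH /services/data/vXX.X/jobs/ingest/{jobId}` with body `{"state": "Aborted"}`, and summarizes a job-info response using the `state`, `numberRecordsProcessed`, and `numberRecordsFailed` fields. Because completed work is not rolled back, the summary is a useful record of what had already been written when the job was stopped. The job ID and sample JSON are made up for illustration.

```python
import json
from typing import Dict, Tuple


def abort_job_request(api_version: str, job_id: str) -> Tuple[str, str, str]:
    """Build the request that moves an ingest job to the Aborted state."""
    path = f"/services/data/{api_version}/jobs/ingest/{job_id}"
    return ("PATCH", path, json.dumps({"state": "Aborted"}))


def summarize_job(job_info_json: str) -> Dict[str, object]:
    """Extract state and record counts from a job-info JSON response."""
    info = json.loads(job_info_json)
    return {
        "state": info.get("state"),
        # Records already processed stay processed even after an abort.
        "processed": info.get("numberRecordsProcessed", 0),
        "failed": info.get("numberRecordsFailed", 0),
    }


# Usage with a made-up job-info payload.
sample = '{"state": "Aborted", "numberRecordsProcessed": 20000, "numberRecordsFailed": 12}'
print(abort_job_request("v60.0", "750xx0000000001"))
print(summarize_job(sample))
```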

Use Cases Of Bulk API 2.0

Consolidating Data from Two Orgs into One: Bulk API 2.0 can be used to upload data during corporate acquisitions, when one company acquires another and two Salesforce instances must be merged into one.

Here is an example of how this works.

Let’s say a company, ADC Healthcare, has acquired another company, Atlas Healthcare. Both use Salesforce as their CRM and have decided to integrate and combine their systems. After the Salesforce instances are consolidated, the goal is a single instance, the surviving organization, that serves as the single source of truth.

The retiring organization has many objects, profiles, record types, picklists, and other important customizations that need to be merged into the surviving organization. The team decides to use Bulk API 2.0 to merge the large volume of data. Bulk API 2.0 can upload up to 150,000,000 records per 24-hour rolling period. It can process up to 15,000 batches, and each batch can contain up to 10,000 records. The team creates a job, and the records are processed in chunks to insert, update, upsert, or delete them asynchronously, so the team can submit a request and check the results later. To track current and recently completed bulk data load jobs, they type Bulk Data Load Jobs in the Quick Find box.
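The limits quoted above lend themselves to quick capacity planning. The Python sketch below estimates how many 10,000-record batches a migration needs and the minimum number of 24-hour periods it would take under the 150,000,000-record and 15,000-batch figures from the text. It assumes every batch is full and that no other jobs in the org consume the same daily allowance, so real timelines will be longer. The 400,000,000-record volume in the usage line is hypothetical.

```python
import math

DAILY_RECORD_LIMIT = 150_000_000  # records per 24-hour rolling period (from the text)
RECORDS_PER_BATCH = 10_000        # records per batch (from the text)
DAILY_BATCH_LIMIT = 15_000        # batches per 24-hour rolling period (from the text)


def migration_estimate(total_records: int) -> dict:
    """Lower-bound estimate of batches and 24-hour periods for a migration."""
    if total_records < 0:
        raise ValueError("total_records must be non-negative")
    batches = math.ceil(total_records / RECORDS_PER_BATCH)
    # Whichever daily limit binds first determines the number of periods.
    periods = max(math.ceil(total_records / DAILY_RECORD_LIMIT),
                  math.ceil(batches / DAILY_BATCH_LIMIT))
    return {"batches": batches, "min_24h_periods": periods}


# Hypothetical volume for the retiring organization.
print(migration_estimate(400_000_000))  # 40,000 batches over at least 3 periods
```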

Migrating Data from One Organization to Another: When you transfer data from one organization to another, you are dealing with a large data set, and you must account for the size, format, and accuracy of the source data.

For example, let’s say a company called Blue Lights has merged with Blooms and wants to migrate large volumes of data from the source organization to the target organization. Here, the source organization is Blue Lights, and the target organization is Blooms. The source organization has large numbers of records, business processes, and other customizations that need to be migrated to the target. Data Loader and SOQL queries cannot handle this volume well: Data Loader is time-consuming, and SOQL queries run from Apex may throw errors when they exceed the maximum allowed OFFSET value of 2,000.

In this scenario, the company needs a tool that keeps extracts within governor limits and is stable and efficient. Salesforce Bulk API 2.0 is the best choice for efficient Salesforce data extraction and migration. Unlike a normal Salesforce query, a Bulk API query is submitted to a queue, and the entire result is divided into chunks that are returned one at a time, so the client processes the current chunk before the next one comes in. As a result, the Bulk API optimizes resource usage and retrieves large data sets with fewer API calls.

Periodic Large Volume Data Push in Salesforce Integration: When you implement Salesforce, you often need to integrate additional applications. Although each integration scenario is unique, some applications update their data periodically, and those updates must also be applied to the Salesforce organization they are integrated with.

Let’s consider Company A, which integrates a Homegrown System application with its Salesforce org based on its business needs. The Homegrown System application stores pricing and discount data and is refreshed with the latest data once a quarter. Whenever the Homegrown System application is updated, Company A’s Salesforce organization must be updated with the same data. The company needs to update 20 million records, and an Apex-based approach that runs a batch for every 50,000 records would impact the org's health. So the company chooses Bulk API 2.0 to upload the large data set, since it can process up to 150,000,000 records per 24-hour period, which meets Company A's needs.
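A quarterly push of 20 million rows will usually be too large for a single upload, so a client typically splits the CSV into several payloads, for example one per ingest job. Salesforce documents a maximum upload size per job; check the current limits for your org. The byte budget in this Python sketch is a parameter you set, and the 100 MB figure in the usage comment is an assumption, not a quoted limit. The function name and the `Price__c` field are hypothetical. Each payload repeats the header so it is a valid standalone CSV.

```python
from typing import Iterable, Iterator, List


def split_by_size(header: str, rows: Iterable[str], max_bytes: int) -> Iterator[str]:
    """Group CSV rows into payloads of at most max_bytes (UTF-8), each with the header."""
    header_line = header + "\n"
    header_size = len(header_line.encode("utf-8"))
    current: List[str] = []
    size = header_size
    for row in rows:
        line = row + "\n"
        line_size = len(line.encode("utf-8"))
        if header_size + line_size > max_bytes:
            raise ValueError("a single row exceeds max_bytes")
        # Start a new payload when this row would push us over the budget.
        if current and size + line_size > max_bytes:
            yield header_line + "".join(current)
            current, size = [], header_size
        current.append(line)
        size += line_size
    if current:
        yield header_line + "".join(current)


# Hypothetical usage with an assumed 100 MB budget per upload:
# for payload in split_by_size("Id,Price__c", price_rows, 100 * 1024 * 1024):
#     ...create an ingest job, upload payload, close the job...
```

Streaming the rows through a generator keeps memory use bounded to one payload, which matters at this volume.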

Usage Limits and Other Considerations

When defining API consumption limits, consider the data characteristics of the organization you are backing up.

Some Salesforce organizations have many records that are not individually large, while others have fewer, data-heavy records. Data sets with many records can consume a large number of batch API calls even if they are not data-heavy. In that situation, Bulk API 2.0 can be used alongside Bulk API to optimize API consumption.

NOTE: The maximum volume of data per daily backup is 300 GB by default. Users can configure this limit.

Bulk API 2.0 is vital to keeping your business data secure and up to date within your Salesforce instance. The real benefit is time savings for teams: manually created batches are eliminated, the limits are restructured, and parallel processing reduces downtime. As a result, large amounts of data can be processed in shorter time periods, helping ensure your organization's data is secure, stored, and synced properly within your Salesforce CRM.