Activating Audiences with Customer Data Platforms and Machine Learning

Customer Data Platforms (CDPs) are leading the movement to help optimize digital flow and deliver hyper-personalized experiences. CDPs aggregate individual user data from disparate systems into a centralized location. In many cases, they also include built-in AutoML tools that aim to streamline the process of applying out-of-the-box modeling solutions to common data sets.

The ultimate goal of these tools is to improve customer lifetime value (CLV) by helping companies deliver real-time, relevant, and personalized experiences across digital and non-digital channels.

With so many options available, it’s easy to get stuck in a solution-forward mindset, which focuses on using a specific tool rather than accomplishing the business objectives.

Instead, we recommend taking a problem-first approach that uses business objectives to guide problem-solving, allowing flexibility for course correction along the way. This helps us implement the pre-packaged or customized solution that will drive the most impact in alignment with the business objectives.

We recently worked with our client, Invaluable, to leverage the data in their CDP. We used a problem-first approach to develop a custom machine learning model that identifies meaningful audience segments for effective on-site personalization.

Invaluable is the leading online marketplace for fine art, antiques, and collectibles. They help auction houses, galleries, and dealers deepen their client relationships by connecting people to over 4,000 of the world’s premier sellers.

In this blog post, we share how we progressed through our Data Science Lifecycle, how we utilized a problem-first approach, and some of the challenges we experienced along the way.

Problem-First Approach

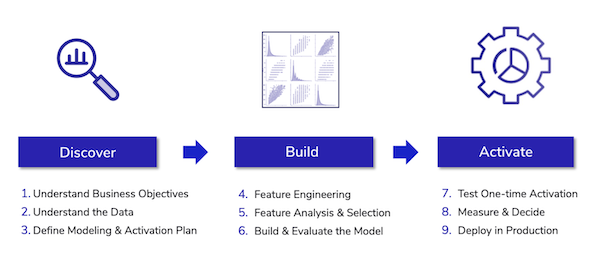

At Bounteous, we use a problem-first approach throughout the three stages of our Data Science Lifecycle: Discover, Build, and Activate. This helps us avoid becoming too set on a specific solution and allows us to keep the focus on driving measurable impact that addresses the business problem.

Discover

The Discover Stage is focused on gaining a solid understanding of business objectives and available data. It requires strong communication between the domain experts and data experts.

For any model to be useful, we must keep the business context central and identify an activation plan upfront. The knowledge we gain during this stage will help us choose the most appropriate machine learning model and design an effective testing/deployment plan.

Build

The Build Stage is focused on heads-down, technical data science work. This is when we create contextually meaningful features for a contextually meaningful model.

Activate

The Activate Stage is focused on implementing the activation plan to create measurable, valuable impact. It’s a key collaborative phase that requires the input of various teams. For example, our Advanced Analytics Team may work with the Marketing Services Team to target audiences with paid media to increase click-through rates.

Stage 1: Discover

We kicked off our problem discovery with Invaluable by conducting a series of stakeholder interviews. During these interviews, we uncovered their business objectives and discovered that they have three key audiences who purchase from their website:

Collectors: This audience buys for their own personal collection. While many Collectors are single purchasers, there is a sub-group of Collectors who make several purchases and engage with various product categories.

Brokers: This audience buys on behalf of collectors. They likely have a book of clients with varying interests and tastes that lead to a wide range of viewing, bidding, and buying behaviors.

Dealers: This audience owns galleries or antique stores. They purchase for the purpose of selling later to collectors. This audience is very conscious of fees associated with purchasing fine art, antiques, and collectibles.

Quantitative evidence indicated that Brokers may have been using the site solely for research purposes before circumventing the site when actually bidding on or purchasing items. This revealed a potential leak in Invaluable’s conversion funnel, resulting in unrealized auction fees.

Our aim was to either identify and validate these three audiences or uncover other audiences by modeling their website and purchase behavior. Our activation plan included ways to engage with the audiences:

- Develop personalized on-site experiences and targeted marketing campaigns for each audience

- Develop a loyalty program for Brokers and incentivize them to bid or purchase through the site

We also needed a strong understanding of Invaluable’s data ecosystem. During our client stakeholder meetings, we identified two relevant sources of data:

User Behavior: Invaluable’s CDP, Interaction Studio (formerly Evergage), has extensive historical user engagement data, including purchases, bids, and other auction interactions. For a full breakdown of Evergage data, see their data dictionary.

Self-Identified User Roles: This data consists of survey results where users selected their own audience type from a list of more than 30 roles. We consolidated these detailed roles into the three main audience groups: Collectors, Brokers, and Dealers.
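As a rough illustration of that consolidation step, the sketch below maps free-form survey roles onto the three audience groups with a lookup table. The role names and their assignments are hypothetical placeholders, not the actual options from Invaluable's survey, and any role missing from the table is left unmapped rather than guessed.

```python
from collections import Counter

# Hypothetical survey roles; the real form listed more than 30 options.
ROLE_TO_AUDIENCE = {
    "private collector": "Collector",
    "hobbyist": "Collector",
    "art advisor": "Broker",
    "interior designer": "Broker",
    "gallery owner": "Dealer",
    "antique store owner": "Dealer",
}


def consolidate_role(raw_role):
    """Map a survey role to an audience group, or None if it is unmapped."""
    return ROLE_TO_AUDIENCE.get(raw_role.strip().lower())


# Made-up responses, including inconsistent casing and an unmapped role.
survey_responses = ["Private Collector", "Gallery Owner", " art advisor ", "Student"]
audiences = [consolidate_role(role) for role in survey_responses]

# Count only the responses that mapped to one of the three audiences.
audience_counts = Counter(a for a in audiences if a is not None)
print(audiences)
print(audience_counts)
```

Keeping unmapped roles as `None` makes it easy to see how much of the survey falls outside the three groups before any modeling starts.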

Pro Tip: User behavior data can be vast, sparse, and noisy. For a thorough discovery process, we strongly recommend that you perform a data inventory and brief analysis to ensure that there are enough relevant, quality features available for modeling purposes.

Exploring the data early on is a key step in most modeling projects. In this case, it helped us to:

- Identify outliers and missing data from a quality and contextual perspective

- Identify which variables may be most telling signals for the model

- Inspire another pass at feature engineering

This exercise helped us map data requirements and vet our approach to solving Invaluable’s business problem.

Pro Tip: Understanding the business allows us to create meaningful solutions. Understanding the data helps us ensure our solutions are feasible.

Stage 2: Build

Our thorough Discovery Stage gave us a strong foundation for creating and analyzing possible model features, allowing us to move more quickly through the Build Stage.

Supervised Learning for Known Audience Classification

We started with a supervised learning approach that leveraged the Self-Identified User Roles data as a training set in order to classify users as Collectors, Brokers, or Dealers.

Using standard supervised techniques, including Random Forest, Logistic Regression, and Support Vector Classification (SVC), we fit and tuned several models, iterating several times through different sets of features that could represent defining behaviors.

Unfortunately, we kept hitting a performance ceiling. By evaluating the Weighted F1 Score, we saw that our models classified Collectors significantly better than Brokers and Dealers.

Pro Tip: With multi-class classification, the F1 Score is often a much better indicator of performance than the Accuracy Score, especially when the groups being classified are unbalanced. The F1 Score is the harmonic mean of Precision and Recall, and looking at it for each group gives a better understanding of the strengths and weaknesses of your model.
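To make the tip concrete, here is a minimal sketch in plain Python that computes accuracy, per-class F1, and a support-weighted F1 on a small, made-up set of labels. The labels and the "always predict Collector" model are illustrative assumptions, not Invaluable's data or our actual models. In practice a library such as scikit-learn would do this arithmetic for you.

```python
def per_class_scores(y_true, y_pred):
    """Return {label: (precision, recall, f1)} for every label in y_true."""
    scores = {}
    pairs = list(zip(y_true, y_pred))
    for label in sorted(set(y_true)):
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        if precision + recall:
            f1 = 2 * precision * recall / (precision + recall)
        else:
            f1 = 0.0
        scores[label] = (precision, recall, f1)
    return scores


def weighted_f1(y_true, y_pred):
    """Average the per-class F1 scores, weighted by each class's share of y_true."""
    scores = per_class_scores(y_true, y_pred)
    total = len(y_true)
    return sum(f1 * y_true.count(label) / total for label, (_, _, f1) in scores.items())


# Made-up, unbalanced labels: 8 Collectors, 1 Broker, 1 Dealer.
y_true = ["Collector"] * 8 + ["Broker", "Dealer"]
# A lazy model that predicts the majority class for everyone.
y_pred = ["Collector"] * 10

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
scores = per_class_scores(y_true, y_pred)

print(f"accuracy:    {accuracy:.2f}")
print(f"weighted F1: {weighted_f1(y_true, y_pred):.2f}")
for label, (precision, recall, f1) in scores.items():
    print(f"{label:<10} precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Accuracy comes out at 80% even though the model never finds a single Broker or Dealer. The per-class F1 scores of zero for those two groups are what expose the problem.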

We suspected that Collectors in the training set behaved consistently enough to drive strong model performance, and that this consistency was missing for Brokers and Dealers. We realized that unlike Collectors, Brokers and Dealers were often bidding on items for other end-users. We tried to circumvent this issue by combining them into a single group of “proxy” bidders. We chose to label our new combined group as "Professionals."

However, even with the new Professionals group, the new set of models improved only nominally. Our attempts at redefining our target variables were fruitless, and no amount of feature engineering or hyperparameter tuning would increase model performance.

In our blog on Lead Scoring with Salesforce Data in the Adobe Experience Platform, we demonstrate the effect of enhanced feature engineering on model performance. This is often the biggest lever that data scientists have to pull, so when it fails to move the needle, it can be a sign of a poor quality training set.

Subjectivity is a common issue with self-identified data. In the Self-Identified User Roles training dataset, the roles in the form list may not have been well defined to the user, the user may not have cared enough to accurately self-identify, or the user may have had incentive to choose a role different from their own.

Pro Tip: Quality training sets for supervised learning can be difficult to find. If you have the resources, we recommend manually tagging or reviewing the training set for consistency and accuracy. Just remember: your model is only as good as your data.

We had anticipated that the quality of our training set could limit our model performance. Turning back to the business objective, we decided to try another approach.

Unsupervised Learning for New Audience Discovery

This time, we took an unsupervised learning approach. If we couldn’t classify specific roles, then maybe we could find clusters of users that could either map back to the original roles or uncover new audiences of interest.

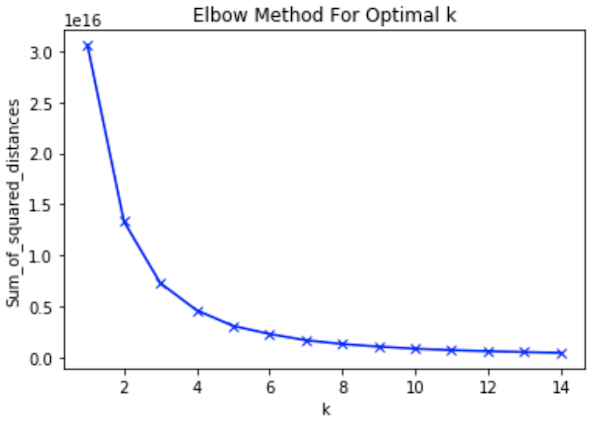

K-means is one of the most popular unsupervised clustering methods. In k-means clustering, you must specify the number of clusters (in this case audiences) you want to see in the results. The Elbow Method is often used to determine k. It works by fitting a k-means model for each k in a range that you specify. It then plots, for each k, the sum of squared distances from every point to its nearest cluster center (the error). To find the optimal k, you look for the "Elbow" on the graph. The Elbow represents the point of diminishing returns from increasing the number of clusters to reduce error.

We plotted the sum of squared distances for 1-15 clusters and found the Elbow at 4 clusters. Therefore, we fit our final k-means model with 4 clusters and were left with audiences labeled 1-4.
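The Elbow Method can be sketched in a few lines of plain Python. The example below runs a basic k-means (Lloyd's algorithm) on toy two-dimensional points built around three centers, records the sum of squared distances for each k, and picks the k where the improvement drops off most sharply. The data, the deterministic farthest-point seeding, and the `elbow_k` heuristic are all assumptions made for illustration. In our project we read the Elbow off a plot, and the toy data here is unrelated to Invaluable's, so the k it finds is not the project's result.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_point_init(points, k):
    """Deterministic seeding: start at the first point, then repeatedly add
    the point farthest from the centroids chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(squared_distance(p, c) for c in centroids))
        )
    return centroids


def kmeans_inertia(points, k, n_iter=100):
    """Run Lloyd's algorithm and return the sum of squared distances."""
    centroids = farthest_point_init(points, k)
    for _ in range(n_iter):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: squared_distance(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        updated = [
            tuple(sum(dim) / len(c) for dim in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    return sum(min(squared_distance(p, c) for c in centroids) for p in points)


def elbow_k(inertias):
    """Heuristic: the k after which the drop in inertia shrinks the most."""
    ks = sorted(inertias)
    return max(
        ks[1:-1],
        key=lambda k: (inertias[k - 1] - inertias[k]) - (inertias[k] - inertias[k + 1]),
    )


# Toy data: five points around each of three well-separated centers.
offsets = [(-1, 0), (1, 0), (0, -1), (0, 1), (0, 0)]
centers = [(0, 0), (10, 0), (5, 9)]
points = [(cx + dx, cy + dy) for cx, cy in centers for dx, dy in offsets]

inertias = {k: kmeans_inertia(points, k) for k in range(1, 7)}
best_k = elbow_k(inertias)

for k, inertia in inertias.items():
    print(f"k={k}: sum of squared distances = {inertia:.2f}")
print("elbow at k =", best_k)
```

On real behavioral data the curve is rarely this clean, which is why inspecting the plot (and the resulting clusters) matters more than any automatic rule.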

When we joined our cluster numbers back to the behavioral data and analyzed the results, we were not able to identify any meaningful patterns that we could use to summarize and label each audience. Without a meaningful label, it would be almost impossible to activate on each audience.

Pro Tip: The difficulty with clustering techniques is that the model doesn’t tell you what those clusters represent. It’s up to you to find meaning in them and apply labels to your resulting audiences.

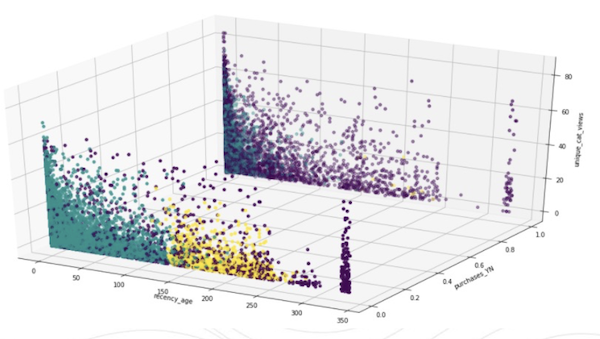

Thankfully, k-means is not the only unsupervised clustering method out there. HDBSCAN is a density-based clustering algorithm (unlike k-means, which is a centroid-based or distance-based algorithm). It’s less sensitive to noisy outliers, and it allows clusters of varying size, density, and shape to be identified.

Using this method, we identified three very distinct clusters.
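To show what "density-based" means in practice, the sketch below implements plain DBSCAN, a simpler relative of HDBSCAN that uses a single fixed density threshold. It is not HDBSCAN itself and not the model we fit for Invaluable. HDBSCAN extends the same idea across many density levels, so you don't have to pick one radius. The toy points and the `eps` and `min_samples` values are assumptions chosen for illustration.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def dbscan(points, eps, min_samples):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""

    def neighbors(i):
        # Indices of all points within eps of point i (including i itself).
        return [
            j for j, q in enumerate(points)
            if squared_distance(points[i], q) <= eps ** 2
        ]

    labels = [None] * len(points)
    cluster_id = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # provisional noise; may later become a border point
            continue
        # Point i is a core point: start a new cluster and grow it outward.
        cluster_id += 1
        labels[i] = cluster_id
        queue = list(seeds)
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster_id  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_samples:
                queue.extend(j_neighbors)  # j is also a core point: keep expanding
    return labels


# Toy data: a compact blob, an elongated chain, and one isolated outlier.
blob = [(0, 0), (0, 1), (1, 0), (1, 1), (0.5, 0.5)]
chain = [(5 + 0.5 * i, 5) for i in range(8)]
outlier = [(20, 20)]
points = blob + chain + outlier

labels = dbscan(points, eps=1.1, min_samples=3)
print(labels)
```

Two things differ from k-means here: the number of clusters is never specified, and the isolated point is labeled as noise (-1) instead of being forced into the nearest cluster.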

When analyzing these clusters to figure out which characteristics made them distinct, we found clear differences between each audience when looking at their:

- Purchase Power - the number of times customers purchased and revenue per purchase

- Activity Levels - customer site engagement through frequency, recency, and other interactive behaviors
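Profiling clusters along these two dimensions mostly comes down to grouping users by cluster label and comparing averages. The sketch below does that in plain Python. The rows, the column choices (purchases, revenue per purchase, visits in the last 90 days), and the numbers are all made up for illustration and do not reflect Invaluable's data.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical per-user rows:
# (cluster, purchases, revenue_per_purchase, visits_last_90d)
users = [
    (0, 1, 120.0, 6),
    (0, 0, 0.0, 8),
    (1, 9, 950.0, 30),
    (1, 11, 1050.0, 26),
    (2, 0, 0.0, 1),
    (2, 0, 0.0, 0),
]


def profile_clusters(rows):
    """Summarize purchase power and activity level for each cluster."""
    grouped = defaultdict(list)
    for cluster, *features in rows:
        grouped[cluster].append(features)
    return {
        cluster: {
            "users": len(members),
            "avg_purchases": mean(m[0] for m in members),
            "avg_revenue_per_purchase": mean(m[1] for m in members),
            "avg_visits_last_90d": mean(m[2] for m in members),
        }
        for cluster, members in sorted(grouped.items())
    }


profiles = profile_clusters(users)
for cluster, summary in profiles.items():
    print(cluster, summary)
```

A table like this is what turns anonymous cluster numbers into audiences you can name and describe.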

Based on their characteristics, we were able to label the clusters and write a profile for each one to explain what made them unique. We chose representative names for each cluster: Potential Buyers, Super Buyers, and Cold Users.

While our original plan was to identify “Brokers,” we prioritized the business objective of identifying the leak in the conversion funnel. The Potential Buyers and Cold Users could be targeted to increase user retention, engagement, and revenue.

It was a long process, but we had finally discovered a truly meaningful model output that we could use in our activation plan. This goes to show the power of problem-first thinking—data science is not always a straight path, but with enough business context, creativity, and perseverance, we can uncover meaningful results in almost any dataset.

Stage 3: Activate

The Activate Stage is where we bring our original activation plan to the forefront. This stage typically involves collaboration across many different teams, and its main purpose is to put the model results to use. Our activation plan for Invaluable included creating tests for delivering personalized experiences and creating marketing strategies for targeting users with loyalty programs.

Using the model results, Invaluable can improve customer lifetime value by personalizing experiences for Potential Buyers, Super Buyers, and Cold Users via:

- Site content personalization

- Special promotions for high-value customers

- Email targeting personalization

- A/B testing

The first step on our activation roadmap was an on-site A/B test to surface the Buyer Parity offer to the Potential Buyers. The purpose of this test was to build confidence in online bidding and reduce the tendency to leave the auction site and bid directly with auction houses.

The process doesn’t end here. We built a great foundation for business impact, but there is always room for iteration and automation to fine-tune results and (most importantly) expand the impact of activation.

The Future of Data Science

As CDPs and other tools for hyper-personalization and journey orchestration become more prevalent, marketers can capitalize on these platforms to streamline the analytics process and gain modeling power.

While automation is always a goal when it comes to digital flow and creating personalized customer experiences at every touchpoint, it's important not to be caught up in solution-forward thinking. By keeping the business problem central and staying flexible about solutions and how they are implemented, digital marketers can ensure their machine learning solutions are meaningful and effective.

Data science is an art form, and meaningful problem solving still requires a combination of business context, creativity, and flexibility.